Shibboleths: The Perils of Voiceless Sibilant Fricatives, Idiot Lights, and Other Binary-Outcome Tests

AS-SALT, JORDAN — Dr. Reza Al-Faisal once had a job offer from Google to work on cutting-edge voice recognition projects. He turned it down. The 37-year-old Stanford-trained professor of engineering at Al-Balqa’ Applied University now leads a small cadre of graduate students in a government-sponsored program to keep Jordanian society secure from what has now become an overwhelming influx of refugees from the Palestinian-controlled West Bank. “Sometimes they visit relatives here in Jordan and try to stay here illegally,” he says. “We already have our own unemployment problems, especially among the younger generation.”

Al-Faisal’s group has come up with a technological solution, utilizing drones and voice recognition. “The voice is the key. Native Arabic speakers in West Bank and East Bank populations have noticeable differences in their use of voiceless sibilant fricatives.” He points to a quadcopter drone, its four propellers whirring as it hovers in place. “We have installed a high-quality audio system onto these drones, with a speaker and directional microphones. The drone is autonomous. It presents a verbal challenge to the suspect. When the suspect answers, digital signal processing computes the coefficient of palatalization. East Bank natives have a mean palatalization coefficient of 0.85. With West Bank natives it is only 0.59. If the coefficient is below the acceptable threshold, the drone immobilizes the suspect with nonlethal methods until the nearest patrol arrives.”

One of Al-Faisal’s students presses a button and the drone zooms up and off to the west, embarking on another pilot run. If Al-Faisal’s system, the SIBFRIC-2000, proves to be successful in these test runs, it is likely to be used on a larger scale — so that limited Jordanian resources can cover a wider area to patrol for illegal immigrants. “Two weeks ago we caught a group of eighteen illegals with SIBFRIC. Border Security would never have found them. It’s not like in America, where you can discriminate against people because they look different. East Bank, West Bank — we all look the same. We sound different. I am confident the program will work.”

But some residents of the region are skeptical. “My brother was hit by a drone stunner on one of these tests,” says a 25-year old bus driver, who did not want his name to be used for this story. “They said his voice was wrong. Our family has lived in Jordan for generations. We are proud citizens! How can you trust a machine?”

Al-Faisal declines to give out statistics on how many cases like this have occurred, citing national security concerns. “The problem is being overstated. Very few of these incidents have occurred, and in each case the suspect is unharmed. It does not take long for Border Security to verify citizenship once they arrive.”

Others say there are rumors of voice coaches in Nablus and Ramallah, helping desperate refugees to beat the system. Al-Faisal is undeterred. “Ha ha ha, this is nonsense. SIBFRIC has a perfect ear; it can hear the slightest nuances of speech. You cannot cheat the computer.”

When asked how many drones are in the pilot program, Al-Faisal demures. “More than five,” he says, “fewer than five hundred.” Al-Faisal hopes to ramp up the drone program starting in 2021.

Shame Old Shtory, Shame Old Shong and Dansh

Does this story sound familiar? It might. Here’s a similar one from several thousand years earlier:

5 And the Gileadites took the passages of Jordan before the Ephraimites: and it was so, that when those Ephraimites which were escaped said, Let me go over; that the men of Gilead said unto him, Art thou an Ephraimite? If he said, Nay; 6 Then said they unto him, Say now Shibboleth: and he said Sibboleth: for he could not frame to pronounce it right. Then they took him, and slew him at the passages of Jordan: and there fell at that time of the Ephraimites forty and two thousand.

This is the reputed biblical origin of the term shibboleth, which has come to mean any distinguishing cultural behavior that can be used to identify a particular group, not just a linguistic one. The Ephraimites couldn’t say SHHHH and it became a dead giveaway of their origin.

In this article, we’re going to talk about the shibboleth and several other diagnostic tests which have one of two outcomes — pass or fail, yes or no, positive or negative — and some of the implications of using such a test. And yes, this does impact embedded systems. We’re going to spend quite a bit of time looking at a specific example in mathematical terms. If you don’t like math, just skip it whenever it gets too “mathy”.

A quantitative shibboleth detector

So let’s say we did want to develop a technological test for separating out people who couldn’t say SHHH, and we had some miracle algorithm to evaluate a “palatalization coefficient” \( P_{SH} \) for voiceless sibilant fricatives. How would we pick the threshold?

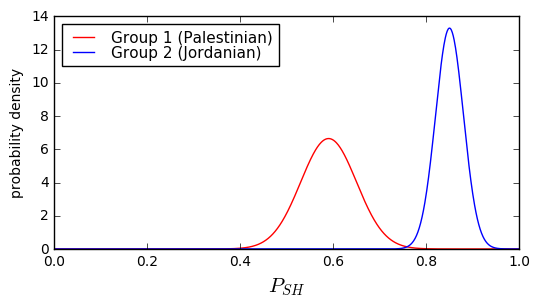

Well, the first thing to do is try to model the system somehow. We took a similar approach in an earlier article on design margin, where our friend the Oracle computed probability distributions for boiler pressures. Let’s do the same here. Suppose the Palestinians (which we’ll call Group 1) have a \( P_{SH} \) which roughly follows a Gaussian distribution with mean μ = 0.59 and standard deviation σ = 0.06, and the Jordanians (which we’ll call Group 2) have a \( P_{SH} \) which is also a Gaussian distribution with a mean μ = 0.85 and a standard deviation σ = 0.03.

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import scipy.stats

from collections import namedtuple

class Gaussian1D(namedtuple('Gaussian1D','mu sigma name id color')):

""" Normal (Gaussian) distribution of one variable """

def pdf(self, x):

""" probabiliy density function """

return scipy.stats.norm.pdf(x, self.mu, self.sigma)

def cdf(self, x):

""" cumulative distribution function """

return scipy.stats.norm.cdf(x, self.mu, self.sigma)

d1 = Gaussian1D(0.59, 0.06, 'Group 1 (Palestinian)','P','red')

d2 = Gaussian1D(0.85, 0.03, 'Group 2 (Jordanian)','J','blue')

def show_binary_pdf(d1, d2, x, fig=None, xlabel=None):

if fig is None:

fig = plt.figure(figsize=(6,3))

ax=fig.add_subplot(1,1,1)

for d in (d1,d2):

ax.plot(x,d.pdf(x),label=d.name,color=d.color)

ax.legend(loc='best',labelspacing=0,fontsize=11)

if xlabel is not None:

ax.set_xlabel(xlabel, fontsize=15)

ax.set_ylabel('probability density')

return ax

show_binary_pdf(d1,d2,x=np.arange(0,1,0.001), xlabel='$P_{SH}$');

Hmm, we have a dilemma here. Both groups have a small possibility that the \( P_{SH} \) measurement is in the 0.75-0.8 range, and this makes it harder to distinguish. Suppose we decided to set the threshold at 0.75. What would be the probability of our conclusion being wrong?

for d in (d1,d2):

print '%-25s %f' % (d.name, d.cdf(0.75))Group 1 (Palestinian) 0.996170 Group 2 (Jordanian) 0.000429

Here the cdf method calls the scipy.stats.norm.cdf function to compute the cumulative distribution function, which is the probability that a given sample from the distribution will be less than a given amount. So there’s a 99.617% chance that Group 1’s \( P_{SH} < 0.75 \), and a 0.0429% chance that Group 2’s \( P_{SH} < 0.75 \). One out of every 261 samples from Group 1 will pass the test (though we were hoping for them to fail) — this is known as a false negative, because a condition that exists (\( P_{SH} < 0.75 \)) remains undetected. One out of every 2331 samples from Group 2 will fail the test (though we were hoping for them to pass) — this is known as a false positive, because a condition that does not exist (\( P_{SH} < 0.75 \)) is mistakenly detected.

The probabilities of false positive and false negative are dependent on the threshold:

for threshold in [0.72,0.73,0.74,0.75,0.76,0.77,0.78]:

c = [d.cdf(threshold) for d in (d1,d2)]

print("threshold=%.2f, Group 1 false negative=%7.5f%%, Group 2 false positive=%7.5f%%"

% (threshold, 1-c[0], c[1]))

threshold = np.arange(0.7,0.801,0.005)

false_positive = d2.cdf(threshold)

false_negative = 1-d1.cdf(threshold)

figure = plt.figure()

ax = figure.add_subplot(1,1,1)

ax.semilogy(threshold, false_positive, label='false positive', color='red')

ax.semilogy(threshold, false_negative, label='false negative', color='blue')

ax.set_ylabel('Probability')

ax.set_xlabel('Threshold $P_{SH}$')

ax.legend(labelspacing=0,loc='lower right')

ax.grid(True)

ax.set_xlim(0.7,0.8);threshold=0.72, Group 1 false negative=0.01513%, Group 2 false positive=0.00001% threshold=0.73, Group 1 false negative=0.00982%, Group 2 false positive=0.00003% threshold=0.74, Group 1 false negative=0.00621%, Group 2 false positive=0.00012% threshold=0.75, Group 1 false negative=0.00383%, Group 2 false positive=0.00043% threshold=0.76, Group 1 false negative=0.00230%, Group 2 false positive=0.00135% threshold=0.77, Group 1 false negative=0.00135%, Group 2 false positive=0.00383% threshold=0.78, Group 1 false negative=0.00077%, Group 2 false positive=0.00982%

If we want a lower probability of false positives (fewer Jordanians detained for failing the test) we can do so by lowering the threshold, but at the expense of raising the probability of false negatives (more Palestinians unexpectedly passing the test and not detained), and vice-versa.

Factors used in choosing a threshold

There is a whole science around binary-outcome tests, primarily in the medical industry, involving sensivity and specificity, and it’s not just a matter of probability distributions. There are two other aspects that make a huge difference in determining a good test threshold:

- base rate — the probability of the condition actually being true, sometimes referred as prevalence in medical diagnosis

- the consequences of false positives and false negatives

Both of these are important because they affect our interpretation of false positive and false negative probabilities.

Base rate

The probabilities we calculated above are conditional probabilities — in our example, we calculated the probability that a person known to be from the Palestinian population passed the SIBFRIC test, and the probability that a person known to be from the Jordanian population failed the SIBFRIC tests.

It’s also important to consider the joint probability distribution — suppose that we are trying to detect a very uncommon condition. In this case the false positive rate will be amplified relative to the false negative rate. Let’s say we have some condition C that has a base rate of 0.001, or one in a thousand, and there is a test with a false positive rate of 0.2% and a false negative rate of 5%. This sounds like a really bad test: we should balance the probabilities by lowering the false negative rate and allowing a higher false positive rate. The net incidence of false positives for C will be 0.999 × 0.002 = 0.001998, and the net incidence of false negatives will be 0.001 × 0.05 = 0.00005. If we had one million people we test for condition C:

- 1000 actually have condition C

- 950 people are correctly diagnosed has having C

- 50 people will remain undetected (false negatives)

- 999000 do not actually have condition C

- 997002 people are correctly diagnosed as not having C

- 1998 people are incorrectly diagnosed as having C (false positives)

The net false positive rate is much higher than the net false negative rate, and if we had a different test with a false positive rate of 0.1% and a false negative rate of 8%, this might actually be better, even though the conditional probabilities of false positives and false negatives look even more lopsided. This is known as the false positive paradox.

Consequences

Let’s continue with our hypothetical condition C with a base rate of 0.001, and the test that has a false positive rate of 0.2% and a false negative rate of 5%. And suppose that the consequences of false positives are unnecessary hospitalization and the consequences of false negatives are certain death:

- 997002 diagnosed as not having C → relief

- 1998 incorrectly diagnosed as having C → unnecessary hospitalization, financial cost, annoyance

- 950 correctly diagnosed as having C → treatment, relief

- 50 incorrectly diagnosed as not having C → death

If the test can be changed, we might want to reduce the false negative, even if it raises the net false positive rate higher. Would lowering 50 deaths per million to 10 deaths per million be worth it if it raises the false positive rate of unnecessary hospitalization from 1998 per million to, say, 5000 per million? 20000 per million?

Consequences can rarely be compared directly; more often we have an apples-to-oranges comparison like death vs. unnecessary hospitalization, or allowing criminals to be free vs. incarcerating the innocent. If we want to handle a tradeof quantitatively, we’d need to assign some kind of metric for the consequences, like assigning a value of \$10 million for an unnecessary death vs. \$10,000 for an unnecessary hospitalization — in such a case we can minimize the net expected loss over an entire population. Otherwise it becomes an ethical question. In jurisprudence there is the idea of Blackstone’s ratio: “It is better that ten guilty persons escape than that one innocent suffer.” But the post-2001 political climate in the United States seems to be that detaining the innocent is more desirable than allowing terrorists or illegal immigrants to remain at large. Mathematics alone won’t help us out of these quandaries.

Optimizing a threshold

OK, so suppose we have a situation that can be described completely in mathematical terms:

- \( A \): Population A can be measured with parameter \( x \) with probability density function \( f_A(x) \) and cumulative density function \( F_A(x) = \int\limits_{-\infty}^x f_A(u) \, du \)

- \( B \): Population B can be measured with parameter \( x \) with PDF \( f_B(x) \) and CDF \( F_B(x) = \int\limits_{-\infty}^x f_B(u) \, du \)

- All samples are either in \( A \) or \( B \):

- \( A \) and \( B \) are disjoint (\( A \cap B = \varnothing \))

- \( A \) and \( B \) are collectively exhaustive (\( A \cup B = \Omega \), where \( \Omega \) is the full sample space, so that \( P(A \ {\rm or}\ B) = 1 \))

- Some threshold \( x_0 \) is determined

- For any given sample \( s \) that is in either A or B (\( s \in A \) or \( s \in B \), respectively), parameter \( x_s \) is compared with \( x_0 \) to determine an estimated classification \( a \) or \( b \):

- \( a \): if \( x_s > x_0 \) then \( s \) is likely to be in population A

- \( b \): if \( x_s \le x_0 \) then \( s \) is likely to be in population B

- Probability of \( s \in A \) is \( p_A \)

- Probability of \( s \in B \) is \( p_B = 1-p_A \)

- Value of various outcomes:

- \( v_{Aa} \): \( s \in A, x_s > x_0 \), correctly classified in A

- \( v_{Ab} \): \( s \in A, x_s \le x_0 \), incorrectly classified in B

- \( v_{Ba} \): \( s \in B, x_s > x_0 \), incorrectly classified in A

- \( v_{Bb} \): \( s \in B, x_s \le x_0 \), correctly classified in B

The expected value over all outcomes is

$$\begin{aligned} E[v] &= v_{Aa}P(Aa)+v_{Ab}P(Ab) + v_{Ba}P(Ba) + v_{Bb}P(Bb)\cr &= v_{Aa}p_A P(a\ |\ A) \cr &+ v_{Ab}p_A P(b\ |\ A) \cr &+ v_{Ba}p_B P(a\ |\ B) \cr &+ v_{Bb}p_B P(b\ |\ B) \end{aligned}$$

These conditional probabilities \( P(a\ |\ A) \) (denoting the probability of the classification \( a \) given that the sample is in \( A \)) can be determined with the CDF functions; for example, if the sample is in A then \( P(a\ |\ A) = P(x > x_0\ |\ A) = 1 - P(x \le x_0) = 1 - F_A(x_0) \), and once we know that, then we have

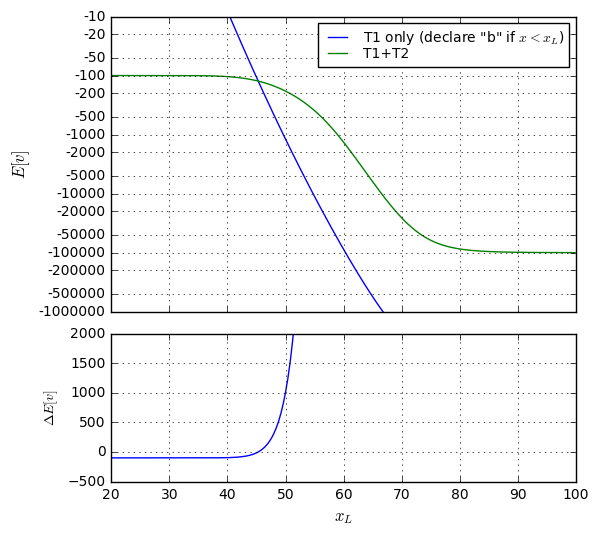

$$\begin{aligned} E[v] &= v_{Aa}p_A (1-F_A(x_0)) \cr &+ v_{Ab}p_A F_A(x_0) \cr &+ v_{Ba}p_B (1-F_B(x_0)) \cr &+ v_{Bb}p_B F_B(x_0) \cr E[v] &= p_A \left(v_{Ab} + (v_{Aa}-v_{Ab})\left(1-F_A(x_0)\right)\right) \cr &+ p_B \left(v_{Bb} + (v_{Ba}-v_{Bb})\left(1-F_B(x_0)\right)\right) \end{aligned}$$

\( E[v] \) is actually a function of the threshold \( x_0 \), and we can locate its maximum value by determining points where its partial derivative \( {\partial E[v] \over \partial {x_0}} = 0: \)

$$0 = {\partial E[v] \over \partial {x_0}} = p_A(v_{Ab}-v_{Aa})f_A(x_0) + p_B(v_{Bb}-v_{Ba})f_B(x_0)$$

$${f_A(x_0) \over f_B(x_0)} = \rho_p \rho_v = -\frac{p_B(v_{Bb}-v_{Ba})}{p_A(v_{Ab}-v_{Aa})}$$

where the \( \rho \) are ratios for probability and for value tradeoffs:

$$\begin{aligned} \rho_p &= p_B/p_A \cr \rho_v &= -\frac{v_{Bb}-v_{Ba}}{v_{Ab}-v_{Aa}} \end{aligned}$$

One interesting thing about this equation is that since probabilities \( p_A \) and \( p_B \) and PDFs \( f_A \) and \( f_B \) are positive, this means that \( v_{Bb}-v_{Ba} \) and \( v_{Ab}-v_{Aa} \) must be opposite signs, otherwise… well, let’s see:

Case study: Embolary Pulmonism

Suppose we have an obscure medical condition; let’s call it an embolary pulmonism, or EP for short. This must be treated within 48 hours, or the patient can transition in minutes from seeming perfectly normal, to a condition in which a small portion of their lungs degrade rapidly, dissolve, and clog up blood vessels elsewhere in the body, leading to extreme discomfort and an almost certain death. Before this rapid decline, the only symptoms are a sore throat and achy eyes.

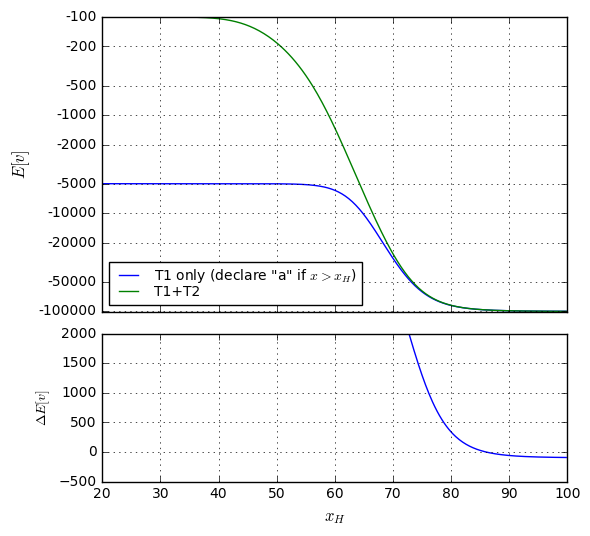

We’re developing an inexpensive diagnostic test \( T_1 \) (let’s suppose it costs \$1) where the patient looks into a machine and it takes a picture of the patient’s eyeballs and uses machine vision to come up with some metric \( x \) that can vary from 0 to 100. We need to pick a threshold \( x_0 \) such that if \( x > x_0 \) we diagnose the patient with EP.

Let’s consider some math that’s not quite realistic:

- condition A: patient has EP

- condition B: patient does not have EP

- incidence of EP in patients complaining of sore throats and achy eyes: \( p_A = \) 0.004% (40 per million)

- value of Aa (correct diagnosis of EP): \( v_{Aa}=- \) \$100000

- value of Ab (false negative, patient has EP, diagnosis of no EP): \( v_{Ab}=0 \)

- value of Ba (false positive, patient does not have EP, diagnosis of EP): \( v_{Ba}=- \)\$5000

- value of Bb (correct diagnosis of no EP): \( v_{Bb} = 0 \)

So we have \( v_{Ab}-v_{Aa} = \) \$100000 and \( v_{Bb}-v_{Ba} = \) \$5000, for a \( \rho_v = -0.05, \) which implies that we’re looking for a threshold \( x_0 \) where \( \frac{f_A(x_0)}{f_B(x_0)} = -0.05\frac{p_B}{p_A} \) is negative, and that never occurs with any real probability distributions. In fact, if we look carefully at the values \( v \), we’ll see that when we diagnose a patient with EP, it always has a higher cost: If we correctly diagnose them with EP, it costs \$100,000 to treat. If we incorrectly diagnose them with EP, it costs \$5,000, perhaps because we can run a lung biopsy and some other fancy test to determine that it’s not EP. Whereas if we give them a negative diagnosis, it doesn’t cost anything. This implies that we should always prefer to give patients a negative diagnosis. So we don’t even need to test them!

Patient: “Hi, Doc, I have achy eyes and a sore throat, do I have EP?”

Doctor: (looks at patient’s elbows studiously for a few seconds) “Nope!”

Patient: (relieved) “Okay, thanks!”

What’s wrong with this picture? Well, all the values look reasonable except for two things. First, we haven’t included the \$1 cost of the eyeball test… but that will affect all four outcomes, so let’s just state that the values \( v \) are in addition to the cost of the test. The more important issue is the false negative, the Ab case, where the patient is diagnosed incorrectly as not having EP, and it’s likely the patient will die. Perhaps the hospital’s insurance company has estimated a cost of \$10 million per case to cover wrongful death civil suits, in which case we should be using \( v _ {Ab} = -\$10^7 \). So here’s our revised description:

- condition A: patient has EP

- condition B: patient does not have EP

- incidence of EP in patients complaining of sore throats and achy eyes: \( p_A = \) 0.004% (40 per million)

- value of Aa (correct diagnosis of EP): \( v_{Aa}=- \) \$100000 (rationale: mean cost of treatment)

- value of Ab (false negative, patient has EP, diagnosis of no EP): \( v_{Ab}=-\$10^7 \) (rationale: mean cost of resulting liability due to high risk of death)

- value of Ba (false positive, patient does not have EP, diagnosis of EP): \( v_{Ba}=- \)\$5000 (rationale: mean cost of additional tests to confirm)

- value of Bb (correct diagnosis of no EP): \( v_{Bb} = 0 \) (rationale: no further treatment needed)

The equation for choosing \( x_0 \) then becomes

$$\begin{aligned} \rho_p &= p_B/p_A = 0.99996 / 0.00004 = 24999 \cr \rho_v &= -\frac{v_{Bb}-v_{Ba}}{v_{Ab}-v_{Aa}} = -5000 / {-9.9}\times 10^6 \approx 0.00050505 \cr {f_A(x_0) \over f_B(x_0)} &= \rho_p\rho_v \approx 12.6258. \end{aligned}$$

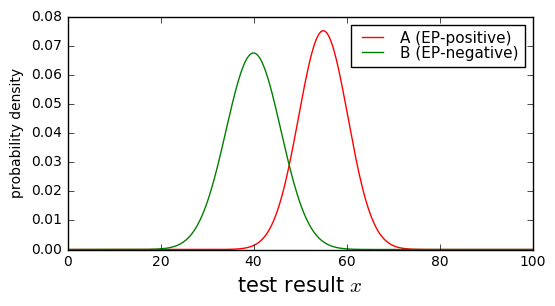

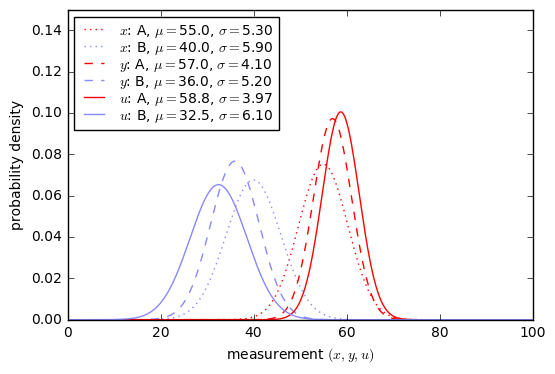

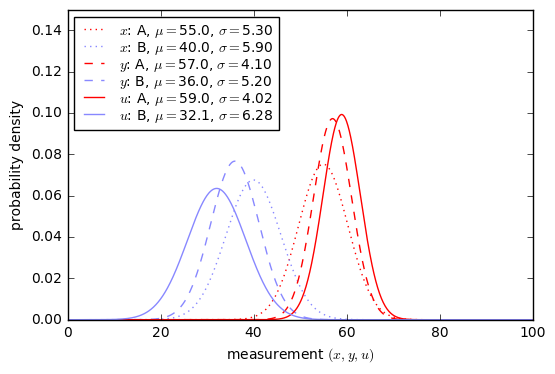

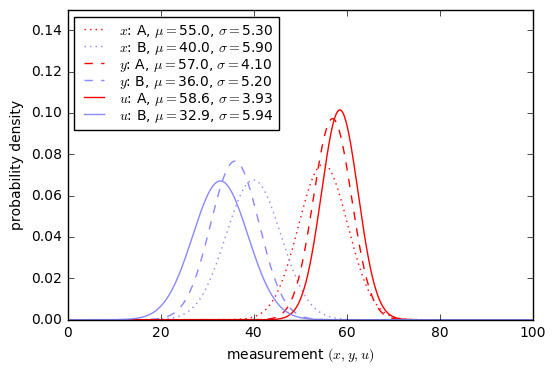

Now we need to know more about our test. Suppose that the results of the eye machine test have normal probability distributions with \( \mu=55, \sigma=5.3 \) for patients with EP and \( \mu=40, \sigma=5.9 \) for patients without EP.

x = np.arange(0,100,0.1) dpos = Gaussian1D(55, 5.3, 'A (EP-positive)','A','red') dneg = Gaussian1D(40, 5.9, 'B (EP-negative)','B','green') show_binary_pdf(dpos, dneg, x, xlabel = 'test result $x$');

Yuck. This doesn’t look like a very good test; there’s a lot of overlap between the probability distributions.

At any rate, suppose we pick a threshold \( x_0=47 \); what kind of false positive / false negative rates will we get, and what’s the expected overall value?

import IPython.core.display

from IPython.display import display, HTML

def analyze_binary(dneg, dpos, threshold):

"""

Returns confusion matrix

"""

pneg = dneg.cdf(threshold)

ppos = dpos.cdf(threshold)

return np.array([[pneg, 1-pneg],

[ppos, 1-ppos]])

def show_binary_matrix(confusion_matrix, threshold, distributions,

outcome_ids, ppos, vmatrix,

special_format=None):

if special_format is None:

special_format = {}

def cellinfo(c, p, v):

# joint probability = c*p

jp = c*p

return (c, jp, jp*v)

def rowcalc(i,confusion_row):

""" write this for rows containing N elements, not just 2 """

p = ppos if (i == 1) else (1-ppos)

return [cellinfo(c,p,vmatrix[i][j]) for j,c in enumerate(confusion_row)]

Jfmtlist = special_format.get('J')

cfmtlist = special_format.get('c')

vfmtlist = special_format.get('v')

try:

if isinstance(vfmtlist, basestring):

vfmt_general = vfmtlist

else:

vfmt_general = vfmtlist[0]

except:

vfmt_general = '%.3f'

def rowfmt(row,dist):

def get_format(fmt, icell, default):

if fmt is None:

return default

if isinstance(fmt,basestring):

return fmt

return fmt[icell] or default

def cellfmt(icell):

Jfmt = get_format(Jfmtlist, icell, '%.7f')

cfmt = get_format(cfmtlist, icell, '%.5f')

vfmt = get_format(vfmtlist, icell, '%.3f')

return '<td>'+cfmt+'<br>J='+Jfmt+'<br>wv='+vfmt+'</td>'

return '<th>'+dist.name+'</th>' + ''.join(

(cellfmt(i) % cell)

for i,cell in enumerate(row))

rows = [rowcalc(i,row) for i,row in enumerate(confusion_matrix)]

vtot = sum(v for row in rows for c,J,v in row)

if not isinstance (threshold, basestring):

threshold = 'x_0 = %s' % threshold

return HTML(('<p>Report for threshold \\(%s \\rightarrow E[v]=\\)'

+vfmt_general+'</p>')%(threshold,vtot)

+'<table><tr><td></td>'+

''.join('<th>%s</th>' % id for id in outcome_ids)

+'</tr>'

+''.join('<tr>%s</tr>' % rowfmt(row,dist)

for row,dist in zip(rows,distributions))

+'</table>')

threshold = 47

C = analyze_binary(dneg, dpos, threshold)

show_binary_matrix(C, threshold, [dneg, dpos], 'ba', 40e-6,[[0,-5000],[-1e7, -1e5]],

special_format={'v':'$%.2f'})Report for threshold \(x_0 = 47 \rightarrow E[v]=\)$-618.57

| b | a | |

|---|---|---|

| B (EP-negative) | 0.88228 J=0.8822406 wv=$0.00 | 0.11772 J=0.1177194 wv=$-588.60 |

| A (EP-positive) | 0.06559 J=0.0000026 wv=$-26.24 | 0.93441 J=0.0000374 wv=$-3.74 |

Here we’ve shown a modified confusion matrix showing for each of the four outcomes the following quantities:

- Conditional probability of each outcome: \( \begin{bmatrix}P(b\ |\ B) & P(a\ |\ B) \cr P(b\ |\ A) & P(a\ |\ A)\end{bmatrix} \) — read each entry like \( P(a\ |\ B) \) as “the probability of \( a \), given that \( B \) is true”, so that the numbers in each row add up to 1

- J: Joint probability of the outcome: \( \begin{bmatrix}P(Bb) & P(Ba) \cr P(Ab) & P(Aa)\end{bmatrix} \) — read each entry like \( P(Ba) \) as “The probability that \( B \) and \( a \) are true”, so that the numbers in the entire matrix add up to 1

- wv: Weighted contribution to expected value = joint probability of the outcome × its value

For example, if the patient does not have EP, there’s about an 88.2% chance that they will be diagnosed correctly and an 11.8% chance that the test will produce a false positive. If the patient does have EP, there’s about a 6.6% chance the test will produce a false negative, and a 93.4% chance the test will correctly diagnose that they have EP.

The really interesting thing here is the contribution to expected value. Remember, the false negative (Ab) is really bad, since it has a value of \$10 million, but it’s also very rare because of the low incidence of EP and the fact that condtional probability of a false negative is only 6.6%. Whereas the major contribution to expected value comes from the false positive case (Ba) that occurs in almost 11.8% of the population.

We should be able to use a higher threshold to reduce the expected cost over the population:

for threshold in [50, 55]:

C = analyze_binary(dneg, dpos, threshold)

display(show_binary_matrix(C, threshold, [dneg, dpos], 'ba', 40e-6,[[0,-5000],[-1e7, -1e5]],

special_format={'v':'$%.2f'}))Report for threshold \(x_0 = 50 \rightarrow E[v]=\)$-297.62

| b | a | |

|---|---|---|

| B (EP-negative) | 0.95495 J=0.9549161 wv=$0.00 | 0.04505 J=0.0450439 wv=$-225.22 |

| A (EP-positive) | 0.17274 J=0.0000069 wv=$-69.10 | 0.82726 J=0.0000331 wv=$-3.31 |

Report for threshold \(x_0 = 55 \rightarrow E[v]=\)$-229.52

| b | a | |

|---|---|---|

| B (EP-negative) | 0.99449 J=0.9944551 wv=$0.00 | 0.00551 J=0.0055049 wv=$-27.52 |

| A (EP-positive) | 0.50000 J=0.0000200 wv=$-200.00 | 0.50000 J=0.0000200 wv=$-2.00 |

The optimal threshold should probably be somewhere between \( x_0=50 \) and \( x_0=55 \), since in one case the contributions to expected value is mostly from the false positive case, and in the other from the false negative case. If we have a good threshold, these contributions are around the same order of magnitude from the false positive and false negative cases. (They won’t necessarily be equal, though.)

To compute this threshold we are looking for

$$\rho = {f_A(x_0) \over f_B(x_0)} = \rho_p\rho_v \approx 12.6258.$$

We can either solve it using numerical methods, or try to solve analytically using the normal distribution probability density

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}e^{-(x-\mu)^2/2\sigma^2}$$

which gives us

$$\frac{1}{\sigma_A}e^{-(x_0-\mu_A)^2/2\sigma_A{}^2} = \frac{1}{\sigma_B}\rho e^{-(x_0-\mu_B)^2/2\sigma_B{}^2}$$

and taking logs, we get

$$-\ln\sigma_A-(x_0-\mu_A)^2/2\sigma_A{}^2 = \ln\rho -\ln\sigma_B-(x_0-\mu_B)^2/2\sigma_B{}^2$$

If we set \( u = x_0 - \mu_A \) and \( \Delta = \mu_B - \mu_A \) then we get

$$-u^2/2\sigma_A{}^2 = \ln\rho\frac{\sigma_A}{\sigma_B} -(u-\Delta)^2/2\sigma_B{}^2$$

$$-\sigma_B{}^2u^2 = 2\sigma_A{}^2\sigma_B{}^2\left(\ln\rho\frac{\sigma_A}{\sigma_B} \right) -\sigma_A{}^2(u^2 - 2\Delta u + \Delta^2)$$

which simplifies to \( Au^2 + Bu + C = 0 \) with

$$\begin{aligned} A &= \sigma_B{}^2 - \sigma_A{}^2 \cr B &= 2\Delta\sigma_A{}^2 \cr C &= 2\sigma_A{}^2\sigma_B{}^2\left(\ln\rho\frac{\sigma_A}{\sigma_B}\right) - \Delta^2\sigma_A{}^2 \end{aligned}$$

We can solve this with the alternate form of the quadratic formula \( u = \frac{2C}{-B \pm \sqrt{B^2-4AC}} \) which can compute the root(s) even with \( A=0\ (\sigma_A = \sigma_B = \sigma) \), where it simplifies to \( u=-C/B=\frac{-\sigma^2 \ln \rho}{\mu_B - \mu_A} + \frac{\mu_B - \mu_A}{2} \) or \( x_0 = \frac{\mu_B + \mu_A}{2} - \frac{\sigma^2 \ln \rho}{\mu_B - \mu_A} \).

def find_threshold(dneg, dpos, ppos, vmatrix):

num = -(1-ppos)*(vmatrix[0][0]-vmatrix[0][1])

den = ppos*(vmatrix[1][0]-vmatrix[1][1])

rho = num/den

A = dneg.sigma**2 - dpos.sigma**2

ofs = dpos.mu

delta = dneg.mu - dpos.mu

B = 2.0*delta*dpos.sigma**2

C = (2.0 * dneg.sigma**2 * dpos.sigma**2 * np.log(rho*dpos.sigma/dneg.sigma)

- delta**2 * dpos.sigma**2)

if (A == 0):

roots = [ofs-C/B]

else:

D = B*B-4*A*C

roots = [ofs + 2*C/(-B-np.sqrt(D)),

ofs + 2*C/(-B+np.sqrt(D))]

# Calculate expected value, so that if we have more than one root,

# the caller can determine which is better

pneg = 1-ppos

results = []

for i,root in enumerate(roots):

cneg = dneg.cdf(root)

cpos = dpos.cdf(root)

Ev = (cneg*pneg*vmatrix[0][0]

+(1-cneg)*pneg*vmatrix[0][1]

+cpos*ppos*vmatrix[1][0]

+(1-cpos)*ppos*vmatrix[1][1])

results.append((root,Ev))

return results

find_threshold(dneg, dpos, 40e-6, [[0,-5000],[-1e7, -1e5]])[(182.23914143860179, -400.00000000000006), (53.162644275683974, -212.51747111423805)]

threshold =53.1626

for x0 in [threshold, threshold-0.1, threshold+0.1]:

C = analyze_binary(dneg, dpos, x0)

display(show_binary_matrix(C, x0, [dneg, dpos], 'ba', 40e-6,[[0,-5000],[-1e7, -1e5]],

special_format={'v':'$%.2f'}))Report for threshold \(x_0 = 53.1626 \rightarrow E[v]=\)$-212.52

| b | a | |

|---|---|---|

| B (EP-negative) | 0.98716 J=0.9871183 wv=$0.00 | 0.01284 J=0.0128417 wv=$-64.21 |

| A (EP-positive) | 0.36442 J=0.0000146 wv=$-145.77 | 0.63558 J=0.0000254 wv=$-2.54 |

Report for threshold \(x_0 = 53.0626 \rightarrow E[v]=\)$-212.58

| b | a | |

|---|---|---|

| B (EP-negative) | 0.98659 J=0.9865461 wv=$0.00 | 0.01341 J=0.0134139 wv=$-67.07 |

| A (EP-positive) | 0.35735 J=0.0000143 wv=$-142.94 | 0.64265 J=0.0000257 wv=$-2.57 |

Report for threshold \(x_0 = 53.2626 \rightarrow E[v]=\)$-212.58

| b | a | |

|---|---|---|

| B (EP-negative) | 0.98771 J=0.9876692 wv=$0.00 | 0.01229 J=0.0122908 wv=$-61.45 |

| A (EP-positive) | 0.37153 J=0.0000149 wv=$-148.61 | 0.62847 J=0.0000251 wv=$-2.51 |

So it looks like we’ve found the threshold \( x_0 = 53.0626 \) to maximize expected value of all possible outcomes at \$-212.52. The vast majority (98.72%) of people taking the test don’t incur any cost or trouble beyond that of the test itself.

Still, it’s somewhat unsatisfying to have such a high false negative rate: over 36% of patients who are EP-positive are undetected by our test, and are likely to die. To put this into perspective, consider a million patients who take this test. The expected number of them for each outcome are

- 12842 will be diagnosed with EP but are actually EP-negative (false positive) and require \$5000 in tests to confirm

- 15 will be EP-positive but will not be diagnosed with EP (false negative) and likely to die

- 25 will be EP-positive and correctly diagnosed and incur \$100,000 in treatment

- the rest are EP-negative and correctly diagnosed.

That doesn’t seem fair to those 15 people, just to help reduce the false positive rate.

We could try skewing the test by assigning a value of \$100 million, rather than \$10 million, for the false negative case, because it’s really really bad:

find_threshold(dneg, dpos, 40e-6, [[0,-5000],[-1e8, -1e5]])

[(187.25596232479654, -4000.0000000000005), (48.145823389489138, -813.91495238472135)]

threshold = 48.1458

for x0 in [threshold, threshold-0.1, threshold+0.1]:

C = analyze_binary(dneg, dpos, x0)

display(show_binary_matrix(C, x0, [dneg, dpos], 'ba', 40e-6,[[0,-5000],[-1e8, -1e5]],

special_format={'v':'$%.2f'}))Report for threshold \(x_0 = 48.1458 \rightarrow E[v]=\)$-813.91

| b | a | |

|---|---|---|

| B (EP-negative) | 0.91631 J=0.9162691 wv=$0.00 | 0.08369 J=0.0836909 wv=$-418.45 |

| A (EP-positive) | 0.09796 J=0.0000039 wv=$-391.85 | 0.90204 J=0.0000361 wv=$-3.61 |

Report for threshold \(x_0 = 48.0458 \rightarrow E[v]=\)$-814.23

| b | a | |

|---|---|---|

| B (EP-negative) | 0.91367 J=0.9136317 wv=$0.00 | 0.08633 J=0.0863283 wv=$-431.64 |

| A (EP-positive) | 0.09474 J=0.0000038 wv=$-378.96 | 0.90526 J=0.0000362 wv=$-3.62 |

Report for threshold \(x_0 = 48.2458 \rightarrow E[v]=\)$-814.23

| b | a | |

|---|---|---|

| B (EP-negative) | 0.91888 J=0.9188456 wv=$0.00 | 0.08112 J=0.0811144 wv=$-405.57 |

| A (EP-positive) | 0.10126 J=0.0000041 wv=$-405.06 | 0.89874 J=0.0000359 wv=$-3.59 |

There, by moving the threshold downward by about 5 points, we’ve reduced the false negative rate to just under 10%, and the false positive rate is just over 8%. The expected value has nearly quadrupled, though, to \$-813.91, mostly because the false positive rate has increased, but also because the cost of a false negative is much higher.

Now, for every million people who take the test, we would expect

- around 83700 will have a false positive

- 36 will be correctly diagnosed with EP

- 4 will incorrectly remain undiagnosed and likely die.

Somehow that doesn’t sound very satisfying either. Can we do any better?

Idiot lights

Let’s put aside our dilemma of choosing a diagnosis threshold, for a few minutes, and talk about idiot lights. This term generally refers to indicator lights on automotive instrument panels, and apparently showed up in print around 1960. A July 1961 article by Phil McCafferty in Popular Science, called Let’s Bring Back the Missing Gauges, states the issue succinctly:

Car makers have you and me figured out for idiots — to the tune of about eight million dollars a year. This is the estimated amount that auto makers save by selling us little blink-out bulbs — known to fervent nonbelievers as “idiot lights” — on five million new cars instead of fitting them out with more meaningful gauges.

Not everything about blink-outs is bad. They do give a conspicuous warning when something is seriously amiss. But they don’t tell enough, or tell it soon enough to be wholly reliable. That car buyers aren’t happy is attested by the fact that gauge makers gleefully sell some quarter of a million accessory instruments a year to people who insist on knowing what’s going on under their hoods.

He goes on to say:

There’s little consolation in being told your engine has overheated after it’s done it. With those wonderful old-time gauges, a climbing needle gave you warning before you got into trouble.

The basic blink-out principle is contrary to all rules of safety. Blink-outs do not “fail safe.” The system operates on the assurance that all is well when the lights are off. If a bulb burns out while you’re traveling, you’ve lost your warning system.

Sure, most indicators remain on momentarily during starting, which makes it possible to check them for burnouts. But this has been known to have problems.

Consider the Midwestern husband who carefully lectured his young wife on the importance of watching gauges, then traded in the old car for a new blink-out model. Anxious to try it out, the wife fired it up the minute it arrived without waiting for her husband to come home. Panicked by three glaring lights, she assumed the worst, threw open the hood, and was met by the smell of new engine paint burning. Without hesitation, she popped off the cap and filled the crank-case to the brim—with water.

The big blink-out gripe is that the lights fail to tell the degree of what is taking place. Most oil-pressure blink-outs turn off at about 10 to 15 pounds’ pressure. Yet this is not nearly enough to lubricate an engine at 70 m.p.h.

The generator blink-out, unlike an ammeter, tells only whether or not the generator is producing current, not how much. You can be heading for a dead battery if you are using more current than the generator is producing. A battery can also be ruined by pouring an excessive charge into it, and over-production can kill a generator. Yet in all cases the generator is working, so the light is off.

The lights hide the secrets that a sinking, climbing, or fluctuating needle can reveal. Because of this, blink-outs are one of the greatest things that ever happened to a shady used-car dealer.

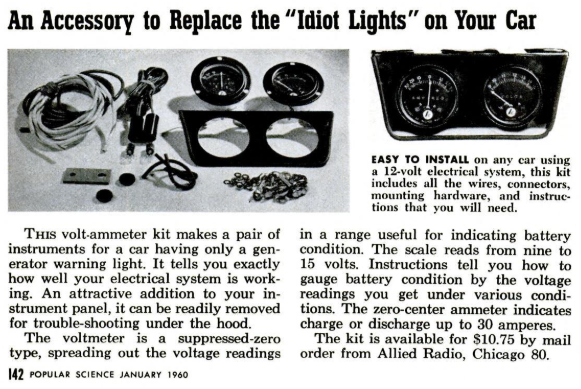

McCafferty seems to have been a bit of a zealot on this topic, publishing a November 1955 article in Popular Science, I Like the Gauges Detroit Left Out, though it doesn’t mention the term “idiot light”. The earliest use of “idiot light” in common media seems to be in the January 1960 issue of Popular Science, covering some automotive accessories like this volt-ammeter kit:

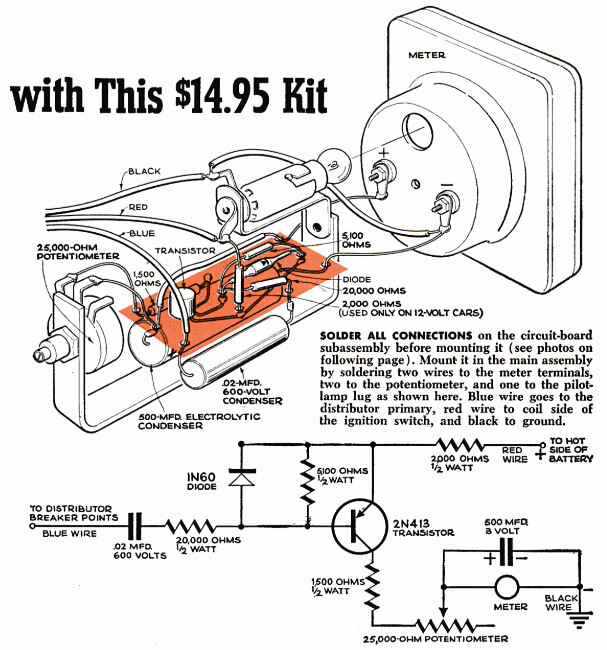

Interestingly enough, on the previous page is an article (TACHOMETER: You Can Assemble One Yourself with This \$14.95 Kit) showing a schematic and installation of a tachometer circuit that gets its input from one of the distributor terminals, and uses a PNP transistor to send a charge pulse to a capacitor and ammeter everytime the car’s distributor sends a high-voltage pulse to the spark plugs:

The earliest use of “idiot light” I could find in any publication is from a 1959 monograph on Standard Cells from the National Bureau of Standards which states

It is realized that the use of good-bad indicators must be approached with caution. Good-bad lights, often disparagingly referred to as idiot lights, are frequently resented by the technician because of the obvious implication. Furthermore, skilled technicians may feel that a good-bad indication does not give them enough information to support their intuitions. However, when all factors are weighed, good-bad indicators appear to best fit the requirements for an indication means that may be interpreted quickly and accurately by a wide variety of personnel whether trained or untrained.

Regardless of the term’s history, we’re stuck with these lights in many cases, and they’re a compromise between two principles:

- idiot lights are an effective and inexpensive method of catching the operator’s attention to one of many possible conditions that can occur, but they hide information by reducing a continuous value to a true/false indication

- a numeric result, shown by a dial-and-needle gauge or a numeric display, can show more useful information than an idiot light, but it is more expensive, doesn’t draw attention as easily as an idiot light, and it requires the operator to interpret the numeric value

¿Por qué no los dos?

If we don’t have an ultra-cost-sensitive system, why not have both? Computerized screens are very common these days, and it’s relatively easy to display both a PASS/FAIL or YES/NO indicator — for drawing attention to a possible problem — and a value that allows the operator to interpret the data.

Since 2008, new cars sold in the United States have been required to have a tire pressure monitoring system (TPMS). As a driver, I both love it and hate it. The TPMS is great for dealing with slow leaks before they become a problem. I carry a small electric tire pump that plugs into the 12V socket in my car, so if the TPMS light comes on, I can pull over, check the tire pressure, and pump up the one that has a leak to its normal range. I’ve had slow leaks that have lasted several weeks before they start getting worse. Or sometimes they’re just low pressure because it’s November or December and the temperature has decreased. What I don’t like is that there’s no numerical gauge. If my TPMS light comes on, I have no way to distinguish a slight decrease in tire pressure (25psi vs. the normal 30psi) vs. a dangerously low pressure (15psi), unless I stop and measure all four tires with a pressure gauge. I have no way to tell how quickly the tire pressure is decreasing, so I can decide whether to keep driving home and deal with it later, or whether to stop at the nearest possible service station. It would be great if my car had an information screen where I could read the tire pressure readings and decide what to do based on having that information.

As far as medical diagnostic tests go, using the extra information from a raw test score can be a more difficult decision, especially in cases where the chances and costs of both false positives and false negatives are high. In the EP example we looked at earlier, we had a 0-to-100 test with a threshold of somewhere in the 55-65 range. Allowing a doctor to use their judgment when interpreting this kind of a test might be a good thing, especially when the doctor can try to make use of other information. But as a patient, how am I to know? If I’m getting tested for EP and I have a 40 reading, my doctor can be very confident that I don’t have EP, whereas with a 75 reading, it’s a no-brainer to start treatment right away. But those numbers near the threshold are tricky.

Triage (¿Por qué no los tres?)

In medicine, the term triage refers to a process of rapid prioritization or categorization in order to determine which patients should be served first. The idea is to try to make the most difference, given limited resources — so patients who are sick or injured, but not in any immediate danger, may have to wait.

As an engineer, my colleagues and I use triage as a way to categorize issues so that we can focus only on the few that are most important. A couple of times a year we’ll go over the unresolved issues in our issue tracking database, to figure out which we’ll address in the near term. One of the things I’ve noticed is that our issues fall into three types:

- Issues which are obviously low priority — these are ones that we can look at in a few seconds and agree, “Oh, yeah, we don’t like that behavior but it’s just a minor annoyance and isn’t going to cause any real trouble.”

- Issues which are obviously high priority — these are ones that we can also look at in a few seconds and agree that we need to address them soon.

- Issues with uncertainty — we look at these and kind of sit and stare for a while, or have arguments within the group, about whether they’re important or not.

The ones in the last category take a lot of time, and slow this process down immensely. I would much rather come to a 30-second consensus of L/H/U (“low priority”/”high priority”/”uncertain”) and get through the whole list, then come back and go through the U issues one by one at a later date.

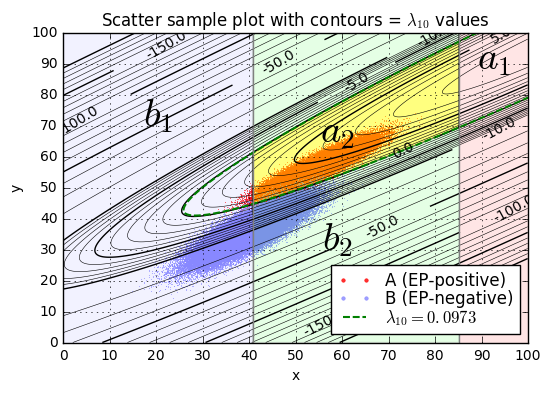

Let’s go back to our EP case, and use the results of our \$1 eyeball-photography test \( T_1 \), but instead of dividing our diagnosis into two outcomes, let’s divide it into three outcomes:

- Patients are diagnosed as EP-positive, with high confidence

- Patients for which the EP test is “ambivalent” and it is not possible to distinguish between EP-positive and EP-negative cases with high confidence

- Patients are diagnosed as EP-negative, with high confidence

We take the same actions in the EP-positive case (admit patient and begin treatment) and the EP-negative case (discharge patient) as before, but now we have this middle ground. What should we do? Well, we can use resources to evaluate the patient more carefully. Perhaps there’s some kind of blood test \( T_2 \), which costs \$100, but improves our ability to distinguish between EP-positive and EP-negative populations. It’s more expensive than the \$1 test, but much less expensive than the \$5000 run of tests we used in false positive cases in our example.

How can we evaluate test \( T_2 \)?

Bivariate normal distributions

Let’s say that \( T_2 \) also has a numeric result \( y \) from 0 to 100, and it has a Gaussian distribution as well, so that tests \( T_1 \) and \( T_2 \) return a pair of values \( (x,y) \) with a bivariate normal distribution, in particular both \( x \) and \( y \) can be described by their mean values \( \mu_x, \mu_y \), standard deviations \( \sigma_x, \sigma_y \) and correlation coefficient \( \rho \), so that the covariance matrix \( \operatorname{cov}(x,y) = \begin{bmatrix}S_{xx} & S_{xy} \cr S_{xy} & S_{yy}\end{bmatrix} \) can be calculated with \( S_{xx} = \sigma_x{}^2, S_{yy} = \sigma_y{}^2, S_{xy} = \rho\sigma_x\sigma_y. \)

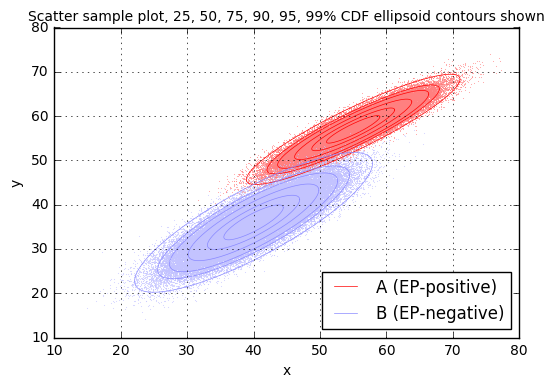

-

When a patient has EP (condition A), the second-order statistics of \( (x,y) \) can be described as

- \( \mu_x = 55, \mu_y = 57 \)

- \( \sigma_x=5.3, \sigma_y=4.1 \)

- \( \rho = 0.91 \)

-

When a patient does not have EP (condition B), the second-order statistics of \( (x,y) \) can be described as

- \( \mu_x = 40, \mu_y = 36 \)

- \( \sigma_x=5.9, \sigma_y=5.2 \)

- \( \rho = 0.84 \)

The covariance matrix may be unfamiliar to you, but it’s not very complicated. (Still, if you don’t like the math, just skip to the graphs below.) Each of the entries of the covariance matrix is merely the expected value of the product of the variables in question after removing the mean, so with a pair of zero-mean random variables \( (x’,y’) \) with \( x’ = x - \mu_x, y’=y-\mu_y \), the covariance matrix is just \( \operatorname{cov}(x’,y’) = \begin{bmatrix}E[x’^2] & E[x’y’] \cr E[x’y’] & E[y’^2] \end{bmatrix} \)

In order to help visualize this, let’s graph the two conditions.

First of all, we need to know how to generate pseudorandom values with these distributions. If we generate two independent Gaussian random variables \( (u,v) \) with zero mean and unit standard deviation, then the covariance matrix is just \( \begin{bmatrix}1 & 0 \cr 0 & 1\end{bmatrix} \). We can create new random variables \( (x,y) \) as a linear combination of \( u \) and \( v \):

$$\begin{aligned}x &= a_1u + b_1v \cr y &= a_2u + b_2v \end{aligned}$$

In this case, \( \operatorname{cov}(x,y)=\begin{bmatrix}E[x^2] & E[xy] \cr E[xy] & E[y^2] \end{bmatrix} = \begin{bmatrix}a_1{}^2 + b_1{}^2 & a_1a_2 + b_1b_2 \cr a_1a_2 + b_1b_2 & a_2{}^2 + b_2{}^2 \end{bmatrix}. \) As an example for computing this, \( E[x^2] = E[(a_1u+b_1v)^2] = a_1{}^2E[u^2] + 2a_1b_1E[uv] + b_1{}^2E[v^2] = a_1{}^2 + b_1{}^2 \) since \( E[u^2]=E[v^2] = 1 \) and \( E[uv]=0 \).

We can choose values \( a_1, a_2, b_1, b_2 \) so that we achieve the desired covariance matrix:

$$\begin{aligned} a_1 &= \sigma_x \cos \theta_x \cr b_1 &= \sigma_x \sin \theta_x \cr a_2 &= \sigma_y \cos \theta_y \cr b_2 &= \sigma_y \sin \theta_y \cr \end{aligned}$$

which yields \( \operatorname{cov}(x,y) = \begin{bmatrix}\sigma_x^2 & \sigma_x\sigma_y\cos(\theta_x -\theta_y) \cr \sigma_x\sigma_y\cos(\theta_x -\theta_y) & \sigma_y^2 \end{bmatrix}, \) and therefore we can choose any \( \theta_x, \theta_y \) such that \( \cos(\theta_x -\theta_y) = \rho. \) In particular, we can always choose \( \theta_x = 0 \) and \( \theta_y = \cos^{-1}\rho \), so that

$$\begin{aligned} a_1 &= \sigma_x \cr b_1 &= 0 \cr a_2 &= \sigma_y \rho \cr b_2 &= \sigma_y \sqrt{1-\rho^2} \cr \end{aligned}$$

and therefore

$$\begin{aligned} x &= \mu_x + \sigma_x u \cr y &= \mu_y + \rho\sigma_y u + \sqrt{1-\rho^2}\sigma_y v \end{aligned}$$

is a possible method of constructing \( (x,y) \) from independent unit Gaussian random variables \( (u,v). \) (For the mean values, we just added them in at the end.)

OK, so let’s use this to generate samples from the two conditions A and B, and graph them:

from scipy.stats import chi2

import matplotlib.colors

colorconv = matplotlib.colors.ColorConverter()

Coordinate2D = namedtuple('Coordinate2D','x y')

class Gaussian2D(namedtuple('Gaussian','mu_x mu_y sigma_x sigma_y rho name id color')):

@property

def mu(self):

""" mean """

return Coordinate2D(self.mu_x, self.mu_y)

def cov(self):

""" covariance matrix """

crossterm = self.rho*self.sigma_x*self.sigma_y

return np.array([[self.sigma_x**2, crossterm],

[crossterm, self.sigma_y**2]])

def sample(self, N, r=np.random):

""" generate N random samples """

u = r.randn(N)

v = r.randn(N)

return self._transform(u,v)

def _transform(self, u, v):

""" transform from IID (u,v) to (x,y) with this distribution """

rhoc = np.sqrt(1-self.rho**2)

x = self.mu_x + self.sigma_x*u

y = self.mu_y + self.sigma_y*self.rho*u + self.sigma_y*rhoc*v

return x,y

def uv2xy(self, u, v):

return self._transform(u,v)

def xy2uv(self, x, y):

rhoc = np.sqrt(1-self.rho**2)

u = (x-self.mu_x)/self.sigma_x

v = ((y-self.mu_y) - self.sigma_y*self.rho*u)/rhoc/self.sigma_y

return u,v

def contour(self, c, npoint=360):

"""

generate elliptical contours

enclosing a fraction c of the population

(c can be a vector)

R^2 is a chi-squared distribution with 2 degrees of freedom:

https://en.wikipedia.org/wiki/Chi-squared_distribution

"""

r = np.sqrt(chi2.ppf(c,2))

if np.size(c) > 1 and len(np.shape(c)) == 1:

r = np.atleast_2d(r).T

th = np.arange(npoint)*2*np.pi/npoint

return self._transform(r*np.cos(th), r*np.sin(th))

def pdf_exponent(self, x, y):

xdelta = x - self.mu_x

ydelta = y - self.mu_y

return -0.5/(1-self.rho**2)*(

xdelta**2/self.sigma_x**2

- 2.0*self.rho*xdelta*ydelta/self.sigma_x/self.sigma_y

+ ydelta**2/self.sigma_y**2

)

@property

def pdf_scale(self):

return 1.0/2/np.pi/np.sqrt(1-self.rho**2)/self.sigma_x/self.sigma_y

def pdf(self, x, y):

""" probability density function """

q = self.pdf_exponent(x,y)

return self.pdf_scale * np.exp(q)

def logpdf(self, x, y):

return np.log(self.pdf_scale) + self.pdf_exponent(x,y)

@property

def logpdf_coefficients(self):

"""

returns a vector (a,b,c,d,e,f)

such that log(pdf(x,y)) = ax^2 + bxy + cy^2 + dx + ey + f

"""

f0 = np.log(self.pdf_scale)

r = -0.5/(1-self.rho**2)

a = r/self.sigma_x**2

b = r*(-2.0*self.rho/self.sigma_x/self.sigma_y)

c = r/self.sigma_y**2

d = -2.0*a*self.mu_x -b*self.mu_y

e = -2.0*c*self.mu_y -b*self.mu_x

f = f0 + a*self.mu_x**2 + c*self.mu_y**2 + b*self.mu_x*self.mu_y

return np.array([a,b,c,d,e,f])

def project(self, axis):

"""

Returns a 1-D distribution on the specified axis

"""

if isinstance(axis, basestring):

if axis == 'x':

mu = self.mu_x

sigma = self.sigma_x

elif axis == 'y':

mu = self.mu_y

sigma = self.sigma_y

else:

raise ValueError('axis must be x or y')

else:

# assume linear combination of x,y

a,b = axis

mu = a*self.mu_x + b*self.mu_y

sigma = np.sqrt((a*self.sigma_x)**2

+(b*self.sigma_y)**2

+2*a*b*self.rho*self.sigma_x*self.sigma_y)

return Gaussian1D(mu,sigma,self.name,self.id,self.color)

def slice(self, x=None, y=None):

"""

Returns information (w, mu, sigma) on the probability distribution

with x or y constrained:

w: probability density across the entire slice

mu: mean value of the pdf within the slice

sigma: standard deviation of the pdf within the slice

"""

if x is None and y is None:

raise ValueError("At least one of x or y must be a value")

rhoc = np.sqrt(1-self.rho**2)

if y is None:

w = scipy.stats.norm.pdf(x, self.mu_x, self.sigma_x)

mu = self.mu_y + self.rho*self.sigma_y/self.sigma_x*(x-self.mu_x)

sigma = self.sigma_y*rhoc

else:

# x is None

w = scipy.stats.norm.pdf(y, self.mu_y, self.sigma_y)

mu = self.mu_x + self.rho*self.sigma_x/self.sigma_y*(y-self.mu_y)

sigma = self.sigma_x*rhoc

return w, mu, sigma

def slicefunc(self, which):

rhoc = np.sqrt(1-self.rho**2)

if which == 'x':

sigma = self.sigma_y*rhoc

a = self.rho*self.sigma_y/self.sigma_x

def f(x):

w = scipy.stats.norm.pdf(x, self.mu_x, self.sigma_x)

mu = self.mu_y + a*(x-self.mu_x)

return w,mu,sigma

elif which == 'y':

sigma = self.sigma_x*rhoc

a = self.rho*self.sigma_x/self.sigma_y

def f(y):

w = scipy.stats.norm.pdf(y, self.mu_y, self.sigma_y)

mu = self.mu_x + a*(y-self.mu_y)

return w,mu,sigma

else:

raise ValueError("'which' must be x or y")

return f

DETERMINISTIC_SEED = 123

np.random.seed(DETERMINISTIC_SEED)

N = 100000

distA = Gaussian2D(mu_x=55,mu_y=57,sigma_x=5.3,sigma_y=4.1,rho=0.91,name='A (EP-positive)',id='A',color='red')

distB = Gaussian2D(mu_x=40,mu_y=36,sigma_x=5.9,sigma_y=5.2,rho=0.84,name='B (EP-negative)',id='B',color='#8888ff')

xA,yA = distA.sample(N)

xB,yB = distB.sample(N)

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

def scatter_samples(ax,xyd_list,contour_list=(),**kwargs):

Kmute = 1 if not contour_list else 0.5

for x,y,dist in xyd_list:

mutedcolor = colorconv.to_rgb(dist.color)

mutedcolor = [c*Kmute+(1-Kmute) for c in mutedcolor]

if not contour_list:

kwargs['label']=dist.name

ax.plot(x,y,'.',color=mutedcolor,alpha=0.8,markersize=0.5,**kwargs)

for x,y,dist in xyd_list:

# Now draw contours for certain probabilities

th = np.arange(1200)/1200.0*2*np.pi

u = np.cos(th)

v = np.sin(th)

first = True

for p in contour_list:

cx,cy = dist.contour(p)

kwargs = {}

if first:

kwargs['label']=dist.name

first = False

ax.plot(cx,cy,color=dist.color,linewidth=0.5,**kwargs)

ax.set_xlabel('x')

ax.set_ylabel('y')

ax.legend(loc='lower right',markerscale=10)

ax.grid(True)

title = 'Scatter sample plot'

if contour_list:

title += (', %s%% CDF ellipsoid contours shown'

% (', '.join('%.0f' % (p*100) for p in contour_list)))

ax.set_title(title, fontsize=10)

scatter_samples(ax,[(xA,yA,distA),

(xB,yB,distB)],

[0.25,0.50,0.75,0.90,0.95,0.99])

for x,y,desc in [(xA, yA,'A'),(xB,yB,'B')]:

print "Covariance matrix for case %s:" % desc

C = np.cov(x,y)

print C

sx = np.sqrt(C[0,0])

sy = np.sqrt(C[1,1])

rho = C[0,1]/sx/sy

print "sample sigma_x = %.3f" % sx

print "sample sigma_y = %.3f" % sy

print "sample rho = %.3f" % rhoCovariance matrix for case A: [[ 28.06613839 19.74199382] [ 19.74199382 16.76597717]] sample sigma_x = 5.298 sample sigma_y = 4.095 sample rho = 0.910 Covariance matrix for case B: [[ 34.6168817 25.69386711] [ 25.69386711 27.05651845]] sample sigma_x = 5.884 sample sigma_y = 5.202 sample rho = 0.840

It may seem strange, but having the results of two tests (\( x \) from test \( T_1 \) and \( y \) from test \( T_2 \)) gives more useful information than the result of each test considered on its own. We’ll come back to this idea a little bit later.

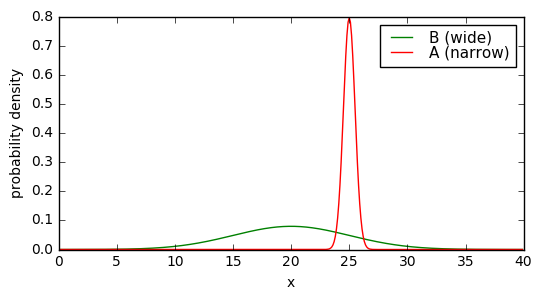

The more immediate question is: given the pair of results \( (x,y) \), how would we decide whether the patient has \( EP \) or not? With just test \( T_1 \) we could merely declare an EP-positive diagnosis if \( x > x_0 \) for some threshold \( x_0 \). With two variables, some kind of inequality is involved, but how do we decide?

Bayes’ Rule

We are greatly indebted to the various European heads of state and religion during much of the 18th century (the Age of Enlightenment) for merely leaving people alone. (OK, this wasn’t universally true, but many of the monarchies turned a blind eye towards intellectualism.) This lack of interference and oppression resulted in numerous mathematical and scientific discoveries, one of which was Bayes’ Rule, named after Thomas Bayes, a British clergyman and mathematician. Bayes’ Rule was published posthumously in An Essay towards solving a Problem in the Doctrine of Chances, and later inflicted on throngs of undergraduate students of probability and statistics.

The basic idea involves conditional probabilities and reminds me of the logical converse. As a hypothetical example, suppose we know that 95% of Dairy Queen customers are from the United States and that 45% of those US residents who visit Dairy Queen like peppermint ice cream, whereas 72% of non-US residents like peppermint ice cream. We are in line to get some ice cream, and we notice that the person in front of us orders peppermint ice cream. Can we make any prediction of the probability that this person is from the US?

Bayes’ Rule relates these two conditions. Let \( A \) represent the condition that a Dairy Queen customer is a US resident, and \( B \) represent that they like peppermint ice cream. Then \( P(A\ |\ B) = \frac{P(B\ |\ A)P(A)}{P(B)} \), which is really just an algebraic rearrangement of the expression of the joint probability that \( A \) and \( B \) are both true: \( P(AB) = P(A\ |\ B)P(B) = P(B\ |\ A)P(A) \). Applied to our Dairy Queen example, we have \( P(A) = 0.95 \) (95% of Dairy Queen customers are from the US) and \( P(B\ |\ A) = 0.45 \) (45% of customers like peppermint ice cream, given that they are from the US). But what is \( P(B) \), the probability that a Dairy Queen customer likes peppermint ice cream? Well, it’s the sum of the all the constituent joint probabilities where the customer likes peppermint ice cream. For example, \( P(AB) = P(B\ |\ A)P(A) = 0.45 \times 0.95 = 0.4275 \) is the joint probability that a Dairy Queen customer is from the US and likes peppermint ice cream, and \( P(\bar{A}B) = P(B\ |\ \bar{A})P(\bar{A}) = 0.72 \times 0.05 = 0.036 \) is the joint probability that a Dairy Queen customer is not from the US and likes peppermint ice cream. Then \( P(B) = P(AB) + P(\bar{A}B) = 0.4275 + 0.036 = 0.4635 \). (46.35% of all Dairy Queen customers like peppermint ice cream.) The final application of Bayes’ Rule tells us

$$P(A\ |\ B) = \frac{P(B\ |\ A)P(A)}{P(B)} = \frac{0.45 \times 0.95}{0.4635} \approx 0.9223$$

and therefore if we see someone order peppermint ice cream at Dairy Queen, there is a whopping 92.23% chance they are not from the US.

Let’s go back to our embolary pulmonism scenario where a person takes both tests \( T_1 \) and \( T_2 \), with results \( R = (x=45, y=52) \). Can we estimate the probability that this person has EP?

N = 500000

np.random.seed(DETERMINISTIC_SEED)

xA,yA = distA.sample(N)

xB,yB = distB.sample(N)

fig=plt.figure()

ax=fig.add_subplot(1,1,1)

scatter_samples(ax,[(xA,yA,distA),

(xB,yB,distB)])

ax.plot(45,52,'.k');

We certainly aren’t going to be able to find the answer exactly just from looking at this chart, but it looks like an almost certain case of A being true — that is, \( R: x=45, y=52 \) implies that the patient probably has EP. Let’s figure it out as

$$P(A\ |\ R) = \frac{P(R\ |\ A)P(A)}{P(R)}.$$

Remember we said earlier that the base rate, which is the probability of any given person presenting symptoms having EP before any testing, is \( P(A) = 40\times 10^{-6} \). (This is known as the a priori probability, whenever this Bayesian stuff gets involved.) The other two probabilities \( P(R\ |\ A) \) and \( P(R) \) are technically infinitesimal, because they are part of continuous probability distributions, but we can handwave and say that \( R \) is really the condition that the results are \( 45 \le x \le 45 + dx \) and \( 52 \le y \le 52+dy \) for some infinitesimal interval widths \( dx, dy \), in which case \( P(R\ |\ A) = p_A(R)\,dx\,dy \) and \( P(R) = P(R\ |\ A)P(A) + P(R\ |\ B)P(B) = p_A(R)P(A)\,dx\,dy + p_B(R)P(B)\,dx\,dy \) where \( p_A \) and \( p_B \) are the probability density functions. Substituting this all in we get

$$P(A\ |\ R) = \frac{p_A(R)P(A)}{p_A(R)P(A)+p_B(R)P(B)}$$

The form of the bivariate normal distribution is not too complicated, just a bit unwieldy:

$$p(x,y) = \frac{1}{2\pi\sqrt{1-\rho^2}\sigma_x\sigma_y}e^{q(x,y)}$$

with

$$q(x,y) = -\frac{1}{2(1-\rho^2)}\left(\frac{(x-\mu_x)^2}{\sigma_x{}^2}-2\rho\frac{(x-\mu_x)(y-\mu_y)}{\sigma_x\sigma_y}+\frac{(y-\mu_y)^2}{\sigma_y{}^2}\right)$$

and the rest is just number crunching:

x1=45

y1=52

PA_total = 40e-6

pA = distA.pdf(x1, y1)

pB = distB.pdf(x1, y1)

print "pA(%.1f,%.1f) = %.5g" % (x1,y1,pA)

print "pB(%.1f,%.1f) = %.5g" % (x1,y1,pB)

print ("Bayes' rule result: p(A | x=%.1f, y=%.1f) = %.5g" %

(x1,y1,pA*PA_total/(pA*PA_total+pB*(1-PA_total))))pA(45.0,52.0) = 0.0014503 pB(45.0,52.0) = 4.9983e-07 Bayes' rule result: p(A | x=45.0, y=52.0) = 0.104

Wow, that’s counterintuitive. This result value \( R \) lies much closer to the probability “cloud” of A = EP-positive than B = EP-negative, but Bayes’ Rule tells us there’s only about a 10.4% probability that a patient with test results \( (x=45, y=52) \) has EP. And it’s because of the very low incidence of EP.

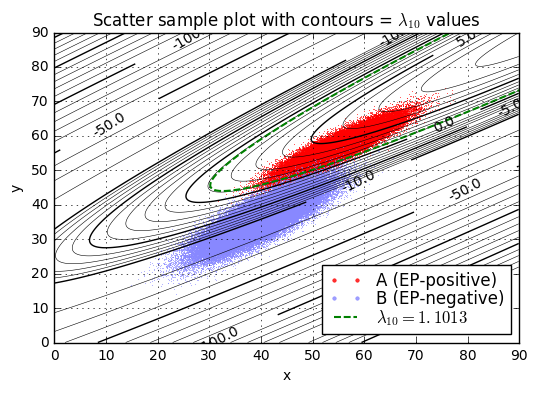

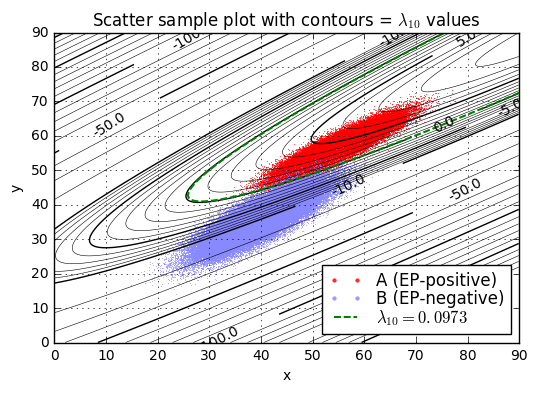

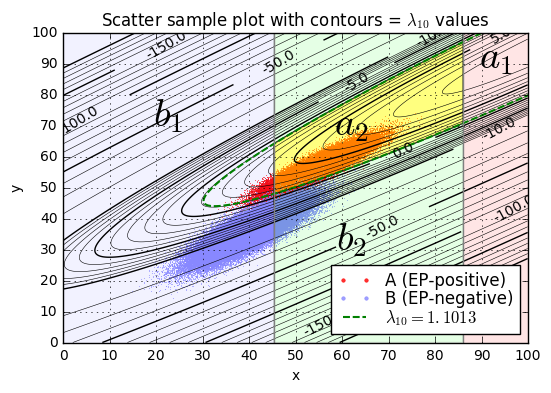

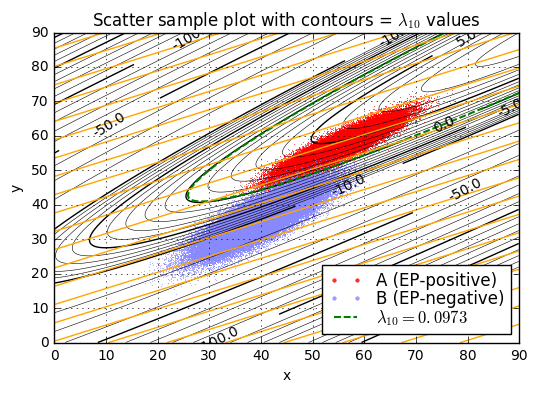

There is something we can do to make reading the graph useful, and that’s to plot a parameter I’m going to call \( \lambda(x,y) \), which is the logarithm of the ratio of probability densities:

$$\lambda(x,y) = \ln \frac{p_A(x,y)}{p_B(x,y)} = \ln p_A(x,y) - \ln p_B(x,y)$$

Actually, we’ll plot \( \lambda_{10}(x,y) = \lambda(x,y) / \ln 10 = \log_{10} \frac{p_A(x,y)}{p_B(x,y)} \).

This parameter is useful because Bayes’ rule calculates

$$\begin{aligned} P(A\ |\ x,y) &= \frac{p_A(x,y) P_A} { p_A(x,y) P_A + p_B(x,y) P_B} \cr &= \frac{p_A(x,y)/p_B(x,y) P_A} {p_A(x,y)/p_B(x,y) P_A + P_B} \cr &= \frac{e^{\lambda(x,y)} P_A} {e^{\lambda(x,y)} P_A + P_B} \cr &= \frac{1}{1 + e^{-\lambda(x,y)}P_B / P_A} \end{aligned}$$

and for any desired \( P(A\ |\ x,y) \) we can figure out the equivalent value of \( \lambda(x,y) = - \ln \left(\frac{P_A}{P_B}((1/P(A\ |\ x,y) - 1)\right). \)

This means that for a fixed value of \( \lambda \), then \( P(A\ |\ \lambda) = \frac{1}{1 + e^{-\lambda}P_B / P_A}. \)

For bivariate Gaussian distributions, the \( \lambda \) parameter is also useful because it is a quadratic function of \( x \) and \( y \), so curves of constant \( \lambda \) are conic sections (lines, ellipses, hyperbolas, or parabolas).

def jcontourp(ax,x,y,z,levels,majorfunc,color=None,fmt=None, **kwargs):

linewidths = [1 if majorfunc(l) else 0.4 for l in levels]

cs = ax.contour(x,y,z,levels,

linewidths=linewidths,

linestyles='-',

colors=color,**kwargs)

labeled = [l for l in cs.levels if majorfunc(l)]

ax.clabel(cs, labeled, inline=True, fmt='%s', fontsize=10)

xv = np.arange(10,80.01,0.1)

yv = np.arange(10,80.01,0.1)

x,y = np.meshgrid(xv,yv)

def lambda10(distA,distB,x,y):

return (distA.logpdf(x, y)-distB.logpdf(x, y))/np.log(10)

fig = plt.figure()

ax = fig.add_subplot(1,1,1)

scatter_samples(ax,[(xA,yA,distA),

(xB,yB,distB)],

zorder=-1)

ax.plot(x1,y1,'.k')

print "lambda10(x=%.1f,y=%.1f) = %.2f" % (x1,y1,lambda10(distA,distB,x1,y1))

levels = np.union1d(np.arange(-10,10), np.arange(-200,100,10))

def levelmajorfunc(level):

if -10 <= level <= 10:

return int(level) % 5 == 0

else:

return int(level) % 25 == 0

jcontourp(ax,x,y,lambda10(distA,distB,x,y),

levels,

levelmajorfunc,

color='black')

ax.set_xlim(xv.min(), xv.max()+0.001)

ax.set_ylim(yv.min(), yv.max()+0.001)

ax.set_title('Scatter sample plot with contours = $\lambda_{10}$ values');lambda10(x=45.0,y=52.0) = 3.46

We’ll pick one particular \( \lambda_{10} \) value as a threshold \( L_{10} = L/ \ln 10 \), and if \( \lambda_{10} > L_{10} \) then we’ll declare condition \( a \) (the patient is diagnosed as EP-positive), otherwise we’ll declare condition \( b \) (the patient is diagnosed as EP-negative). The best choice of \( L_{10} \) is the one that maximizes expected value.

Remember how we did this in the case with only the one test \( T_1 \) with result \( x \): we chose threshold \( x_0 \) based on the point where \( {\partial E \over \partial x_0} = 0 \); in other words, a change in diagnosis did not change the expected value at this point. We can do the same thing here:

$$0 = {\partial E[v] \over \partial {L}} = P(A\ |\ \lambda=L)(v_{Ab}-v_{Aa}) + P(B\ |\ \lambda=L)(v_{Bb}-v_{Ba})$$

With our earlier definitions

$$\rho_v = -\frac{v_{Bb}-v_{Ba}}{v_{Ab}-v_{Aa}}, \qquad \rho_p = P_B / P_A$$

then the equation for \( L \) becomes \( 0 = P(A\ |\ \lambda=L) - \rho_v P(B\ |\ \lambda=L) = P(A\ |\ \lambda=L) - \rho_v (1-P(A\ |\ \lambda=L)) \), which simplifies to

$$P(A\ |\ \lambda=L) = \frac{\rho_v}{\rho_v+1} = \frac{1}{1+1/\rho_v} .$$

But we already defined \( \lambda \) as the group of points \( (x,y) \) that have equal probability \( P(A\ |\ \lambda) = \frac{1}{1 + e^{-\lambda}P_B / P_A} = \frac{1}{1 + \rho_p e^{-\lambda}} \) so

$$\frac{1}{1+1/\rho_v} = \frac{1}{1 + \rho_p e^{-L}}$$

which occurs when \( L = \ln \rho_v \rho_p. \)

In our EP example,

$$\begin{aligned} \rho_p &= p_B/p_A = 0.99996 / 0.00004 = 24999 \cr \rho_v &= -\frac{v_{Bb}-v_{Ba}}{v_{Ab}-v_{Aa}} = -5000 / -9.9\times 10^6 \approx 0.00050505 \cr \rho_v\rho_p &\approx 12.6258 \cr L &= \ln \rho_v\rho_p \approx 2.5357 \cr L_{10} &= \log_{10}\rho_v\rho_p \approx 1.1013 \end{aligned}$$

and we can complete the analysis empirically by looking at the fraction of pseudorandomly-generated sample points where \( \lambda_{10} < L_{10} \); this is an example of Monte Carlo analysis.

class Quadratic1D(namedtuple('Quadratic1D','a b c')):

"""

Q(x) = a*x*x + b*x + c

"""

__slots__ = ()

@property

def x0(self):

return -self.b/(2.0*self.a)

@property

def q0(self):

return self.c - self.a*self.x0**2

def __call__(self,x):

return self.a*x*x + self.b*x + self.c

def solve(self, q):

D = self.b*self.b - 4*self.a*(self.c-q)

sqrtD = np.sqrt(D)

return np.array([-self.b-sqrtD, -self.b+sqrtD])/(2*self.a)

class QuadraticLissajous(namedtuple('QuadraticLissajous','x0 y0 Rx Ry phi')):

"""

A parametric curve as a function of theta:

x = x0 + Rx * cos(theta)

y = y0 + Ry * sin(theta+phi)

"""

__slots__ = ()

def __call__(self, theta):

return (self.x0 + self.Rx * np.cos(theta),

self.y0 + self.Ry * np.sin(theta+self.phi))

class Quadratic2D(namedtuple('Quadratic2D','a b c d e f')):

"""

Bivariate quadratic function

Q(x,y) = a*x*x + b*x*y + c*y*y + d*x + e*y + f

= a*(x-x0)*(x-x0) + b*(x-x0)*(y-y0) + c*(y-y0)*(y-y0) + q0

= s*(u*u + v*v) + q0 where s = +/-1

https://en.wikipedia.org/wiki/Quadratic_function#Bivariate_(two_variable)_quadratic_function

(Warning: this implementation assumes convexity,

that is, b*b < 4*a*c, so hyperboloids/paraboloids are not handled.)

"""

__slots__ = ()

@property

def discriminant(self):

return self.b**2 - 4*self.a*self.c

@property

def x0(self):

return (2*self.c*self.d - self.b*self.e)/self.discriminant

@property

def y0(self):

return (2*self.a*self.e - self.b*self.d)/self.discriminant

@property

def q0(self):

x0 = self.x0

y0 = self.y0

return self.f - self.a*x0*x0 - self.b*x0*y0 - self.c*y0*y0

def _Kcomponents(self):

s = 1 if self.a > 0 else -1

r = s*self.b/2.0/np.sqrt(self.a*self.c)

rc = np.sqrt(1-r*r)

Kux = rc*np.sqrt(self.a*s)

Kvx = r*np.sqrt(self.a*s)

Kvy = np.sqrt(self.c*s)

return Kux, Kvx, Kvy

@property

def Kxy2uv(self):

Kux, Kvx, Kvy = self._Kcomponents()

return np.array([[Kux, 0],[Kvx, Kvy]])

@property

def Kuv2xy(self):

Kux, Kvx, Kvy = self._Kcomponents()

return np.array([[1.0/Kux, 0],[-1.0*Kvx/Kux/Kvy, 1.0/Kvy]])

@property

def transform_xy2uv(self):

Kxy2uv = self.Kxy2uv

x0 = self.x0

y0 = self.y0

def transform(x,y):

return np.dot(Kxy2uv, [x-x0,y-y0])

return transform

@property

def transform_uv2xy(self):

Kuv2xy = self.Kuv2xy

x0 = self.x0

y0 = self.y0

def transform(u,v):

return np.dot(Kuv2xy, [u,v]) + [[x0],[y0]]

return transform

def uv_radius(self, q):

"""

Returns R such that solutions (u,v) of Q(x,y) = q

lie within the range [-R, R],

or None if there are no solutions.

"""

s = 1 if self.a > 0 else -1

D = (q-self.q0)*s

return np.sqrt(D) if D >= 0 else None

def _xy_radius_helper(self, q, z):

D = (self.q0 - q) * 4 * z / self.discriminant

if D < 0:

return None

else:

return np.sqrt(D)

def x_radius(self, q):

"""

Returns Rx such that solutions (x,y) of Q(x,y) = q

lie within the range [x0-Rx, x0+Rx],

or None if there are no solutions.

Equal to uv_radius(q)/Kux, but we can solve more directly

"""

return self._xy_radius_helper(q, self.c)

def y_radius(self, q):

"""

Returns Rx such that solutions (x,y) of Q(x,y) = q

lie within the range [x0-Rx, x0+Rx],

or None if there are no solutions.

Equal to uv_radius(q)/Kux, but we can solve more directly

"""

return self._xy_radius_helper(q, self.a)

def lissajous(self, q):

"""

Returns a QuadraticLissajous with x0, y0, Rx, Ry, phi such that

the solutions (x,y) of Q(x,y) = q

can be written:

x = x0 + Rx * cos(theta)

y = y0 + Ry * sin(theta+phi)

Rx and Ry and phi may each return None if no such solution exists.

"""

D = self.discriminant

x0 = (2*self.c*self.d - self.b*self.e)/D

y0 = (2*self.a*self.e - self.b*self.d)/D

q0 = self.f - self.a*x0*x0 - self.b*x0*y0 - self.c*y0*y0

Dx = 4 * (q0-q) * self.c / D

Rx = None if Dx < 0 else np.sqrt(Dx)

Dy = 4 * (q0-q) * self.a / D

Ry = None if Dy < 0 else np.sqrt(Dy)

phi = None if D > 0 else np.arcsin(self.b / (2*np.sqrt(self.a*self.c)))

return QuadraticLissajous(x0,y0,Rx,Ry,phi)

def contour(self, q, npoints=360):

"""

Returns a pair of arrays x,y such that

Q(x,y) = q

"""

s = 1 if self.a > 0 else -1

R = np.sqrt((q-self.q0)*s)

th = np.arange(npoints)*2*np.pi/npoints

u = R*np.cos(th)

v = R*np.sin(th)

return self.transform_uv2xy(u,v)

def constrain(self, x=None, y=None):

if x is None and y is None:

return self

if x is None:

# return a function in x

return Quadratic1D(self.a,

self.d + y*self.b,

self.f + y*self.e + y*y*self.c)

if y is None:

# return a function in y

return Quadratic1D(self.c,

self.e + x*self.b,

self.f + x*self.d + x*x*self.a)

return self(x,y)

def __call__(self, x, y):

return (self.a*x*x

+self.b*x*y

+self.c*y*y

+self.d*x

+self.e*y

+self.f)

def decide_limits(*args, **kwargs):

s = kwargs.get('s', 6)

xmin = None

xmax = None

for xbatch in args:

xminb = min(xbatch)

xmaxb = max(xbatch)

mu = np.mean(xbatch)

std = np.std(xbatch)

xminb = min(mu-s*std, xminb)

xmaxb = max(mu+s*std, xmaxb)

if xmin is None:

xmin = xminb

xmax = xmaxb

else:

xmin = min(xmin,xminb)

xmax = max(xmax,xmaxb)

# Quantization

q = kwargs.get('q')

if q is not None:

xmin = np.floor(xmin/q)*q

xmax = np.ceil(xmax/q)*q

return xmin, xmax

def separation_plot(xydistA, xydistB, Q, L, ax=None, xlim=None, ylim=None):

L10 = L/np.log(10)

if ax is None:

fig = plt.figure()

ax = fig.add_subplot(1,1,1)

xA,yA,distA = xydistA

xB,yB,distB = xydistB

scatter_samples(ax,[(xA,yA,distA),

(xB,yB,distB)],

zorder=-1)

xc,yc = Q.contour(L)

ax.plot(xc,yc,

color='green', linewidth=1.5, dashes=[5,2],

label = '$\\lambda_{10} = %.4f$' % L10)

ax.legend(loc='lower right',markerscale=10,

labelspacing=0,fontsize=12)

if xlim is None:

xlim = decide_limits(xA,xB,s=6,q=10)

if ylim is None:

ylim = decide_limits(yA,yB,s=6,q=10)

xv = np.arange(xlim[0],xlim[1],0.1)

yv = np.arange(ylim[0],ylim[1],0.1)

x,y = np.meshgrid(xv,yv)

jcontourp(ax,x,y,lambda10(distA,distB,x,y),

levels,

levelmajorfunc,

color='black')

ax.set_xlim(xlim)

ax.set_ylim(ylim)

ax.set_title('Scatter sample plot with contours = $\lambda_{10}$ values')

def separation_report(xydistA, xydistB, Q, L):

L10 = L/np.log(10)

for x,y,dist in [xydistA, xydistB]:

print "Separation of samples in %s by L10=%.4f" % (dist.id,L10)

lam = Q(x,y)

lam10 = lam/np.log(10)

print " Range of lambda10: %.4f to %.4f" % (np.min(lam10), np.max(lam10))

n = np.size(lam)

p = np.count_nonzero(lam < L) * 1.0 / n

print " lambda10 < L10: %.5f" % p

print " lambda10 >= L10: %.5f" % (1-p)

L = np.log(5000 / 9.9e6 * 24999)

C = distA.logpdf_coefficients - distB.logpdf_coefficients

Q = Quadratic2D(*C)

separation_plot((xA,yA,distA),(xB,yB,distB), Q, L)

separation_report((xA,yA,distA),(xB,yB,distB), Q, L)Separation of samples in A by L10=1.1013 Range of lambda10: -3.4263 to 8.1128 lambda10 < L10: 0.01760 lambda10 >= L10: 0.98240 Separation of samples in B by L10=1.1013 Range of lambda10: -36.8975 to 4.0556 lambda10 < L10: 0.99834 lambda10 >= L10: 0.00166

Or we can determine the results by numerical integration of probability density.

The math below isn’t difficult, just tedious; for each of the two Gaussian distributions for A and B, I selected a series of 5000 intervals (10001 x-axis points) from \( \mu_x-8\sigma_x \) to \( \mu_x + 8\sigma_x \), and used Simpson’s Rule to integrate the probability density \( f_x(x_i) \) at each point \( x_i \), given that

$$x \approx x_i \quad\Rightarrow\quad f_x(x) \approx f_x(x_i) = p_x(x_i) \left(F_{x_i}(y_{i2}) - F_{x_i}(y_{i1})\right)$$

where

- \( p_x(x_i) \) is the probability density that \( x=x_i \) with \( y \) unspecified

- \( F_{x_i}(y_0) \) is the 1-D cumulative distribution function, given \( x=x_i \), that \( y<y_0 \)

- \( y_{i1} \) and \( y_{i2} \) are either

- the two solutions of \( \lambda(x_i,y) = L \)

- or zero, if there are no solutions or one solution

and the sample points \( x_i \) are placed more closely together nearer to the extremes of the contour \( \lambda(x,y)=L \) to capture the suddenness of the change.

Anyway, here’s the result, which is nicely consistent with the Monte Carlo analysis:

def assert_ordered(*args, **kwargs):

if len(args) < 2:

return

rthresh = kwargs.get('rthresh',1e-10)

label = kwargs.get('label',None)

label = '' if label is None else label+': '

xmin = args[0]

xmax = args[-1]

if len(args) == 2:

# not very interesting case

xthresh = rthresh*(xmax+xmin)/2.0

else:

xthresh = rthresh*(xmax-xmin)

xprev = xmin

for x in args[1:]:

assert x - xprev >= -xthresh, "%s%s > %s + %g" % (label,xprev,x,xthresh)

xprev = x

def arccos_sat(x):

if x <= -1:

return np.pi

if x >= 1:

return 0

return np.arccos(x)

def simpsons_rule_points(xlist, bisect=True):

"""

Generator for Simpson's rule

xlist: arbitrary points in increasing order

bisect: whether or not to add bisection points

returns a generator of weights w (if bisect=False)

or tuples (w,x) (if bisect = True)

such that the integral of f(x) dx over the list of points xlist

is approximately equal to:

sum(w*f(x) for w,x in simpsons_rule_points(xlist))

The values of x returned are x[i], (x[i]+x[i+1])/2, x[i+1]

with relative weights dx/6, 4*dx/6, dx/6 for each interval

[x[i], x[i+1]]

"""

xiter = iter(xlist)

xprev = xiter.next()

w2 = 0

x2 = None

if bisect:

for x2 in xiter:

x0 = xprev

dx = x2-x0

x1 = x0 + dx/2.0

xprev = x2

w6 = dx/6.0

w0 = w2 + w6

yield (w0, x0)

w1 = 4*w6

yield (w1, x1)

w2 = w6

if x2 is not None:

yield (w2, x2)

else:

for x1 in xiter:

x0 = xprev

try:

x2 = xiter.next()

except StopIteration:

raise ValueError("Must have an odd number of points")

dx = x2-x0

xprev = x2

w6 = dx/6.0

w0 = w2 + w6

yield w0

w1 = 4*w6

yield w1

w2 = w6

if x2 is not None:

yield w2

def estimate_separation_numerical(dist, Q, L, xmin, xmax, Nintervals=5000,

return_pair=False, sampling_points=None):

"""

Numerical estimate of each row of confusion matrix

using N integration intervals (each bisected to use Simpson's Rule)

covering the interval [xmin,xmax].

dist: Gaussian2D distribution

Q: Quadratic2D function Q(x,y)

L: lambda threshold:

diagnose as A when Q(x,y) > L,

otherwise as B

xmin: start x-coordinate

xmax: end x-coordinate

Nintervals: number of integration intervals

Returns p, where p is the probability of Q(x,y) > L for that

distribution.

If return_pair is true, returns [p,q];

if [x1,x2] covers most of the distribution, then p+q should be close to 1.

"""

Qliss = Q.lissajous(L)

xqr = Qliss.Rx

xqmu = Qliss.x0

# Determine range of solutions:

# no solutions if xqr is None,

# otherwise Q(x,y) > L if x in Rx = [xqmu-xqr, xqmu+xqr]

#

# Cover the trivial cases first:

if xqr is None:

return (0,1) if return_pair else 0

if (xmax < xqmu - xqr) or (xmin > xqmu + xqr):

# All solutions are more than Nsigma from the mean

# isigma_left = (xqmu - xqr - dist.mu_x)/dist.sigma_x

# isigma_right = (xqmu + xqr - dist.mu_x)/dist.sigma_x

# print "sigma", isigma_left, isigma_right

return (0,1) if return_pair else 0

# We want to cover the range xmin, xmax.