Hello again! Today we’re going to take a closer look at Moore’s Law, semiconductor foundries, and semiconductor economics — and a game that explores the effect of changing economics on the supply chain.

We’ll try to answer some of these questions:

- What does Moore’s Law really mean, and how does it impact the economics of semiconductor manufacturing?

- How does the foundry business model work, and how is it affected by the mix of technology nodes (180nm, 40nm, 28nm, 7nm, etc.)?

- What affects the choice of technology node when designing a chip?

- How will foundries decide what additional capacity to build in response to the chip shortage?

- Which is more profitable for semiconductor manufacturers: making chips in their own fab, or purchasing wafers from the foundries?

In Part Two of this series I gave a roundabout historical overview of the semiconductor industry from the early 1970s (the MOS 6502 and the pocket calculator days) through the 1980s (Commodore and the 8-bit personal computers), and through the 1990s and early 2000s (DRAM industry consolidation and Micron’s Lehi fab) along with some examples of fab sales. Successful semiconductor companies either build their own incredibly expensive new fabs for leading-edge technologies (which I called the “Mr. Spendwell” strategy), or they buy a not-so-leading-edge fab second-hand (the “Mr. Frugal” strategy) from another company that decides it is more profitable to sell theirs. We looked at the 1983 Electronic Arts game M.U.L.E., an innovative economic simulation game that showcases some important aspects of microeconomics. We looked at some examples of commodity cost curves where there are multiple suppliers each with varying levels of profitability, and we talked about perfect competition and oligopolies. We looked at the semiconductor business cycle. And we brought up the awful choice faced by companies in the semiconductor industry when it comes to capital expenditures and capacity expansion:

- Build or purchase more/newer production capacity: take financial risk to reduce competitive risk. The only way to keep a competitive edge, but costs \$\$\$ (Mr. Frugal) or \$\$\$\$ (Mr. Spendwell), and unless you can keep the fabs full, depreciation costs will reduce profitability. In aggregate across the industry, lots of new supply will trigger a glut, which nobody wants, but is a fact of life in semiconductor manufacturing.

- Conserve cash, don’t expand: take competitive risk to reduce financial risk. Maintain short-term profitability, but can lose market share and become less competitive.

Most of Part Two focused on the semiconductor manufacturers that design and sell the finished integrated circuits. Here in Part Three we’re going to look at the foundries, most notably Taiwan Semiconductor Manufacturing Company (TSMC).

First I need to get a few short preliminaries out of the way.

Preliminaries

Disclaimers

As usual, I need to state a few disclaimers:

I am not an economist. I am also not directly involved in the semiconductor manufacturing process. So take my “wisdom” with a grain of salt. I have made reasonable attempts to understand some of the nuances of the semiconductor industry that are relevant to the chip shortage, but I expect that understanding is imperfect. At any rate, I would appreciate any feedback to correct errors in this series of articles.

Though I work for Microchip Technology, Inc. as an application engineer, the views and opinions expressed in this article are my own and are not representative of my employer. Furthermore, although from time to time I do have some exposure to internal financial and operations details of a semiconductor manufacturer, that exposure is minimal and outside my normal job responsibilities, and I have taken great care in this article not to reveal what little proprietary business information I do know. Any specific financial or strategic information I mention about Microchip in these articles is specifically cited from public financial statements or press releases.

Furthermore, nothing in this article should be construed as investment advice.

Bell Chord

I also need to introduce something called a bell chord — which you’ve probably heard before, even if you don’t know what it is. Imagine three people wearing different colored shirts, who we’ll just call Red Shirt, Green Shirt, and Blue Shirt. Each is humming one note:

- Red Shirt starts first

- Green Shirt joins in one second later

- Blue Shirt joins in another second after that

We could graph this as a function of time:

If you still can’t imagine how that might sound, there’s an example in the first few seconds of this Schoolhouse Rock video about adverbs from 1974:

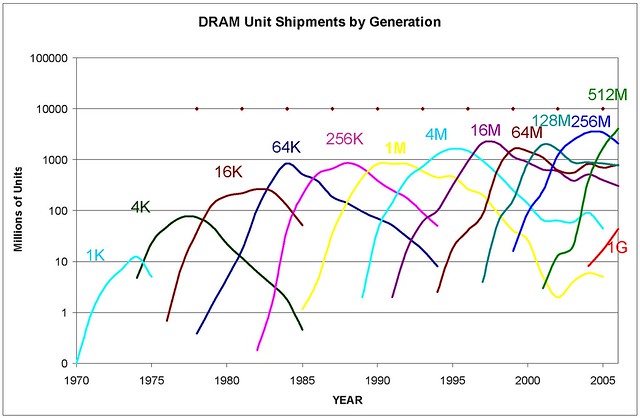

Remember that graph: you’re going to see others that are similar.

Ready? Let’s go!

More about Moore’s Law

I talked a little bit about Moore’s Law in Part Two: I had bought a 64MB CompactFlash card in 2001 for about \$1 per megabyte, and twenty years later, some 128 GB micro-SD cards for about 16 cents a gigabyte. Transistors get smaller, faster, and cheaper as technology advances and the feature sizes decrease.
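As a rough check on how fast that price drop was, here is a minimal Python sketch that converts the two prices above into a "halving time". It assumes 1 GB = 1000 MB and treats the two retail purchases as representative, which is a loose assumption; flash card prices reflect more than transistor cost alone.

```python
import math

# Prices quoted in the text; treating 1 GB as 1000 MB is an assumption.
cost_2001_per_gb = 1.00 * 1000   # about $1 per MB in 2001
cost_2021_per_gb = 0.16          # about 16 cents per GB, twenty years later
years = 20

ratio = cost_2001_per_gb / cost_2021_per_gb   # overall price reduction factor
halvings = math.log2(ratio)                   # how many times the price halved
halving_time_years = years / halvings

print(f"price per GB fell by a factor of about {ratio:,.0f}")
print(f"that is {halvings:.1f} halvings, or one every {halving_time_years:.2f} years")
```

Under these assumptions the price per gigabyte halved roughly every 1.6 years.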

That’s the common understanding of Moore’s Law: smaller faster cheaper technology advancement. But there are some nuances about Moore’s Law that affect the economics of semiconductor production.

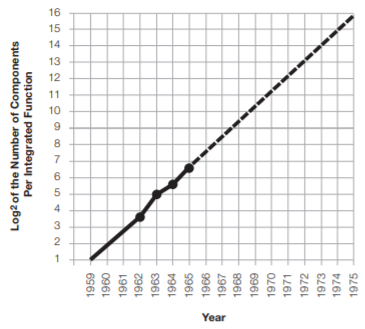

In 1965, Gordon Moore, then director of Fairchild Semiconductor’s Research and Development Laboratories, wrote a famous article in Electronics Magazine titled “Cramming More Components onto Integrated Circuits”, in which he stated (emphasis mine):

Reduced cost is one of the big attractions of integrated electronics, and the cost advantage continues to increase as the technology evolves toward the production of larger and larger circuit functions on a single semiconductor substrate. For simple circuits, the cost per component is nearly inversely proportional to the number of components, the result of the equivalent piece of semiconductor in the equivalent package containing more components. But as components are added, decreased yields more than compensate for the increased complexity, tending to raise the cost per component. Thus there is a minimum cost at any given time in the evolution of the technology. At present, it is reached when 50 components are used per circuit. But the minimum is rising rapidly while the entire cost curve is falling (see graph below). If we look ahead five years, a plot of costs suggests that the minimum cost per component might be expected in circuits with about 1,000 components per circuit (providing such circuit functions can be produced in moderate quantities.) In 1970, the manufacturing cost per component can be expected to be only a tenth of the present cost

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year (see graph on next page). Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000.
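The arithmetic behind that extrapolation can be sketched in a few lines of Python. This is only an illustration: the function name and the choice of 50 components in 1965 as the starting point are my assumptions, and Moore's own figures (about 1,000 for 1970 and 65,000 for 1975) were presumably read from his plots rather than computed this way, so the results agree only in order of magnitude.

```python
def projected_components(n0, year0, year, doubling_years):
    """Components per circuit at minimum cost, assuming steady exponential growth."""
    return n0 * 2 ** ((year - year0) / doubling_years)

# Starting point from the 1965 article: about 50 components per circuit.
for year in (1970, 1975):
    n = projected_components(50, 1965, year, doubling_years=1.0)
    print(year, round(n))
```

Changing `doubling_years` shows how sensitive a ten-year projection is to the assumed doubling period.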

This observation (that the number of components, for minimum cost per component, doubles every year) was dubbed Moore’s Law. As a prediction for the future, it was downgraded by Moore himself in 1975, in a speech titled “Progress in Digital Integrated Electronics”, to doubling every two years. Moore’s Law is not a “law” in a scientific sense, like Newton’s laws of motion, or the laws of thermodynamics; instead it is a realistic prediction about the pace of technological advancements in IC density. It is a pace that has become a sort of self-fulfilling prophecy; so many companies in the semiconductor and computer industries have adapted to this rate of advancement that, with today’s complex wafer fabrication processes, they can no longer make technological progress on their own, but instead are dependent on the pace of the group. In some ways, the group of leading-edge technology manufacturers is like the vanguard pack of competitive bicyclists in a race, known as the peloton. According to a 2008 essay by Michael Barry:

The peloton flows with the roads, and we, the cyclists, blindly hope that the flow is not broken. A wall of wheels and bodies means we can never see too far in front, so we trust that the peloton flows around any obstacle in the road like fish in a current.

When in the group, we follow the wheels, looking a few yards ahead, watching other riders to gauge our braking, accelerations and how we maneuver our bikes. Over time, cycling in a peloton becomes instinctual, and our bicycles become extensions of our bodies. When that flow is broken, reaction time is limited and we often crash.

Pat Gelsinger, the present CEO of Intel, stated a different analogy in a 2008 press roundtable — he was a senior vice-president at the time:

According to Gelsinger, Moore’s Law is like driving down a foggy road at night: a headlight allows one to see some distance ahead, but how the driver negotiates the road is up to him. Intel is “on the way to 22[-nm],” Gelsinger said. “We have a good view of what 14[-nm] and [15-nm] will look like, and a good idea of how we’ll break through 10[-nm]. Beyond that, we’re not sure.”

Well… perhaps; the headlight-on-the-foggy-road analogy makes it sound like Moore’s Law is a vision of what’s ahead that helps everyone imagine the future. It’s really a lot more than that: it’s this commitment that allows participants in the semiconductor industry to trust that there is a future. If I had to use a foggy road analogy, I’d change it to say it’s more like an extremely nearsighted man driving down a foggy road at night, Mister-Magoo-style, in the forested countryside, while someone else drives ahead of him to make sure the road is clear, pushing the deer and porcupines out of the way.

One approach the industry has used to keep things going is a series of technology “roadmaps”. When everyone follows the roadmap, it all comes together and we get this amazing pace of improvement to keep Moore’s Law going, with transistors getting smaller and smaller, and lower cost per transistor. This does not come easily. The technological work is pipelined, to go as fast as possible, with development just enough in advance of production to make things work reliably and iron out the last few kinks. Wafer fabrication equipment companies and fab process engineers and electronic design automation (EDA) companies and chip designers are all collaborating at the leading edge to use new processes as soon as they are ready. (This hot-off-the-presses stage is called “risk production”.[1])

It’s almost as though there are cars zooming forward on a highway at full speed, and the first cars leading the way are just behind the construction crews that are taking down the traffic cones and the “ROAD CLOSED” signs, which are behind a section of fresh asphalt that has just cooled enough to drive on, which is behind the paving equipment applying asphalt, and the road rollers, and the road graders, and the earth moving equipment, and the surveyors… all moving at full highway speed, to ensure the cars behind them remain unhindered, allowing each driver the oblivious faith needed to keep a foot on the gas pedal.

Source: The Herald-Dispatch, July 2020, photo by Kenny Kemp

Better hurry!

But enough with metaphors — the pace of technology development is dependent on the entire industry, not just one company.

Interlude, 1980s: Crisis and Recovery

The pace of Moore’s Law in the early days just sort of happened organically, as a by-product of the competition between the individual firms of the semiconductor industry. How did this industry-wide coordination come about, then?

In the early 1980s, Japanese companies gained the dominant share of the DRAM market (which I mentioned in Part Two), and the resulting shift to Japan became a sort of slow-motion creeping horror story in the United States semiconductor industry, which had previously dominated most of the semiconductor market. But that wasn’t the end of semiconductor manufacturing in the USA. The DRAM crisis led directly to a number of positive developments.

One was the formation of SEMATECH, an industry consortium of semiconductor manufacturers. As the old SEMATECH website stated:

SEMATECH’s history traces back to 1986, when the idea of launching a bold experiment in industry-government cooperation was conceived to strengthen the U.S. semiconductor industry. The consortium, called SEMATECH (SEmiconductor MAnufacturing TECHnology), was formed in 1987, when 14 U.S.-based semiconductor manufacturers and the U.S. government came together to solve common manufacturing problems by leveraging resources and sharing risks. Austin, Texas, was chosen as the site, and SEMATECH officially began operations in 1988, focused on improving the industry infrastructure, particularly by working with domestic equipment suppliers to improve their capabilities.

By 1994, it had become clear that the U.S. semiconductor industry—both device makers and suppliers—had regained strength and market share; at that time, the SEMATECH Board of Directors voted to seek an end to matching federal funding after 1996, reasoning that the industry had returned to health and should no longer receive government support. SEMATECH continued to serve its membership, and the semiconductor industry at large, through advanced technology development in program areas such as lithography, front end processes, and interconnect, and through its interactions with an increasingly global supplier base on manufacturing challenges.

SEMATECH came in at a critical time and helped the industry organize some basic manufacturing research. Japanese DRAM manufacturers had raised the bar on quality, achieving higher yields, and American companies had to react to catch up. Technology roadmapping was also one of SEMATECH’s first organized activities, in 1987.[2] As the semiconductor industry matured, SEMATECH weaned itself off government funding, and a number of member companies left in the early 1990s, its importance began to diminish. SEMATECH finally fizzled away around 2015, when Intel and Samsung left and the remnants were merged with SUNY Polytechnic Institute.

But SEMATECH wasn’t the only consortium that helped U.S. semiconductor firms organize towards improved progress. The Semiconductor Industry Association sponsored a series of cooperative roadmap exercises,[2] with the help of SEMATECH and the Semiconductor Research Corporation, culminating in the National Technology Roadmap for Semiconductors (NTRS) in 1992. This document included specific targets for the semiconductor industry to coordinate together:

The process that the groups followed was to develop the Roadmap “strawman,” or draft, and iterate it with their individual committee members. A revised draft of the Roadmap was issued before the workshop, and the key issues were highlighted for review at the actual workshop.

The charter of the workshop was to evaluate likely progress in key areas relative to expected industry requirements and to identify resources that might best be used to ensure that the industry would have the necessary technology for success in competitive world markets. One underlying assumption of the Semiconductor Technology Roadmap is that it refers to mainstream silicon Complimentary Metal-Oxide Silicon (CMOS) technology and does not include nonsilicon technologies.

…

For the first time, an industry technical roadmapping process prioritized the “cost-to-produce” as a key metric. The cost/cm² was taken as a benchmark metric against which budget targets were developed for the various fab production technologies. For example, lithography was allocated 35 percent of the total, multilevel metal and etch 25 percent, and so forth (see Table 3).

Technical characteristics of note are increasing logic complexity (gates/chip) and chip frequency and decreasing power-supply voltage. These specific characteristics set the vision for requirements for dynamic power for CMOS. They also set in place additional implications for engineering the capabilities to achieve or manage those requirements.

Many organizations have used the Roadmap to set up their own development plans, to prioritize investments, and to act as resource material for discussion of technology trends at various forums, including those of international character. In the United States, one of the most significant results of the workshop was to reinforce a culture of cooperation. The intent to renew the Roadmap on a periodic basis was established.

The 1992 NTRS was followed by another one in 1994:

The success of the 1992 Roadmap prompted the renewal of the Roadmap in 1994. A central assumption of the 1994 process was that Moore’s Law (a 4x increase in complexity every three years) would again extend over the next 15 years. Many experts have challenged this assumption because maintaining the pace of technology and cost reduction is an exceedingly difficult task. Nonetheless, no expectation or algorithm for slowing was agreed upon, and the coordinating committee framed the workshop against extension of Moore’s Law. A 15-year outlook was again taken, this time reaching to 0.07-μm CMOS technology in 2010. A proof of concept exists for low-leakage CMOS transistors near a 0.07-μm gate length. There appears to be no fundamental limit to fabrication of quality 0.07-μm CMOS transistors.

The final NTRS Roadmap was issued in 1997; it was succeeded by an annual series of International Technology Roadmap for Semiconductors (ITRS) editions from 1998 to 2016, which was subsequently reframed as the International Roadmap for Devices and Systems (IRDS) starting in 2017. NTRS, ITRS, and IRDS have all identified technical challenges that must be overcome to continue this pace of development, in areas including transistor design, lithography, packaging, and several other key technologies.

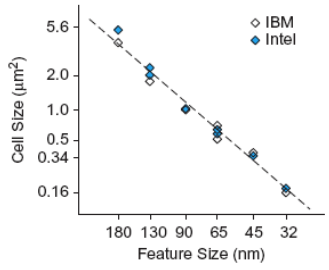

The upshot of all of this is to keep the transistor density increasing.

Moore’s Law keeps marching onwards, and this basically affects us in some combination of four aspects:

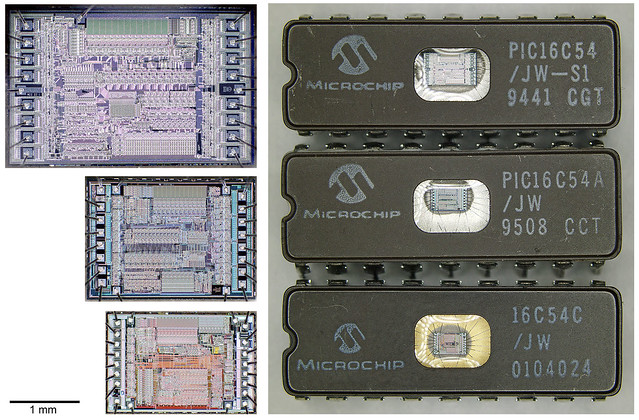

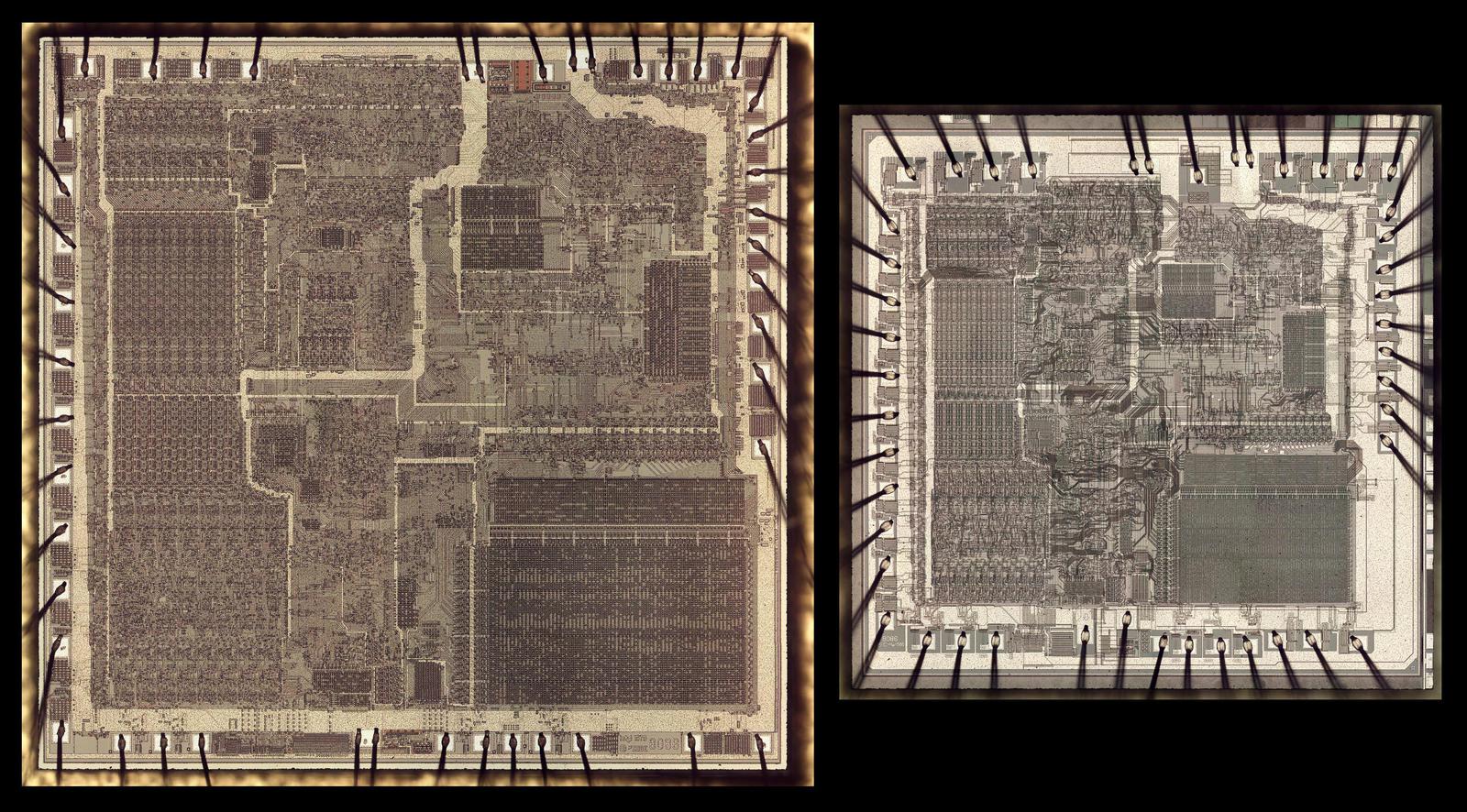

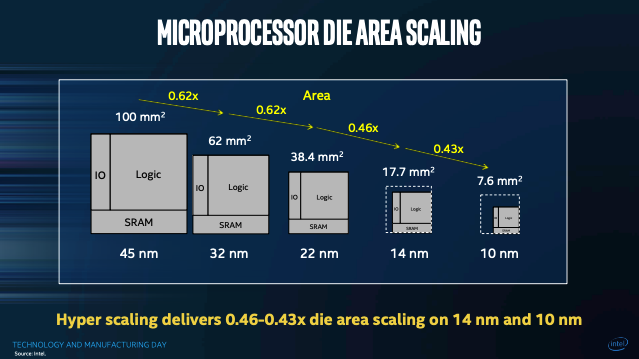

Chips of the same complexity get smaller, and more of them can fit on a wafer, so in general they cost less. When an IC design is reduced slightly, it’s called a die shrink. Ken Shirriff posted a technical writeup of the 8086 die shrink from 3μm to about 2μm which is worth the read.

© Ken Shirriff, reproduced with permission

Die shrinks also speed up and/or lower power consumption due to lower parasitic capacitances of the smaller transistors. If you want to get technical, there’s a principle known as Dennard scaling, which maintains the same electric field strength and the same power density per unit area, by reducing the supply voltage for smaller transistor geometries. So we can run smaller feature size chips faster at the same power level, or run them at the same speed with lower power dissipation, or some combination in between.

We can increase the number of transistors to fit more of them in about the same die area. This has been happening for microprocessors for the past 50 years:

Graph by Max Roser, Hannah Ritchie, licensed under CC BY 4.0

These three factors are known as Power-Performance-Area or PPA, and represent tradeoffs in design. If you’re willing to wait to take advantage of the next fabrication process with smaller feature sizes, however, you can improve all of them to some extent.
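To put rough numbers on the first two effects, here is a minimal Python sketch of ideal, textbook Dennard scaling for a linear shrink factor, which I'm calling `kappa` (my name, not a standard API). It uses the usual dynamic power approximation of capacitance times voltage squared times frequency, and it ignores everything that makes real die shrinks messier, such as bond pads that don't shrink, leakage current, and wafer edge loss.

```python
def dennard_scaling(kappa):
    """Ideal Dennard scaling factors for a linear shrink by kappa > 1."""
    area = 1 / kappa**2        # both die dimensions shrink by 1/kappa
    voltage = 1 / kappa        # supply voltage drops to keep the field constant
    capacitance = 1 / kappa    # capacitance scales with linear dimension
    frequency = kappa          # shorter gate delay allows a faster clock
    power = capacitance * voltage**2 * frequency   # dynamic power ~ C * V^2 * f
    return {
        "area": area,
        "dies_per_wafer": 1 / area,       # ignores edge loss and pads
        "power_per_transistor": power,
        "power_density": power / area,    # stays constant in the ideal case
    }

# Linear shrink from 3 um to 2 um, like the 8086 die shrink mentioned above.
factors = dennard_scaling(3 / 2)
for name, value in factors.items():
    print(f"{name}: {value:.3f}")
```

In this idealized case the die area and the power per transistor both fall to about 44% of their original values, so the power density is unchanged while about 2.25 times as many dies fit on a wafer.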

Benefits, benefits, lots of benefits from Moore’s Law. Yep. And we’ve mentioned how the pace of Moore’s Law is important to coordinate technological investments, and involves industry-wide roadmaps. But a question remains:

How do companies pay for the tremendous cost?

The Depreciation March

I am particularly desirous that there should be no misunderstanding about this work. It constitutes an experiment in a very special and limited direction, and should not be suspected of aiming at achieving anything different from, or anything more than, it actually does achieve.

— Maurice Ravel[3]

Now would be a good time to listen to some music, perhaps Ravel’s Boléro.

Boléro is a rather odd piece of classical music, in that it is one of the most repetitive classical compositions in existence — and yet it is also one of the most famous pieces of the 20th century. It isn’t exactly a march (it has a 3/4 time signature, so more like a waltz) but the pace is metronomic, and as I think of the historical pace of the semiconductor industry, I like to have this music going on in my head. Even though technology makes its way in cycles and bursts of innovation, somehow it is convenient to think of everything moving along in unison.

The semiconductor march of technology has a lot to do with depreciation, which I covered a bit in Part Two. Companies have to account for the expenses they put into capital equipment, and rather than just reporting some big expenditure all at once — which wouldn’t look very good or consistent in a financial statement — they spread it over several years instead. The big expense of semiconductor depreciation is in the wafer fabrication and test equipment, notably lithography machines, and is typically spread over four or five years. The building itself and cooling systems use 10-year or 15-year straight-line depreciation, but tend to be a smaller fraction of the expense. Integrated Circuit Engineering (ICE) estimated that semiconductor equipment comprised 80% of new fab costs in 1996,[4] with the other 20% coming from building construction and other improvements.
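Here is a minimal Python sketch of what that looks like on an income statement. The total fab cost is a hypothetical round number, the 80/20 split is the ICE estimate above, and I'm assuming straight-line schedules of five years for equipment and fifteen years for the building; real schedules vary by company.

```python
def straight_line(cost, years):
    """Annual straight-line depreciation expense for an asset."""
    return cost / years

fab_cost = 10e9                # hypothetical total fab cost, in dollars
equipment = 0.80 * fab_cost    # ICE's 1996 estimate: 80% equipment
building = 0.20 * fab_cost     # remaining 20%: construction and improvements

equipment_per_year = straight_line(equipment, 5)   # assumed five-year schedule
building_per_year = straight_line(building, 15)    # assumed fifteen-year schedule

for year in range(1, 8):
    # Equipment drops off the books after year 5; the building keeps going.
    expense = (equipment_per_year if year <= 5 else 0) + building_per_year
    print(f"year {year}: ${expense / 1e9:.2f}B")
```

The point of the sketch is the cliff after year five: once the equipment is fully depreciated, the annual expense falls to a small fraction of what it was.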

And the cost of that fab equipment is increasing. Sometimes this is known as Moore’s Second Law: that the cost of a new fab is rising exponentially. Gordon Moore stated his concern about fab cost in a 1995 article in The Economist:[5]

Perhaps the most daunting problem of all is financial. The expense of building new tools and constructing chip fabrication plants has increased as fast as the power of the chips themselves. “What has come to worry me most recently is the increasing cost,” writes Mr Moore. “This is another exponential.” By 1998 work will have started on the first \$3-billion fabrication plant. However large the potential return on investment, companies will find it hard to raise money for new plants. Smaller firms will give up first, so there are likely to be fewer chip producers—and fewer innovative ideas.

In 1997, ICE cited an estimate of 20% per year increase in the cost to build a leading-edge fab:[6]

Since the late 1990s this appears to have slowed down: in December 2018, the Taiwan News reported the cost of TSMC’s 3nm fab in Tainan as \$19.45 billion, which represents “only” a 13.7% annual increase over the roughly \$1.5 billion it cost to build several fabs in 1998.[6] That is still a lot of money, though.
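The 13.7% figure is a compound annual growth rate over the twenty years between those two data points. A minimal Python sketch of the calculation, using only the numbers quoted above:

```python
cost_1998 = 1.5e9      # roughly $1.5 billion in 1998
cost_2018 = 19.45e9    # reported cost of TSMC's 3nm fab, December 2018
years = 2018 - 1998

# Compound annual growth rate between the two data points.
annual_growth = (cost_2018 / cost_1998) ** (1 / years) - 1
print(f"annual growth: {annual_growth:.1%}")

# For comparison: the same starting cost growing at 20% per year.
cost_at_20_percent = cost_1998 * 1.20 ** years
print(f"at 20% per year: ${cost_at_20_percent / 1e9:.1f}B")
```

Had the 20% annual rate continued from 1998, the same calculation gives a fab cost of more than \$57 billion by 2018, so the slowdown is substantial.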

How do they plan to pay for it?

It takes time to build a semiconductor fab and get it up and running productively, so at first it’s not making money, and is just a depreciation expense. Because of this, there is some urgency to make most of the money back in those first few years. In practice, it’s the profits from the previous two technology nodes that are paying for the next-generation fab.

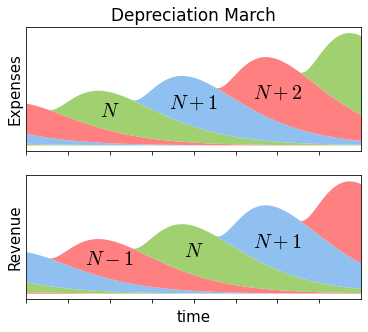

Here’s a graph from a slide that Pat Gelsinger presented at Intel’s 2022 Investor Day:

The x-axis here is time, and the y-axis here is presumably revenue. The \( n \), \( n+1 \), \( n+2 \), \( n+3 \) are generations of process technology.

The idea is that each new process technology goes through different stages of maturity, and at some point, the manufacturer takes it off-line and then sells the equipment to make room for the latest equipment, so the manufacturer’s mix of technology nodes evolves over time.

Finding examples of fabs selling equipment in this manner is tricky, and not as straightforward as finding examples of fab sales (of which I mentioned several in Part Two) or fab shutdowns, which make the newspapers, like the 2013 shutdown of Intel’s Fab 17 in Hudson, Massachusetts. This was Intel’s last fab for 200mm wafers, and was closed because, as Intel spokesman Chuck Malloy said at the time, “It’s a good factory. It’s just that there’s no road map for the future.” The transition between 200mm wafer equipment and 300mm wafer equipment is more of a barrier than between technology nodes of the same wafer size; I’m not really sure what that means in practice, but perhaps there are major building systems that need to be upgraded, so a fab that processes 200mm wafers just isn’t cost-effective to upgrade to recent generations of 300mm equipment. The most recent leading-edge fabs that use ASML’s EUV machines require structural design changes in the buildings to accommodate these machines. From a December 2021 article in Protocol describing Intel’s Hillsboro, Oregon site:

There are no pillars or support columns inside the plant, which leaves more space for the engineers to work around the tools. Custom-made cranes capable of lifting 10 tons each are built into the ceiling. Intel needs those cranes to move the tools into place, and open the machines up if one needs work or an upgrade. One of the ASML systems Phillips stopped by to demonstrate was undergoing an upgrade, exposing a complex system of hoses, wires and chambers.

“Each [crane] beam can lift 10,000 kilograms, and for some of the lifts you have to bring two of the crane beams over to lift the components in the tool,” Phillips said. “That’s needed to both install them and maintain them; if something deep inside really breaks, you’ll have to lift up the top module, set it on a stand out behind or in front of the tool, then the ASML field-service engineers can get to work.”

Below the EUV tools, Intel built a lower level that contains the transformers, power conditioners, racks of vacuum pumps for hydrogen-abatement equipment systems and racks of power supplies that run the CO2 driver laser. The driver laser shoots the microscopic pieces of tin inside the tool at a rate of 50,000 times a second. Originally made by a laser company called Trumpf for metal cutting, the EUV version combines four of its most powerful designs into a single unit for EUV use, Phillips said.

And an EE News Europe article in April 2022 describing a recent delivery of EUV machines to Intel’s Fab 34 in Leixlip, Ireland: (my emphasis)

The system consists of 100,000 parts, 3,000 cables, 40,000 bolts and more than a mile of hosing. It has taken 18 months of design and construction activity to prepare the Fab 34 building to receive the machine. The building was design [sic] around the scanner, which spans four levels of the factory, from the basement, through to the subfab to the fab. It has an inbuilt crane that extends 3m into the ceiling level. It also needs approximately 700 utility points of connection for its electrical, mechanical, chemical and gas supplies.

It was first transported by 4 Boeing 747 Cargo planes with pieces weighing up to 15 tonnes each. It completed the final part of its journey via road where it was carried by over 35 trucks.

So chances are you’re not going to see old fabs upgraded to use EUV equipment. At any rate, there are reasons that buildings reach the end of their useful life. Fab equipment is a different story, and there is a market for used equipment, but it’s not well-publicized. As a 2009 EE Times article put it:[7]

Mainstream equipment vendors that have divisions focused on selling refurbished gear aren’t anxious to publicize used equipment sales because their marketing efforts are focused on pushing their latest and greatest technology. Few analysts follow the used equipment market, and even those that do acknowledge that it’s difficult to track. But those analysts who do track the market for refurbished equipment forecast that it will rise significantly in 2010 after a down year in 2009.

As evidence that more companies are warming to used equipment buys, Exarcos, Kelley and others point to Texas Instruments Inc.’s October deal to buy a full fab of equipment from Qimonda AG and use it to equip what had been an empty fab shell in Richardson, Texas. While virtually everyone agrees that the TI deal was an unusual circumstance because bankruptcy forced Qimonda to unload an entire fab’s worth of relatively new 300-mm equipment at a huge discount, Exarcos and others say it highlights what has taken place in the past year.

DRAM vendors and others moved swiftly to take capacity offline early this year at the height of the downturn. Other companies were forced by economic reasons to close fabs or file for bankruptcy protection. As a result, there is a glut of used equipment on the market, much of it a lot newer than you would typically find on the market, they say.

Equipment apparently goes through several stages in its life cycle:

- new: high cost (depreciation ongoing) but revenue capture increasing from zero to high revenue

- depreciated: moderate revenue capture with low cost (depreciation complete)

- legacy: low revenue capture with low cost

I prefer to think of it like this, at least as a very rough approximation, without the effects of economic cycles:

The horizontal tick marks are about a year apart — since 180nm in the late 1990s, the technology nodes have been introduced every two years or so — and the vertical scales are not identical, since revenue has to be larger than expenses to allow some gross margin. Revenue from the next generation \( N+1 \) (“new”) comes in a couple of years after the start of depreciation and development expenses — it takes time to bring up each new process — which are financed by the revenues of generations \( N \) (“new” transitioning to “depreciated”) and \( N-1 \) (“depreciated”). In order to remain profitable, each new generation’s revenue (and expenses) is larger than the previous one. Equipment for depreciated technology nodes brings in income to pay for equipment in new technology nodes, and this new equipment eventually becomes the next set of depreciated equipment three to five years later. Sometime after that, it eventually becomes legacy equipment with low revenue-earning potential, and may be sold to make room for the next round of new equipment. (New, depreciated, legacy, new, depreciated, legacy, one two three, one two three....)
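For readers who would rather see this as a toy model than a graph, here is a minimal Python sketch of the same idea. Every number and profile shape in it is hypothetical (the 30% growth per generation, the ramp and fade timings, the arbitrary money units); only the two-year node spacing, the couple of years between the start of depreciation and first revenue, and the roughly five-year depreciation period come from the description above.

```python
def node_revenue(age, peak):
    """Toy revenue profile for one node; age is years since first revenue."""
    if age < 0:
        return 0.0
    if age < 2:
        return peak * age / 2      # "new": revenue ramping up from zero
    if age < 5:
        return peak                # full revenue while becoming "depreciated"
    return max(0.0, peak * (1 - (age - 5) / 5))   # "legacy": fading out

def node_depreciation(age, capex, lead=2, life=5):
    """Straight-line depreciation that starts `lead` years before first revenue."""
    return capex / life if -lead <= age < life - lead else 0.0

GROWTH = 1.3    # hypothetical: each generation is 30% larger than the last
SPACING = 2     # years between node introductions

for year in range(10, 16):
    revenue = expense = 0.0
    for gen in range(10):
        age = year - gen * SPACING     # years since this node's first revenue
        scale = GROWTH ** gen
        revenue += node_revenue(age, peak=3.0 * scale)
        expense += node_depreciation(age, capex=5.0 * scale)
    print(f"year {year}: revenue {revenue:6.1f}, depreciation {expense:6.1f}")
```

In any given year the depreciation comes mostly from nodes that are not yet at full revenue, while the revenue comes mostly from nodes introduced a few years earlier, which is the staggered pattern described above.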

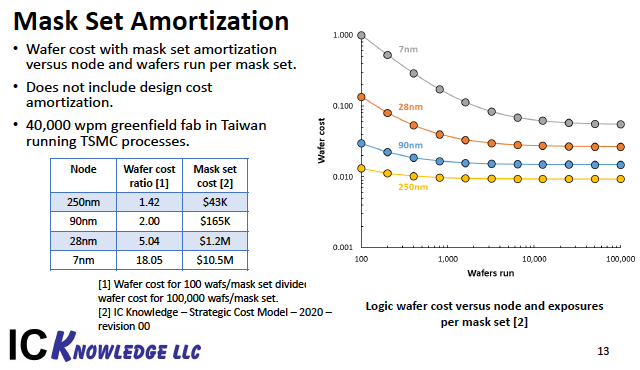

On the expenses side, Scotten Jones of IC Knowledge LLC described it this way in an article, Leading Edge Foundry Wafer Prices,[8] from November 2020:

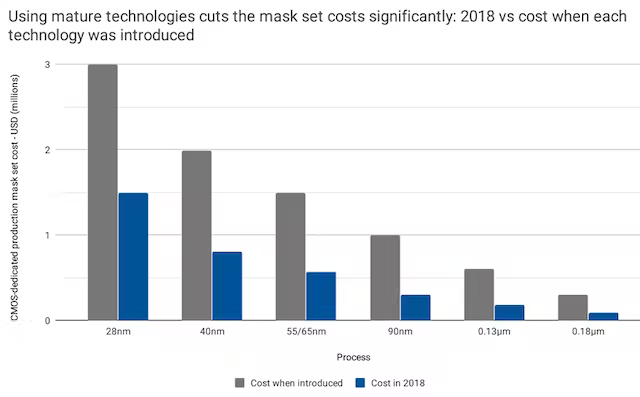

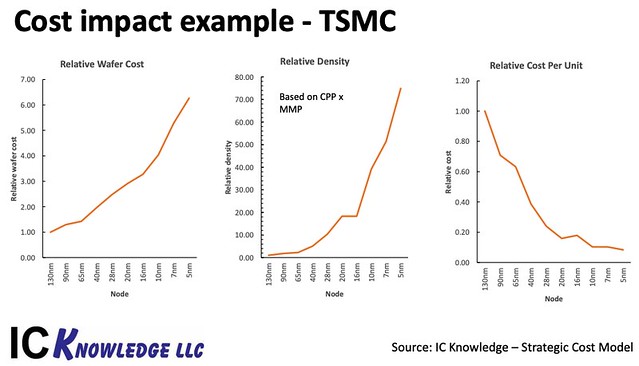

When a new process comes on-line, depreciation costs are high but then as the equipment becomes fully depreciated the wafer manufacturing costs drops more than in-half. TSMC and other foundries typically do not pass all the cost reduction that occurs when equipment becomes fully depreciated on to the customer, the net result is that gross margins are lower for newer processes and higher for older fully depreciated processes.
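A small Python sketch shows the mechanism Jones describes. All of the dollar amounts are hypothetical values I picked so that the cost falls by more than half once depreciation ends while the price falls by less; they are not taken from his article.

```python
def gross_margin(price, cost):
    """Gross margin as a fraction of the selling price."""
    return (price - cost) / price

# All numbers below are hypothetical, chosen only to illustrate the mechanism.
depreciation_per_wafer = 3000.0   # while equipment is still being depreciated
other_cost_per_wafer = 2000.0     # materials, labor, utilities, and so on
price_new = 8000.0                # wafer price while the process is new
price_mature = 6000.0             # price after a partial pass-through of savings

cost_new = depreciation_per_wafer + other_cost_per_wafer
cost_mature = other_cost_per_wafer    # depreciation complete

margin_new = gross_margin(price_new, cost_new)
margin_mature = gross_margin(price_mature, cost_mature)
print(f"new process:    cost ${cost_new:.0f}, gross margin {margin_new:.1%}")
print(f"mature process: cost ${cost_mature:.0f}, gross margin {margin_mature:.1%}")
```

With these made-up numbers the customer pays 25% less for a mature-process wafer, yet the foundry's gross margin rises from 37.5% to about 67%.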

Jones also shows a more realistic graph of depreciation expenses in this article — see his Figure 2. Whereas my “expenses” graph looks like a bunch of proportionally-scaled dead whales piled up on each other, his is more irregular and looks like a pile of laundry or misshapen leeches that has toppled over, but otherwise the general characteristics are at least somewhat similar.

On the revenue side:

This behavior makes sense for DRAM, since today’s cutting-edge DRAM chips will become worthless and obsolete in a relatively short time. PC microprocessors have a limited lifetime as well; here’s a graph from Integrated Circuit Engineering (ICE) Corporation’s “Profitability in the Semiconductor Industry”, showing about an 8-10 year life for each Intel processor generation in the 1980s and 1990s, with dominance lasting only about 2-3 years for each generation:[4]

Source: Integrated Circuit Engineering, Profitability in the Semiconductor Industry, Figure 1-25, Product Lifecycle for Intel Processors

So eventually the older legacy nodes fade away. Here’s another example, from a 2009 paper by Byrne, Kovak, and Michaels, graphing different TSMC node revenue:[9]

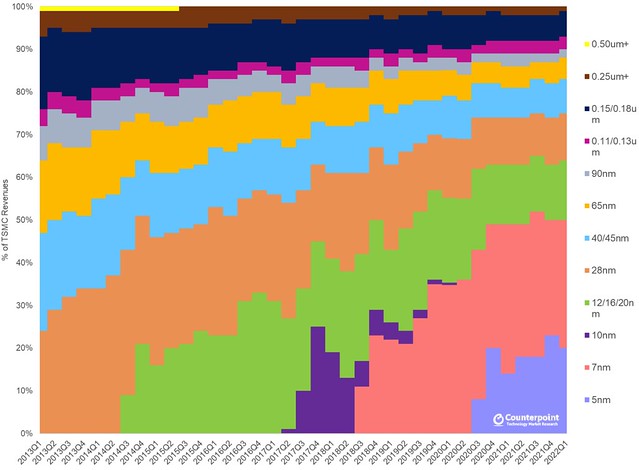

And a similar graph in cumulative form from Counterpoint Research, showing more recent TSMC earnings data:[10]

Take a look: 65nm and 40/45nm made up about 40% of TSMC’s revenue in 2013, but now in 2022 they’re on their way out, amounting to barely 10%.

Except something’s not right here.

See if you can figure out what. In the meantime, since these are graphs from TSMC financial statements, we’re going to talk about foundries.

An Introduction to Semiconductor Foundries

It seems odd today, but for the first quarter-century after the invention of the integrated circuit in the late 1950s, semiconductor design and fabrication were tightly coupled, and nearly all semiconductor companies manufactured their own chips. (Today we call that sort of company an integrated device manufacturer or IDM.) I showed this excerpt from New Scientist’s September 9, 1976 issue in Part Two:

At present, Commodore produces the art-work for its calculator chips and subcontracts the chip manufacture to outside plants around the world with spare capacity. The recent purchase of factories in the Far East has enabled it to assemble electronic watch modules by this subcontracting method. But as the up-turn in the economy begins to effect [sic] the consumer electronics industry, less spare capacity is becoming available for this type of subcontracting. When considered along with the additional recent purchase of an LED display manufacturing facility, Commodore now has a completely integrated operation.

Custom chips were a thing in the 1970s, but relied on spare capacity from larger semiconductor companies… and as we saw in Part Two, available semiconductor manufacturing capacity undergoes wild swings every four or five years with the business cycle. Not a particularly attractive place for those on the buying end. Changes began in the late 1970s, with Carver Mead and Lynn Conway’s 1979 book, Introduction to VLSI Systems, which created a more systematic approach to large digital IC designs. Mead had visions of a future where design and fabrication were separated, using Gordon Moore’s term “silicon foundry”. In September 1981, Intel announced its foundry service; an article in Electronics described Intel’s intentions:

Forging ahead in the foundry business

While one part of Intel knocks on doors for software, another has opened its door to outside chip designers, allowing them to take advantage of its design-automation and integrated-circuit-processing facilities. Though it has been providing the service for six months, the company is just making public the fact that it has set up a silicon foundry in Chandler, Ariz.

Intel is supplying customers with complete sets of design rules for its high-performance MOS and complementary-MOS processes. Also on offer is its scaled-down H-MOS II technology, and even more advanced processes like H-MOS III will be added as they become available. Computer-aided-design facilities for circuit layout and simulation will also be provided, as well as services for testing and packaging finished devices.

A manufacturer’s willingness to invite this kind of business usually indicates an overcapacity situation, and Intel’s case is no exception. Indeed, the company says it is setting aside about 10% of its manufacturing capacity for the foundry. However, mixed with this excess capacity is a sincere desire to tap into the foundry business, states manager Peter Jones. “We kicked around the idea when there wasn’t a spare wafer in the industry,” he says of the firm’s long-standing interest in such services.

Referring to more sophisticated customers, Jones explains that “at the system level, their knowledge is greater than ours, so if we don’t process their designs, we’ll lose that share of the business.” The market for the silicon foundry industry could grow from about \$50 million to nearly a half billion dollars by 1985. It is all but certain that Intel will eventually enter the gate-array business as well, perhaps by next year. At present, it seems to be leaning toward a family of C-MOS arrays with high gate count.

— John G. Posa

That same issue of Electronics included an ad from ZyMOS, a small custom chip house, using the term “foundry service”:

John Ferguson summed up the birth of the foundry industry this way, talking about the manufacturing lines at the IDMs:[11]

Occasionally, however, there were times when those manufacturing lines were idle. In Economics 101, we all learned that’s called “excess capacity,” and it’s generally not a good thing. If your machines and staff aren’t doing anything, they’re costing you money. So the semiconductor companies did what any smart business would do—they invented a new industry. They offered up that excess capacity to other companies. At some point, someone realized that by taking advantage of this manufacturing capacity, they could create an IC design company that had no manufacturing facilities at all. This was the start of the “fabless” model of IC design and manufacturing that has evolved into what we have today.

In 1987, someone realized that same model could go the other way as well. The Taiwan Semiconductor Manufacturing Company (TSMC) was introduced as the first “pure-play” foundry — a company that exclusively manufactured ICs for other companies. This separation between design and manufacturing reduced the cost and risk of entering the IC market, and led to a surge in the number of fabless design companies.

TSMC was not the first foundry — as the September 1981 Intel announcement and ZyMOS ad illustrate. Foundries were going to happen, one way or another. But focusing solely on wafer fabrication for customers — not as a side business, and not in conjunction with IC design services — was TSMC’s key innovation, and the “someone” who realized it could work was Morris Chang, founder of Taiwan Semiconductor Manufacturing Company in 1987.

Chang had spent 25 years at Texas Instruments, and then, after a yearlong stint as president and chief operating officer of General Instrument, left to head Taiwan’s Industrial Technology Research Institute (ITRI). ITRI had played a pivotal role in the birth of Taiwan’s semiconductor industry in March 1976, when one of ITRI’s subgroups, the Electronics Research Service Organisation (ERSO), struck a technology transfer agreement with RCA, to license a 7-micron CMOS process and receive training and support. RCA was a technology powerhouse in the middle decades of the 20th century, under the leadership of David Sarnoff, and had pioneered the first commercial CMOS ICs in the late 1960s. But as Sarnoff grew older, his son Robert took his place as RCA’s president and then as CEO, and the younger Sarnoff more or less fumbled the company’s technical lead in semiconductors in the early 1970s, chasing consumer electronics (television sets, VCRs, etc. — my family upgraded from a Zenith black-and-white vacuum-tube TV to an RCA color transistor TV in 1980) and conglomerate pipe dreams; RCA missed out on a chance to hone its strengths in semiconductor design and fabrication and to make CMOS rule the world. Meanwhile, NMOS, not CMOS, dominated the nascent microprocessor and DRAM markets, at least in the 1970s. RCA did manage to get at least some income from licensing CMOS to companies like Seiko. The ITRI/ERSO agreement came amid RCA’s swan song in semiconductors:[12]

ITRI had accomplished the introduction of semiconductor technology to Taiwan. Of course Taiwan was still far from catching up with advanced nations but the main goal was to learn more about semiconductor technology, and to accumulate knowledge. For this goal, a pilot plant, with design, manufacturing and testing capabilities had been built, and was geared towards producing simple semiconductors. As noted, RCA had developed the first CMOS integrated circuits, but CMOS was at the time not a widespread technology. RCA was actually about to withdraw from the semiconductor industry and the licensing deal with ITRI was an opportunity to squeeze some last income from a mature technology. The 7.0 micron CMOS was mature, and far behind the worlds leading LSI 2.0 micron circuit designs, nonetheless for ERSO this was a way of gaining access to the world of semiconductors. In retrospect, the licensing of CMOS technology proved to be a wise choice. First of all ITRI did not have to directly compete with established producers on a global market. Second the market share of CMOS was relatively small at the end of the 1970s, but started to expand rapidly afterwards to become the most used technology in IC design today. After RCA withdrew from the semiconductor industry in the early 1980s, ITRI also inherited the intellectual property portfolio from RCA that had been related to CMOS technology (Mathews and Cho, 2000).

(The irony, of course, is that eventually CMOS did come to rule the semiconductor world, without RCA in it.)

Chang had not been at the helm of ITRI long when the Taiwan government asked him to start a semiconductor firm, according to an article in IEEE Spectrum about Chang’s founding of TSMC:[13]

Taking on the semiconductor behemoths head-to-head was out of the question. Chang considered Taiwan’s strengths and weaknesses as well as his own. After weeks of contemplation he came up with what he calls the “pure play” foundry model.

In the mid-’80s there were approximately 50 companies in the world that were what we now call fabless semiconductor companies. The special-purpose chips they designed were fabricated by big semiconductor companies like Fujitsu, IBM, NEC, TI, or Toshiba. Those big firms drove tough bargains, often insisting that the design be transferred as part of the contract; if a product proved successful, the big company could then come out with competing chips under its own label. And the smaller firms were always second-class citizens; their chips ran only when the dominant companies had excess capacity.

But, Chang thought, what if these small design firms could contract with a manufacturer that didn’t make any of its own chips — meaning that it wouldn’t compete with smaller firms or bump them to the back of the line? And he realized that this pure-play foundry would mean that Taiwan’s weaknesses in design and marketing wouldn’t matter, while its traditional strengths in manufacturing would give it an edge.

TSMC opened for business in February 1987 with \$220 million in capital—half from the government, half raised from outside investors. Its first customers were big companies like Intel, Motorola, and TI, which were happy to hand over to TSMC the manufacture of products that used out-of-date technology but were still in some demand. That way, the companies wouldn’t have to take up their own valuable fab capacity making these chips and would face little harm to their reputation or overall business if TSMC somehow failed to deliver.

At the time, TSMC was several generations behind the leading-edge CMOS processes. Now, of course, TSMC is the leading semiconductor foundry in the world, with over 50% market share among the foundries (Samsung is a not-so-close second) and is one of the few companies left that manufacture the most advanced technology nodes. Foundries like TSMC and UMC are more or less the Taiwanese equivalent to Swiss banks: neutral parties facilitating business from all over the world.

The fabless industry is no longer just a place for relatively small semiconductor companies who don’t want to own their own wafer fabs. Today’s foundry customers include technology giants such as Apple, Nvidia, and Qualcomm. Most of the former IDMs, including all of the major microcontroller manufacturers, are now in the “fab-lite” category, relying on their own fabs for cost-effective manufacturing of larger technology nodes, but outsourcing more advanced manufacturing processes to TSMC / UMC / SMIC / etc. Even Intel uses external foundries like TSMC, although it’s trying to keep the focus on its own leading-edge manufacturing.

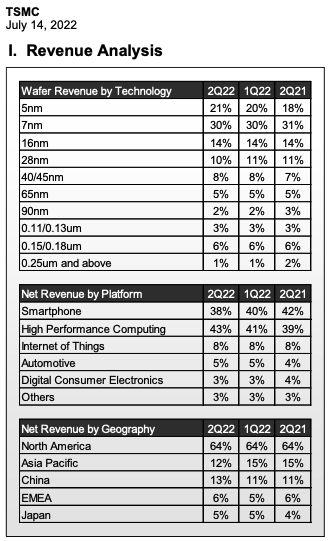

Half of TSMC’s revenue now comes from technology nodes 7nm and smaller. Which brings us back to that Counterpoint Research graph.

Not Fade Away

It’s mesmerizing, isn’t it? What strikes me is the stationarity: the distribution of revenue at TSMC is more or less the same no matter what year it is. In 2013 the three leading-edge processes (28nm, 40/45nm, and 65nm) make up about 60-65% of revenue. In 2018 the three leading-edge processes (10nm, 12/16/20nm, and 28nm) make up about 60-65% of revenue. In 2022 the three leading-edge processes (5nm, 7nm, 16nm — TSMC has settled on 16nm, according to its quarterly reports) make up about 60-65% of revenue.

Which is what, presumably, TSMC and the semiconductor industry want. Stability in motion. A perpetual leading edge; just jump on for a ride anytime you like. Don’t worry, we’ll keep Moore’s Law going.

I was researching the economic impacts and implications of Moore’s Law in October 2021, searching for answers. How does this work? Some of it was unsettling. I mean Moore’s Law can’t go on forever, can it? Sooner or later you run into atomic scale and then what? But apparently we’ve still got a ways to go, at least on the technological front. The metaphor I had in mind then wasn’t a peloton, or Mister Magoo driving on a foggy road, or a bunch of cars driving on a just-paved-in-time highway, or a Depreciation March — it was more like a sleighride careening downhill just barely in control, or like Calvin and Hobbes on a toboggan, or the protagonists in Green Eggs and Ham as they ride on a train down questionably-engineered tracks, the train’s passengers relaxing serenely all the while. (We never do find out the name of the guy who says he doesn’t like green eggs and ham.) Anyway, everything’s stable as long as the ride goes on downhill, but what happens at the end?

That part I can’t answer.

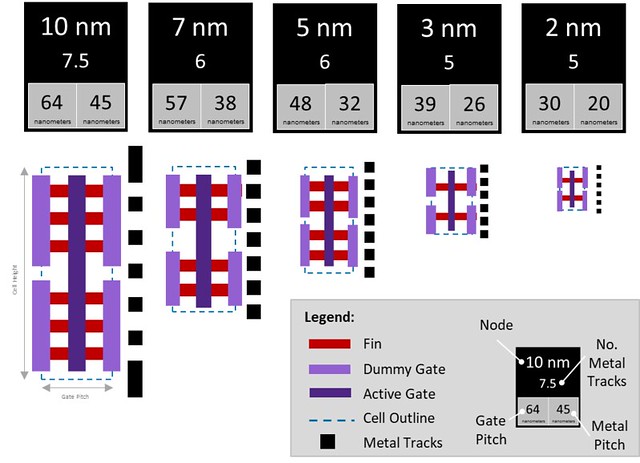

The economic implications of Moore’s Law in recent years, on the other hand, are out there, but some of them are subtle; you just have to look around a bit. One is the rising costs of IC development. Here’s a graph from Handel Jones at IBS, which was included in a 2018 article titled Big Trouble At 3nm:[14]

Yikes! Half a billion dollars to design one chip using 5nm technology is some serious spending. What isn’t clear from graphs like these, however, are the conditions. Is it a die of a given area, say, 6mm x 6mm? If so, the transistor counts are going up along with the complexity, so sure, I would expect the costs to rise. But what happens if I have a chip design that’s going to need 10 million transistors, regardless of whether it’s 28nm or 65nm or 130nm… how does the design cost change with the technology node?

I can’t answer that question, either.

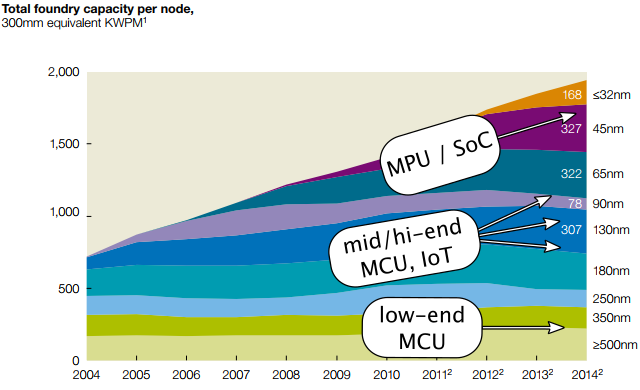

About this time (October 2021) I ran across a series of publications from McKinsey & Company, titled (what else) McKinsey on Semiconductors. Which are good reads; just realize that instead of coming from the technological angle, they’re coming from the management-consultant angle: custom answers for \$\$\$\$; don’t bother asking the questions, since they will tell you the questions you should have asked. Anyway here’s a graph from McKinsey on Semiconductors 2011 that made me do a double-take:

Huh. Leading-edge nodes are only a small share of foundry volume.

The leading edge is where all the money is going. Lots of expense but lots of revenue, even if it’s not a large share of capacity. Today manufacturing a 7-nanometer wafer full of finished chips might cost ten times what a 180-nanometer wafer costs. But that’s because of the cost of depreciation. Once the 7-nanometer equipment has been paid for, the cost of a finished wafer will come down, and then 5-nanometer and 3-nanometer will make up the bulk of the revenue. New, depreciated, legacy, new, depreciated, legacy.... Billions of dollars are on the line from the foundries, from the EDA companies, from the semiconductor equipment companies, and the only way the economics are going to work so well is if they are reasonably stable and predictable: yesterday’s advanced nodes pay for tomorrow’s even more advanced nodes, while yesterday’s advanced nodes become mature nodes, and the capacity of those mature nodes is there for everyone to use to keep the cost down. There have been some hiccups; after 28-nanometer, most of the foundries took a little longer than expected to get to FinFETs, and EUV finally arrived in time to make a big difference, like a star actor bursting on-stage just in time for Act IV of the play. But overall, Moore’s Law has kept right on going.

Still: leading-edge nodes are only a small share of foundry volume. So all the trailing-edge mature legacy nodes are still there; they bring in some revenue, but not as much as the leading edge. And they don’t go away!

That was the part that made me do the double-take. Yes, if you look at things in relative terms, the older technology nodes make up a smaller percentage of foundry capacity than they used to. But the foundry capacity of mature nodes is still there, pumping out new chips year after year. With the chip shortage going on, maybe there’s a perception that the industry has been bringing old technology off-line intentionally, or that adverse events like COVID-19 have reduced available capacity — this is not the case! The capacity has been maintained all this time, but the demand has gone up and we’ve bumped up pretty hard against a supply limitation.

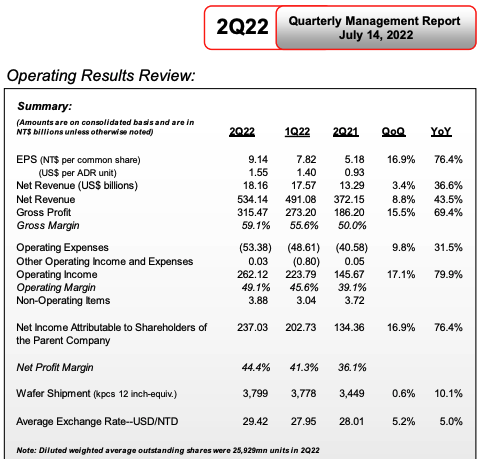

The McKinsey graph is from 2011. I couldn’t find any similar data on foundry capacity that is more recent, but TSMC does publish its financial results every quarter, and they break down their revenue by technology node — I’m assuming the Counterpoint Research graph just used that data — so if you’re willing to go to the trouble to visit TSMC’s website, you can analyze their financial data yourself. Let’s take a look:

Fun With Foundry Financial Figures

I went back over the last 20 years and here’s my graph:

TSMC itself reports revenue breakdown by node as a percentage of total revenue, not in dollars — but it also reports total revenue in New Taiwan dollars, so you can just multiply the two together to get revenue in absolute terms, at least within the granularity of around 1% of the total revenue.[15] Here’s an example from the TSMC 2Q22 Management Report:

Some of the node groups on my graph, which I think of as “buckets”, are a little weird (16/20nm together, and 110/130nm and 150/180nm) but that’s just the way TSMC has lumped them together during most of their history, so to get some consistency, when I looked at the older results I used the same buckets — in other words, back in 2003 when 150nm and 180nm were reported separately I just added those together. Or today, when TSMC just reports 16nm, not 16/20nm — I presume 20nm is dead? — I still applied it to the same 16/20nm bucket.
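The percentage-times-total calculation and the bucketing are simple enough to sketch in a few lines. This is only an illustration of the approach, not the script I actually used: the function name and the bucket map are made up for the example, and the "older quarter" inputs are hypothetical placeholders. The 2022Q1 inputs (NT\$438.64 billion of wafer revenue, 5nm at 20%) are the figures quoted later in this article, and I'm assuming the percentage is taken against that same total.

```python
def revenue_by_bucket(total_revenue, share_pct, buckets):
    """Convert percentage shares into absolute revenue, summed per bucket.

    total_revenue: total wafer revenue for the quarter (any currency unit)
    share_pct:     {node label as reported: percent of revenue}
    buckets:       {node label as reported: bucket name}; labels that are
                   not listed are used as their own bucket
    """
    result = {}
    for node, pct in share_pct.items():
        bucket = buckets.get(node, node)
        result[bucket] = result.get(bucket, 0.0) + total_revenue * pct / 100.0
    return result

# Illustrative bucket map: fold separately reported nodes into one bucket
BUCKETS = {
    "150nm": "150/180nm", "180nm": "150/180nm",
    "16nm": "16/20nm", "20nm": "16/20nm",
}

# 2022Q1, NT$ billion: the 5nm share is reported only to the nearest 1%
q1_2022 = revenue_by_bucket(438.64, {"5nm": 20}, BUCKETS)

# Hypothetical older quarter in which 150nm and 180nm appear separately
older = revenue_by_bucket(50.0, {"150nm": 12, "180nm": 18}, BUCKETS)

print(q1_2022, older)
```

The 5nm result comes out near NT\$87.7 billion, against NT\$86.292 billion in the audited statements. That gap is the rounding of the reported share to a whole percent, which is the granularity limit mentioned above.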

Anyway it’s remarkable how level these revenue lines are. (Well, at least prior to the chip shortage.) There’s a little blip around the first quarter of 2009 — that’s the 2008 financial crisis kicking in — and in the past year or so, an uptick across-the-board, presumably due to the increased demand of the chip shortage and TSMC’s price increases. Oh, and there was 10nm’s untimely death; now TSMC just reports 16nm and 7nm. But look at 150/180nm. Twenty years and it keeps on going! The same, more or less, with 65nm and 40nm. The 90nm and 110/130nm nodes have decreased since their introduction, but otherwise they’re still there; 28nm and 16nm have come down slightly also.

What would be really interesting is to see the number of wafers manufactured for each node, rather than the revenue, since presumably the cost per wafer decreased after the first few years of production. But that’s proprietary information and I don’t expect TSMC to be very forthcoming.

The graph from Intel’s 2022 Investor Day that I cited earlier is actually from a slide that contains several graphs:

Intel’s message, apparently, is that they want to stop throwing away the old equipment and keep it in use, the way TSMC has done, and squeeze out those extra drops of revenue at the trailing edge — well, at least that’s what I read into it.

Leading-edge nodes are only a small share of foundry volume. And trailing-edge nodes keep on living, bringing in revenue. (Despite rumors to the contrary.)

For TSMC, this isn’t just an accidental phenomenon visible to the careful observer; it’s a conscious decision. Dr. Cheng-Ming Lin has been quoted several times at TSMC’s recent Technology Symposiums on the company’s deliberate decision to keep their processes going.

- In 2018: “We never shut down a fab. We returned a rented fab to the government once.”[16]

- In 2019: “Our commitment to legacy processes is unwavering. We have never closed a fab or shut down a process technology.”[17]

Paul McLellan commented on this in 2019:[18]

The strategy is to run mainline digital when a fab is new, and then find more lines of business to run in it as the volume of digital wafers moves to the next fab over the following years.

The fabless/foundry business model that TSMC pioneered has several advantages over what we just called the “semiconductor business model” and now say “integrated device manufacturer” or IDM. In the old model, where a semiconductor company had fabs, the whole mentality was to fill the fab exactly with lines of business. The company needed just the right amount of business to fill its fabs: not enough, and the excess depreciation was a financial burden, too much and they were on allocation and turning business away. When a fab got too far off the leading edge, there might not be enough business to run in it, and the fab would be closed. Or, in the days when many companies had both memory and logic businesses, they would run memory and then convert the fab to logic, and then eventually close it.

TSMC finds new lines of specialty technologies, finds new customers, and keeps the fabs going. Things like CMOS image sensors (CIS) or MEMS (micro-electrical-mechanical-systems such as pressure sensors). Adding new options to non-leading edge processes such as novel non-volatile memories.

It’s almost as if Henry Ford’s original factory for the Model T was still running, but now making turbine blades for the aerospace industry.

TSMC isn’t the only foundry with this strategy — although it’s so large that sometimes I just think of “TSMC” and “foundries” interchangeably. United Microelectronics Corporation, also located in Taiwan (UMC was ITRI’s first commercial spinoff in 1980, although it didn’t get into the pure-play foundry business until 1995), is the third-largest semiconductor foundry in market share after TSMC and Samsung, and it also posts similar information in its quarterly financials:

Plenty of mature nodes in the works, and although I haven’t crunched the numbers on UMC’s financials as carefully as TSMC’s, here’s a similar graph for the second quarter of each year between 2001 and 2022:

UMC’s revenue data has a little more fluctuation than TSMC’s — probably because UMC is a smaller foundry; the law of large numbers suggests more variability in companies with fewer customers — but it’s the same general pattern.

UMC publishes a few tidbits in its earnings reports that TSMC does not choose to share with us, all of which are interesting and lend a little bit more of a glimpse into the world of foundries:

- IDM revenue share

- utilization

- capacity by fab

The IDM revenue share is the percentage of revenue that comes from customers who have their own fabs (remember: IDM = integrated device manufacturer) but choose to outsource some of their wafer production to UMC. Because of this, the term “fab-lite” is more apt than IDM. At any rate, look at the fluctuation:

In the third quarter of 2006 this hit an all-time high of 44%. This was about the time of a very strong PC market that caught semiconductor manufacturers off guard[19] and was the main catalyst for the 2006-2009 DRAM crisis, which I mentioned in Part Two. UMC’s quarterly report states:[20]

The percentage of revenue from fabless customers decreased to 56% in 3Q06 from 63% in 2Q06 as several Asian fabless customers adjusted their inventory levels, although demand from IDM customers remained strong.

IDM revenue has made up a much lower fraction of UMC’s business in the past ten years or so.

Utilization measures how much of the available capacity is actually in use; UMC defines this as “Quarterly Wafer Out / Quarterly Capacity” (wafer shipments divided by wafer capacity).

Aside from the economic downturns in 2001 and 2008, UMC has managed to keep fab utilization high. In recent quarters, wafer shipments have been above wafer capacity; because of the urgency (and profitability) of getting product to customers during a time of shortage, the fabs have managed to squeak out a little bit more than their maximum capacity. (It is not at all obvious that it would be possible to go above 100% utilization; more on this in Part Five.)
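UMC's definition is just a ratio, but writing it down makes it obvious why nothing in the formula caps it at 100%. A minimal sketch follows; the quarterly numbers are hypothetical placeholders, not values from UMC's reports.

```python
def utilization(wafers_out, capacity):
    """UMC's definition: quarterly wafer shipments / quarterly wafer capacity."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return wafers_out / capacity

# Hypothetical quarters, in thousands of 8-inch-equivalent wafers:
# (wafers shipped, stated capacity)
quarters = {"downturn": (1000, 1500), "shortage": (2450, 2400)}

for name, (out, cap) in quarters.items():
    print(f"{name}: {utilization(out, cap):.0%}")
```

Whether a fab can physically exceed its stated capacity is a separate question from the arithmetic; the ratio only compares what shipped against what the company said it could make.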

UMC also states their wafer capacity by fab, with tables like these:

It’s not quite as informative as a breakdown of capacity by technology node, but we can graph this in 8” (200mm) equivalent capacity:

UMC’s Fab 12A is a huge catch-all fab complex (130nm – 14nm at present) with construction in phases, projected to eventually cover eight phases when fully built out. According to UMC’s website:

Fab 12A in Tainan, Taiwan has been in volume production for customer products since 2002 and is currently manufacturing 14 and 28nm products. The multi-phase complex is actually three separate fabs, consisting of Phases 1&2, 3&4, and 5&6. Fab 12A’s total production capacity is currently over 87,000 wafers per month.
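For the 8-inch-equivalent numbers, the conversion is by wafer area. Here is a minimal sketch, assuming the usual area-ratio convention (area scales with the square of the diameter) and assuming Fab 12A's quoted capacity is counted in 12-inch (300mm) wafers; the function name is made up for the example.

```python
def equivalent_wafers(count, from_mm, to_mm=200):
    """Convert a wafer count to an equivalent count at another diameter,
    scaling by wafer area (proportional to diameter squared)."""
    return count * (from_mm / to_mm) ** 2

# Fab 12A: 87,000 wafers per month, assumed to be 300mm wafers
fab12a_8in_equiv = equivalent_wafers(87_000, 300)
print(f"{fab12a_8in_equiv:,.0f} 8-inch-equivalent wafers per month")
```

One 12-inch wafer counts as 2.25 8-inch wafers under this convention, so a single large 300mm complex can outweigh several 200mm fabs on an equivalent-capacity graph.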

It would be interesting to see what their capacity is per node.

Or, perhaps more interesting: how does the average customer price per unit area of silicon depend on the technology node? Neither UMC nor TSMC publishes how many wafers it makes on each technology node. The best I could do was estimate from per-fab capacity data (TSMC published this from 2006 to 2014) that in 2012 TSMC’s 300mm fabs probably earned around 50% more per unit die area, on average, than the 200mm fabs; this average pricing premium increased to around 60% in 2013 and to around 85% in 2014, as more advanced nodes came online. Pricing at the leading edge is likely a lot higher, which skews revenue in favor of the latest nodes, even though (as I’ve now said several times) leading-edge nodes are only a small share of foundry volume.

TSMC did address this issue (more advanced nodes cost more) in its 2006 annual report (my emphasis):

Technology Migration. Since our establishment, we have regularly developed and made available to our customers manufacturing capabilities for wafers with increasingly higher circuit resolutions. Wafers designed with higher circuit resolutions can either yield a greater number of dies per wafer or allow these dies to be able to integrate more functionality and run faster in application. As a consequence, higher circuit resolution wafers generally sell for a higher price than those with lower resolutions. In addition, we began in November 2001 offering our customers production of 300mm wafers which can produce a greater number of dies than 200mm wafers. Advanced technology wafers have accounted for an increasingly larger portion of our sales since their introduction as the demand for advanced technology wafers has increased. Because of their higher selling price, advanced technology wafers account for a larger pro rata portion of our sales revenue as compared to their pro rata share of unit sales volume. The higher selling prices of semiconductors with higher circuit resolutions usually offset the higher production costs associated with these semiconductors once an appropriate economy of scale is reached. Although mainly dictated by supply and demand, prices for wafers of a given level of technology typically decline over the technology’s life cycle. Therefore, we must continue to offer additional services and to develop and successfully implement increasingly sophisticated technological capabilities to maintain our competitive strength.

But there’s not any quantitative information here… is a 28nm wafer 1.6× the cost per area of a 180nm wafer? or 5× the cost per area?

Oh, if we could only get some more specific information, some better quantitative insight into the variation in price and operating cost and profitability for the different foundry node geometries.... Presumably this is highly proprietary business information for any fab owner, foundry or not — but I do wish that after some period of time (10 years? 15 years?) these companies would publish historical information for us to better understand how the industry works, without significantly impairing any competitive business advantage.

There are certainly some unofficial outside estimates of cost per wafer; I’ll discuss one such reputable estimate in a later section on cost modeling. And there are periodic news rumors that can lead to a less-reputable estimate: for example, DigiTimes mentioned in a March 2022 article that TSMC’s 5nm wafer shipments were around 120,000 wafers per month and would be increasing to 150,000 wafers per month by the third quarter of 2022. If this is accurate, 120KWPM (360K wafers per quarter) represents nearly 10% of TSMC’s first-quarter 2022 shipments (3778K twelve-inch equivalent), but TSMC reported that 5nm made up 20% of 2022Q1 revenue: NT\$86.292 billion in 2022Q1’s Consolidated Financial Statements ≈ US\$3 billion, which works out to about \$8300 on average per 5nm wafer. TSMC’s total wafer revenue for 2022Q1 was NT\$438.64 billion ≈ US\$14.5 billion; divide by the 3778K 12” equivalent wafers shipped and we get US\$3800 per 12” wafer on average across all nodes. So 5nm earns an outsized share of revenue relative to the older nodes.
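That back-of-the-envelope estimate is easy to reproduce. The sketch below uses only the figures quoted above; the US dollar amounts are the same rough conversions, and the 120K wafers per month is still just a rumor, so treat the outputs as ballpark numbers.

```python
WAFERS_5NM_PER_MONTH = 120e3   # DigiTimes rumor, 5nm wafers per month
TOTAL_WAFERS_Q = 3778e3        # 2022Q1 shipments, 12-inch equivalent
REVENUE_5NM_NTD = 86.292e9     # 2022Q1 5nm revenue, NT$
REVENUE_TOTAL_NTD = 438.64e9   # 2022Q1 total wafer revenue, NT$
REVENUE_5NM_USD = 3.0e9        # rough conversion used in the text
REVENUE_TOTAL_USD = 14.5e9     # rough conversion used in the text

wafers_5nm_q = 3 * WAFERS_5NM_PER_MONTH            # wafers per quarter
volume_share = wafers_5nm_q / TOTAL_WAFERS_Q       # share of wafers shipped
revenue_share = REVENUE_5NM_NTD / REVENUE_TOTAL_NTD
price_5nm = REVENUE_5NM_USD / wafers_5nm_q         # US$ per 5nm wafer
price_avg = REVENUE_TOTAL_USD / TOTAL_WAFERS_Q     # US$ per wafer, all nodes

print(f"5nm volume share:  {volume_share:.1%}")
print(f"5nm revenue share: {revenue_share:.1%}")
print(f"5nm price per wafer:     ${price_5nm:,.0f}")
print(f"average price per wafer: ${price_avg:,.0f}")
print(f"5nm premium over average: {price_5nm / price_avg:.1f}x")
```

Under these assumptions a 5nm wafer brings in a bit more than twice the all-node average, which is the same thing as saying that roughly 10% of the wafers produce roughly 20% of the revenue.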

At any rate:

Leading-edge nodes are only a small share of foundry volume. And trailing-edge nodes keep on living, bringing in revenue.

Now, the big question to ask yourself is: with this sort of fab behavior — nothing old disappears, while new nodes keep on adding to capacity, becoming more profitable once equipment depreciation is completed — how have the foundries’ business models impacted the chip shortage?

Notes

[1] Ron Wilson, TSMC risk production: what does it mean for 28nm?, EDN, Aug 26 2009.

[2] W. J. Spencer and T. E. Seidel, International Technology Roadmaps: The U.S. Semiconductor Experience (Chapter 11), Productivity and Cyclicality in Semiconductors: Trends, Implications, and Questions: Report of a Symposium. Washington, DC: The National Academies Press, 2004.

[3] Michel-Dimitri Calvocoressi, M. Ravel Discusses His Own Work, Daily Telegraph, Jul 11 1931. Reprinted in A Ravel reader: correspondence, articles, interviews, 2003.

[4] Integrated Circuit Engineering Corporation, Cost Effective IC Manufacturing 1998-1999: Profitability in the Semiconductor Industry, 1997.

[5] The End of the Line, The Economist, vol. 336, no. 7923, Jul 15 1995.

[6] Eric Bogatin, Roadmaps of Packaging Technology: Driving Forces on Packaging: the IC, Integrated Circuit Engineering Corporation, 1997.

[7] Dylan McGrath, Analysis: More buyers seen for used fab tools, EE Times, Dec 22 2009.

[8] Scotten Jones, Leading Edge Foundry Wafer Prices, semiwiki.com, Nov 6 2020.

[9] David M. Byrne, Brian K. Kovak, Ryan Michaels, Offshoring and Price Measurement in the Semiconductor Industry, Measurement Issues Arising from the Growth of Globalization (conference), W.E. Upjohn Institute for Employment Research, Oct 30 2009.

[10] Leo Wong, High Performance Computing Surpasses Smartphones as TSMC’s Highest Revenue Earner for Q1 2022, Gizmochina, May 7 2022.

[11] John Ferguson, The Process Design Kit: Protecting Design Know-How, Semiconductor Engineering, Nov 8 2018.

[12] Ying Shih, The role of public policy in the formation of a business network, Centre for East and South-East Asian Studies Lund University, Sweden, 2010.

[13] Tekla S. Perry, Morris Chang: Foundry Father, IEEE Spectrum, Apr 19 2011.

[14] Mark LaPedus, Big Trouble At 3nm, Semiconductor Engineering, Jun 21 2018.

[15] Actually, if you want more precise numbers for revenue by process technology, you can look for TSMC’s consolidated financial statements as reviewed by independent auditors. From the 2022 Q2 consolidated financial statement:

I ran across these recently, after I had already typed in all the revenue percentage numbers from the past 20 years of quarterly management reports.

[16] Paul McLellan, TSMC’s Fab Plans, and More, Cadence Breakfast Bytes, May 7 2018.

[17] Tom Dillinger, 2019 TSMC Technology Symposium Review Part I, SemiWiki.com, Apr 30 2019.

[18] Paul McLellan, TSMC: Specialty Technologies, Cadence Breakfast Bytes, May 2 2019.

[19] Jeho Lee, The Chicken Game and the Amplified Semiconductor Cycle: The Evolution of the DRAM Industry from 2006 to 2014, Seoul Journal of Business, June 2015.

[20] United Microelectronics Corporation, 3Q 2006 Report, Oct 25 2006.

Supply Chain Games 2018: If I Knew You Were Comin’ I’d’ve Baked a Cake

Oh, I don’t know where you came from

‘Cause I don’t know where you’ve been

But it really doesn’t matter

Grab a chair and fill your platter

And dig, dig, dig right in— Al Hoffman, Bob Merrill, and Clem Watts, 1950

While you think about foundries and their business models in the back of your mind, let’s talk about today’s game.

Here we leave the 8-bit games of the 1980s behind; today’s computer games are behemoths in comparison. This one is called Supply Chain Idle and it runs in a web browser on a framework called Unity, probably using thousands of times as much memory and CPU power as Lemonade Stand and M.U.L.E. did on the 6502 and 6510 processors.

Thanks, Moore’s Law!

At any rate:

In the game of Supply Chain Idle, you are in charge of a town called Beginner Town. It has 20 industrial plots of land in a 5×4 grid interconnected by roads. You start out with four dollars in your pocket, and can buy apple farms and stores, each for a dollar. That’s enough for two apple farms and two stores. If you put them on the map and connect the apple farms and the stores, then the apple farms start making apples and trucks transport those apples to the stores to be sold.

At first, the rate of apple production is one apple every four seconds, which you can sell at the store for the lofty price of ten cents apiece, in batches of ten apples every five seconds.

With two apple farms and two stores:

- We can produce up to two apples every four seconds = 0.5 apples per second.

- We can sell up to 20 apples every five seconds = 4.0 apples per second

The stores can sell them faster than you can make them, so that means the apple farms are the bottleneck, and at ten cents each, we’re making five cents a second. After 20 seconds, we have another dollar, and can buy another apple farm or store.

We could fill the map with 10 apple farms and 10 stores. Apple farms would still be the bottleneck, but now we’d be making 25 cents a second.

Or we could be a bit smarter, and realize that with 18 apple farms and 2 stores:

- the farms produce 18 apples every 4 seconds = 4.5 apples per second

- the two stores could sell up to 20 apples / 5 seconds = 4.0 apples per second

In this case, the stores are the slight bottleneck, but now we can make money faster, selling apples at a total of \$0.40/second.
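The same bottleneck arithmetic can be written as a small function. This is only a sketch under the Level 0 numbers given above (one apple per farm every four seconds, ten apples per store every five seconds, ten cents per apple). The function name and its parameters are made up for illustration and are not part of the game, and the model ignores anything else the game may do, such as transport time.

```python
def apple_income_per_second(n_farms, n_stores,
                            farm_batch=1, farm_period=4.0,
                            store_batch=10, store_period=5.0,
                            price=0.10):
    """Income rate in dollars/second, limited by the slower of farms and stores."""
    produced = n_farms * farm_batch / farm_period      # apples/second grown
    sellable = n_stores * store_batch / store_period   # apples/second the stores can sell
    return min(produced, sellable) * price

# The three layouts discussed in the text
for farms, stores in [(2, 2), (10, 10), (18, 2)]:
    rate = apple_income_per_second(farms, stores)
    print(f"{farms:2d} farms, {stores:2d} stores: ${rate:.3f}/s")

# Sweep every way of splitting the 20 plots between farms and stores
best = max(range(1, 20), key=lambda f: apple_income_per_second(f, 20 - f))
print(f"best split in this model: {best} farms, {20 - best} stores")
```

In this simplified model the sweep suggests that 17 farms and 3 stores would edge out the 18/2 split (\$0.425/s versus \$0.40/s), because the third store removes the store bottleneck at the cost of only one farm. The difference is small either way.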

That’s still not very much. (Well, actually, it’s a lot. 40 cents a second = \$24 a minute, \$1440 an hour, \$34560 a day, or \$12.6 million a year. In real life, I’d take it and retire. But in the game, it isn’t very much.) To make more money in Supply Chain Idle, there are a number of methods:

-

Complete achievements. These are milestones like “sell 100 apples” or “get \$100 cash on hand”. Some of the achievements will raise prices by 1% or lower production times by 1%, either of which will increase the rate at which you make money.

-

Shop at the store and spend idle coins on speedups. If you have 100 idle coins, you can triple all prices. You can get idle coins by spending real money (PLEASE DON’T!) or by earning enough achievements… though that takes a long time. This is good for later in the game; save your idle coins for the super speed (10× production rate for 300 coins) if you can.

-

Build more resource producers. We’re already maxed out using all 20 spaces, so that won’t help.

-

Balance production with the rate at which it can be consumed and sold. Already done that for apples — but this is a big part of the game. In case you want to change your mind, Supply Chain Idle lets you sell back resource producers and stores for a full refund. (Try that in real life: good luck.)

-

Upgrade! Just like the Kittens Game, upgrading is a big part of Supply Chain Idle, and there are five ways to upgrade. Resource producers have productivity, speed, and quality levels, while stores have marketing and speed levels.

a. Increase productivity. Each of your resource producers has a productivity level, which increases the amount produced in each batch. For example, to go from Level 0 to Level 1 productivity in apples, you spend \$6 and go from 1 apple in 4 seconds (0.250 apples/second) to 2 apples in 6 seconds (0.333 apples/second), which is a 33.3% speedup.

b. Increase resource producer speed. This increases the speed each batch is produced. To go from Level 0 to Level 1 speed in apples, you spend \$5 and go from 1 apple in 4 seconds to 1 apple in 2 seconds, which is a 100% speedup.

c. Increase resource producer quality. This raises the price you can get from the store. To go from Level 0 to Level 1 quality in apples, the price increases from 10 cents to 13 cents each, which is a 30% price increase.

d. Increase marketing. This lets the stores sell more at a time; to go from Level 0 to Level 1 marketing, the store goes from a batch of 10 in 5 seconds (= 2/second) to a batch of 20 in 7.5 seconds (= 2.667/second), a 33.3% speedup.

e. Increase store speed. This lets the store sell each batch faster; to go from Level 0 to Level 1 speed, the store goes from a batch of 10 in 5 seconds to a batch of 10 in 2.5 seconds, a 100% speedup.

As with selling resource producers and stores, you can also downgrade any upgrade and get a full refund.

-

Make a new product! Once you sell apples, and have \$100 on hand, you can buy a sugar cane farm. Sugar cane can be produced starting at a batch of 12 in 2 seconds, with a store price of \$0.303… this is \$1.818 per second, much better than the \$0.025 per second you’ll get at the beginning from apples.
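The Level 0 to Level 1 figures quoted in the upgrade list, and the per-farm income comparison between apples and sugar cane, can be double-checked with a few lines of arithmetic. This is a sketch using only the batch sizes, times, and prices given above; `rate` and `pct_change` are helper names I made up, and the income figures assume the stores can sell everything a single farm produces.

```python
def rate(batch, period):
    """Units handled per second for a given batch size and batch time."""
    return batch / period

def pct_change(before, after):
    """Percentage increase from before to after."""
    return 100.0 * (after / before - 1.0)

# Level 0 -> Level 1 figures quoted in the upgrade list
upgrades = {
    "productivity":   (rate(1, 4), rate(2, 6)),       # apples/second per farm
    "producer speed": (rate(1, 4), rate(1, 2)),       # apples/second per farm
    "quality":        (0.10, 0.13),                   # dollars per apple
    "marketing":      (rate(10, 5), rate(20, 7.5)),   # units/second per store
    "store speed":    (rate(10, 5), rate(10, 2.5)),   # units/second per store
}
for name, (before, after) in upgrades.items():
    print(f"{name:15s} {before:6.3f} -> {after:6.3f}  ({pct_change(before, after):+.1f}%)")

# Per-farm income at the starting levels (assumes stores keep up)
apple_income = rate(1, 4) * 0.10     # dollars/second
cane_income = rate(12, 2) * 0.303    # dollars/second
print(f"apples: ${apple_income:.3f}/s, sugar cane: ${cane_income:.3f}/s")
```

Note that the productivity and marketing upgrades raise the batch size but also lengthen the batch time, which is why doubling the batch only yields a 33.3% gain in throughput.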

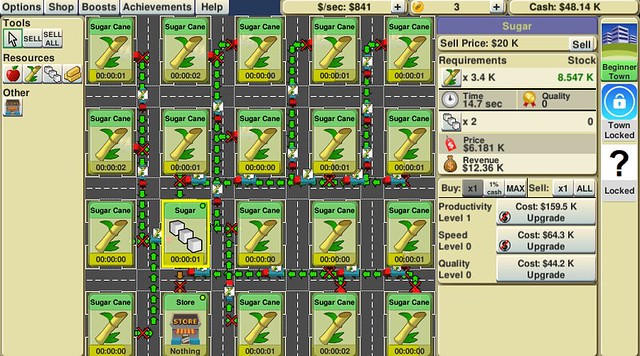

Sugar cane is produced in much higher quantities per second than apples, so the balance between sugar cane farms and stores is different; at one point it looked like I needed about 3 stores for each sugar cane farm. This can change with resource producer or store upgrades, but at some point the cost of the upgrades becomes too high and you’re stuck trying to figure out how to make do with the money you have.

Each time you sell the first unit of a new product, it unlocks the next product. After sugar cane is sugar, which shows one more game dynamic: there are raw resource producers (like apple farms and sugar cane farms), which need no production inputs, and resource processors (like sugar mills), which need inputs taken from other resource producers. Sugar production needs sugar cane as an input, so the supply chain here goes sugar cane → sugar → \$\$\$. At the time I started sugar production in this game, a sugar mill cost \$20,000 and turned 1700 units of sugar cane into one unit of sugar in about 10 seconds, which I could sell for \$6072, or about \$607/second.
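To put the sugar mill numbers in per-second terms, here is a quick sketch. The inputs are the figures from the paragraph above; the variable names are illustrative, and the income and payback numbers assume the mill is kept fully supplied and never waits for sugar cane.

```python
# Figures from the text; names are illustrative only.
mill_cost = 20_000        # dollars per sugar mill
cane_per_batch = 1700     # units of sugar cane consumed per batch
batch_time = 10.0         # seconds per batch (approximate)
sugar_price = 6072        # dollars per unit of sugar

income_per_second = sugar_price / batch_time          # assumes the mill never waits
cane_needed_per_second = cane_per_batch / batch_time  # supply needed to avoid waiting
payback_seconds = mill_cost / income_per_second

print(f"income: ${income_per_second:.0f}/s")
print(f"sugar cane needed: {cane_needed_per_second:.0f} units/s")
print(f"payback time: {payback_seconds:.0f} s")
```

The mill's appetite for sugar cane is the important number here: whatever the farms' upgrade levels are, their combined output has to reach the mill's required input rate, or the mill sits idle part of the time.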

The game warns you with a little colored dot in the upper right hand corner of a grid square if the supply chain is not well-balanced in the store or a downstream production step. Stores show orange or red dots if they can’t keep up with the rate of product being added. Resource processors (like sugar mills) show orange or red dots if they can’t get enough inputs to keep producing outputs and have to wait for the inputs to arrive.

The supply chain shown above (one sugar mill supplied from one sugar cane farm) is poorly balanced; here’s a better one with one sugar mill and 18 sugar cane farms, producing \$841/s:

With two sugar mills each supplied by 8 sugar cane farms, I can get \$1266/s even though the sugar mills are a little undersupplied (the color indicator is yellow rather than green):

The upgrade prices go up roughly exponentially, and by the time you have enough money to buy the next resource producer, it’s usually worth it to switch right away. You’ll note that there are no apple farms in the last few images, and as soon as sugar mills are in the mix, it’s no longer worth selling the sugar cane directly to the store — instead, all the sugar cane should be provided as input to the sugar mills.

Next come lumber mills (\$7 million each; not sure how they churn out lumber without any input from trees… but that’s the way it goes in Beginner Town!) and after that, paper carton plants, at \$3 billion apiece. Sometimes the mills are the bottleneck and sometimes stores are the bottleneck; the game can shift very quickly.

After the first few resource producers, a lot of the game time involves waiting — which is why it is an idle game rather than something that should be actively played — and the big question becomes: what upgrade should be done next? I don’t have a good general strategy for this; it just takes some effort to figure out what upgrade gives the biggest bang for the buck. Quality upgrades are worth pursuing, even the lower ones which make less of a difference: for example, upgrading paper carton plant quality will make more of a difference than upgrading the lumber mill quality in the final price of paper cartons, but both are still worth doing.

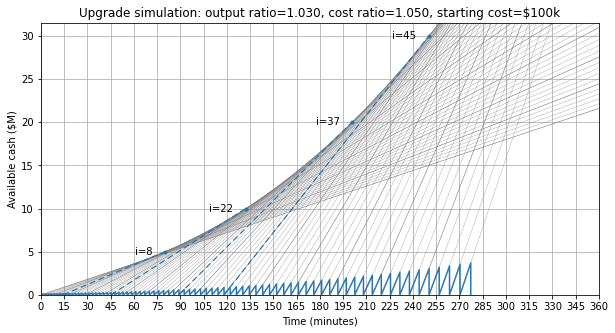

If you hover over any of the upgrade buttons, the game will tell you what effect it will have in advance. For example here I’ve upgraded the various factories in Beginner Town so that I’m making \$5.283 billion per second, and the least expensive upgrade will be a quality upgrade for the paper carton plant, which will bump up the price of paper cartons by 30% — so now I can earn \$6.867 billion per second — but that upgrade will cost me \$303.1 billion. I have \$191.2 billion in cash now, so that will take me another 21 seconds to afford the upgrade, and I’ll need to spend all my remaining cash. Is it worth it?

Well, the next major hurdle in the game is buying an apple juice factory for \$6 trillion (I kid you not). If I don’t do the quality upgrade, it will take me about 1100 seconds, or about 18 minutes, to get there. If I do the quality upgrade, it will take me 21 seconds to afford the upgrade, and then about another 14.5 minutes to earn the \$6 trillion, since the upgrade uses up all my cash: roughly 15 minutes in total. Definitely worth the upgrade.

If you want to see this graphically, here it is; there’s a breakeven point at about 3.5 minutes where the upgrade gets us further.

```python
import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline
# (the line above is a Jupyter magic; omit it outside a notebook)

R1 = 5.283e9              # production rate before upgrade
C0 = 191.2e9              # starting cash
U2 = 303.1e9              # cost to upgrade quality
Ctarget = 6e12            # target cost
R2 = R1 * (61.68/47.45)   # production rate after upgrade

tu = (U2-C0)/R1           # time until the upgrade is affordable
t1 = (Ctarget-C0)/R1      # time to reach the target without upgrading
t2 = Ctarget/R2           # time to reach the target after upgrading (cash restarts at 0)

fig = plt.figure(figsize=(8,5))
ax = fig.add_subplot(1,1,1)
ax.plot(np.array([0, tu, tu, tu+t2])/60,
        np.array([C0, U2, 0, Ctarget])/1e9,
        '-',label='upgrade')
ax.plot(np.array([0, t1])/60,
        np.array([C0, Ctarget])/1e9,
        '--',label='no upgrade')
ax.grid(True)
ax.set_xlabel('Time (minutes)')
ax.set_ylabel(r'Available cash (\$B)')
ax.set_xticks(np.arange(0,20,2))
ax.set_xlim(0,20)
ax.legend();
```
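The breakeven point can also be solved directly rather than read off the graph. This is a small sketch using the same constants as the plotting code; the algebra is mine, and it assumes income stays constant at each rate and that buying the upgrade drops cash to exactly zero.

```python
# Same constants as the plotting code above.
R1 = 5.283e9                # income before upgrade, dollars/second
R2 = R1 * (61.68 / 47.45)   # income after upgrade (same ratio as the plotting code)
C0 = 191.2e9                # cash on hand
U2 = 303.1e9                # upgrade cost
Ctarget = 6e12              # price of the apple juice factory

t_upgrade = (U2 - C0) / R1                  # wait until the upgrade is affordable
t_no_upgrade = (Ctarget - C0) / R1          # time to target without upgrading
t_with_upgrade = t_upgrade + Ctarget / R2   # time to target with the upgrade

# Breakeven: cash without upgrade equals cash with upgrade
#   C0 + R1*t == R2*(t - t_upgrade)   =>   t = (C0 + R2*t_upgrade) / (R2 - R1)
t_breakeven = (C0 + R2 * t_upgrade) / (R2 - R1)

print(f"no upgrade:   {t_no_upgrade / 60:.1f} min")
print(f"with upgrade: {t_with_upgrade / 60:.1f} min")
print(f"breakeven:    {t_breakeven / 60:.1f} min")
```

Any goal that takes longer than the breakeven time to reach favors buying the upgrade first; a goal closer than that would be reached sooner by just saving up.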

(This game suffers from the “–illions inflation” of many idle games, something I mentioned in Zebras Hate You For No Reason. You just have to ignore the implausibility if you want to have fun.)

Anyway, after a short time, I do have the \$6T to afford an apple juice factory, and I start building. This is the first complex supply chain:

- apple juice ← (apples, sugar, paper carton)

- sugar ← sugar cane

- paper carton ← lumber

The setup I have shown here is not very good, and the apple juice factory has a red dot, which means I’m not utilizing the factory very well. Maximizing profit in this game is all about balancing the supply chain, and we’re not. The “Stock+Supply” panel tells us a couple of things here:

-

Each supply input for apple juice has an ideal rate to keep the apple juice factory at full utilization. The game shows you the supply rate of each input relative to full utilization, as a percentage. Right now we’re providing 225% of that ideal rate in paper cartons, 30% of the ideal rate in sugar, and 19% of the ideal rate in apples. That leaves the utilization of the apple juice factory at only 19%, and means that it’s waiting 81% of the time. Apples are the bottleneck, so if we want more apple juice, we need to increase apple production. Supply rate percentages are shown in red if less than 100%, green otherwise.

-

With current production rates, the game recommends supplying each apple juice factory with 6 apple farms, 3 sugar mills, and 1/5 of a paper carton plant. (Another way of thinking about it: a paper carton plant can supply 5 apple juice factories.)

-

The amount of each input needed for one batch of output is shown. (For example, 11 million apples, 27 thousand units of sugar, and 3.1 million paper cartons, which will get us 2 million cartons of apple juice, currently priced at \$7.115 million each, or \$14.23 trillion per batch.)

-

The amount of each input in stock is also shown. (For example, there are 2.045 million apples, 34.34 thousand units of sugar, and 117 million paper cartons.) If the amount in stock is sufficient to make the next batch, it’s shown in green, otherwise it’s shown in red.
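The panel readings above can be reproduced with a short sketch. It assumes that factory utilization is simply set by the scarcest input (capped at 100%), which matches the 19% figure quoted above but is my reading of the panel, not documented game logic. The dictionary names are made up for illustration.

```python
# Supply rates from the "Stock+Supply" panel, as a fraction of the ideal rate
supply_ratio = {"paper carton": 2.25, "sugar": 0.30, "apples": 0.19}

# Assumption: the factory runs no faster than its scarcest input allows
bottleneck = min(supply_ratio, key=supply_ratio.get)
utilization = min(1.0, supply_ratio[bottleneck])
print(f"bottleneck: {bottleneck}, utilization {utilization:.0%}, "
      f"waiting {1 - utilization:.0%}")

# Does the stock on hand cover the next batch? (green vs. red in the panel)
needed = {"apples": 11e6, "sugar": 27e3, "paper carton": 3.1e6}
in_stock = {"apples": 2.045e6, "sugar": 34.34e3, "paper carton": 117e6}
for item in needed:
    status = "enough" if in_stock[item] >= needed[item] else "short"
    print(f"{item:12s} {in_stock[item]:>13,.0f} / {needed[item]:>13,.0f}  {status}")

# Value of one batch of apple juice
batch_value = 2e6 * 7.115e6   # cartons per batch * dollars per carton
print(f"batch value: ${batch_value / 1e12:.2f} trillion")
```

Only apples come up short for the next batch here, which agrees with apples being the input that limits utilization.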