Jaywalking Around the Compiler

Our team had another code review recently. I looked at one of the files, and bolted upright in horror when I saw a function that looked sort of like this:

    void some_function(SOMEDATA_T *psomedata)
    {
        asm volatile("push CORCON");
        CORCON = 0x00E2;
        do_some_other_stuff(psomedata);
        asm volatile("pop CORCON");
    }

There is a serious bug here — do you see what it is?

Stacking the Deck Against Yourself

OK, first, what is this function doing? The C code in question was written for use on a Microchip dsPIC33E device. The CORCON register in dsPIC33E devices is the “Core Control Register”: a set of mode bits that controls a bunch of miscellaneous things that the processor does. Some of the bits control DSP instructions; the E2 content essentially tells the processor (not the compiler) to turn on arithmetic saturation and rounding, and use fractional multiplication, when working with the DSP accumulators.
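To make "saturation, rounding, and fractional multiplication" a little more concrete, here is a plain-C sketch of a Q15 fractional multiply that runs on any host. It only illustrates the arithmetic; it is not a bit-exact model of the dsPIC's accumulators, and the function name `q15_mul_sat_round` is made up for this example.

```c
#include <stdint.h>

/* Hypothetical helper (not part of any Microchip library): multiply two
 * Q15 fractions (range [-1, 1)), round to nearest, and saturate. */
int16_t q15_mul_sat_round(int16_t a, int16_t b)
{
    /* Q15 * Q15 gives a Q30 product; it always fits in 32 bits. */
    int32_t product = (int32_t)a * (int32_t)b;

    /* Add half an LSB of the Q15 result, then drop the 15 extra fraction
     * bits. Assumes >> on a negative value is an arithmetic shift, which
     * is implementation-defined in C but true of mainstream compilers. */
    int32_t rounded = (product + ((int32_t)1 << 14)) >> 15;

    /* Saturate instead of wrapping: -1.0 * -1.0 would be +1.0, which
     * Q15 cannot represent. */
    if (rounded > INT16_MAX) return INT16_MAX;
    if (rounded < INT16_MIN) return INT16_MIN;
    return (int16_t)rounded;
}
```

With this sketch, 0.5 × 0.5 (16384 × 16384) gives 8192, which is 0.25 in Q15, and −1.0 × −1.0 saturates to 32767 rather than wrapping around to −32768.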

So the intent of the code was roughly as follows:

- save the CORCON register on the stack

- configure CORCON to turn on saturation and rounding, and use fractional multiplication for certain DSP instructions

- perform some computations

- restore the CORCON register from the stack

But it won’t work as intended. There are a few bugs here.

One is that by setting CORCON = 0x00E2, all the bits of CORCON are being set a specific way, including some which affect the system interrupt priority level and how DO loops behave.

What the code should have done is set or clear only the relevant bits of CORCON, and leave the other bits alone.
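A minimal sketch of that read-modify-write idea, written so it can run on a host: `fake_corcon` is a stand-in variable rather than the real SFR, and the mask is my reading of the dsPIC33E bit layout (SATA, SATB, SATDW, ACCSAT in bits 7 to 4, RND in bit 1, IF in bit 0), so check it against the device data sheet before relying on it.

```c
#include <stdint.h>

/* Stand-in for the real CORCON register so the sketch runs on a host.
 * On the target you would use the CORCON declaration from <xc.h>. */
volatile uint16_t fake_corcon;

/* ASSUMED bit positions (verify against the data sheet):
 * SATA=7, SATB=6, SATDW=5, ACCSAT=4, RND=1, IF=0. */
#define DSP_MODE_MASK  0x00F3u  /* the bits we intend to control */
#define DSP_MODE_VALUE 0x00E2u  /* the setting used in the article */

/* Change only the DSP mode bits; every other bit keeps its old value.
 * Returns the previous register contents so the caller can restore it. */
uint16_t set_dsp_mode(void)
{
    uint16_t old = fake_corcon;                        /* one volatile read  */
    uint16_t updated = (uint16_t)((old & ~DSP_MODE_MASK)
                                  | (DSP_MODE_VALUE & DSP_MODE_MASK));
    fake_corcon = updated;                             /* one volatile write */
    return old;
}
```

The returned value can later be written back to restore the previous setting.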

The more serious bug has to do with the way that CORCON is saved and restored, and the way that the compiler interacts with inline assembly code.

You see, you have to be really careful when you work with inline assembly in C. It’s kind of like performing heart surgery… while the patient is still awake and walking… and the patient isn’t aware of the surgeon. Well, I hope that scared you a bit, but that really doesn’t explain the danger very well. So here goes: the important things to note are that certain parts of the CPU and memory, like the stack, are managed by the compiler, and that there are certain things that the compiler assumes. These words managed and assumes are important, and as I’m writing this, they trigger certain associations for me.

- managed — You don’t get to touch the stack. The compiler owns the stack. The stack is not something you can read or write directly. Hands off. The compiler will hurt you if you play around with the stack. Please stay off the stack. Do not depend on reading the contents of the stack. Please don’t monkey around with the stack. It’s up to the compiler to use the stack. Leave the stack alone. Be very, very, afraid of C code that manipulates the stack. Don’t even think about it. Danger, keep out.

(Actually, managed makes me think of managed code from around the 2005-2008 era, namely that it was some weird Microsoft feature jargon of C++ or .NET that made no sense, and made me just want to stay away. Microsoft is going to manage something for me, and protect me from myself, okay, what is it, a bunch of things in square brackets, why exactly would I want to use this?)

The stack is an implementation detail of the output program generated by the compiler, a section of memory reserved for the compiler as a LIFO data structure with a thing called the stack pointer which keeps track of the top of the stack. As the compiler generates code to save temporary data, it puts it on the stack and moves the stack pointer, until later when it no longer needs that data and it restores the stack pointer back. Strictly speaking, the compiler could use a completely different mechanism than the CPU stack, and often it optimizes things and places local variables in registers. If you write code like the excerpt below, you have no idea where the variable thing may be placed:

    int foo(int a)
    {
        int thing = a*a;
        thing += do_some_stuff(a);
        return thing;
    }

It might be on the stack. It might be in a register. The compiler could dynamically allocate memory (but probably won’t) or stick it in some other predefined place in memory (though almost certainly won’t). You have no way of knowing, without looking at what the compiler is doing. The compiler has to behave a certain predefined way, and we’re told that it typically uses a stack for local variables, but that’s up to it to decide.

- assumes — Not only does the compiler manage the stack for you, but it also assumes that it is the sole owner of the stack. It assumes certain things about inline assembly code, namely that it has no responsibility to check what that code is doing, and that you, as a programmer, are responsible for making sure you are cooperating properly with the compiler. Technically speaking, unless you interface properly with assembly code (as in GCC extended ASM syntax), you can barely do anything. The compiler treats what you write as a black box and assumes you aren’t touching anything you shouldn’t. Which includes the stack. (Did I mention yet that you shouldn’t modify the stack?)

What this means is processor- and compiler-dependent; let’s look at the XC16 C Compiler User’s Guide, which has the following content in section 12.3 — with my emphasis on managed and assumes:

12.3 CHANGING REGISTER CONTENTS

The assembly generated from C source code by the compiler will use certain registers that are present on the 16-bit device. Most importantly, the compiler assumes that nothing other than code it generates can alter the contents of these registers. So if the assembly loads a register with a value and no subsequent code generation requires this register, the compiler will assume that the contents of the register are still valid later in the output sequence.

The registers that are special and which are managed by the compiler are: W0-W15, RCOUNT, STATUS (SR), PSVPAG and DSRPAG. If fixed point support is enabled, the compiler may allocate A and B, in which case the compiler may adjust CORCON.

The state of these register must never be changed directly by C code, or by any assembly code in-line with C code. The following example shows a C statement and in-line assembly that violates these rules and changes the ZERO bit in the STATUS register.

    #include <xc.h>
    void badCode(void)
    {
        asm ("mov #0, w8");
        WREG9 = 0;
    }

The compiler is unable to interpret the meaning of in-line assembly code that is encountered in C code. Nor does it associate a variable mapped over an SFR to the actual register itself. Writing to an SFR register using either of these two methods will not flag the register as having changed and may lead to code failure.

This all sounds fine and dandy, but what does it really mean?

Three Wrongs Make a Disaster

Let’s look at another case where an autonomous entity assumed something. You might have heard about the fatality that occurred on March 18, 2018, in Tempe, Arizona, involving a self-driving vehicle owned by Uber Technologies, Inc., and a pedestrian. You hear “pedestrian” and “self-driving vehicle”, and maybe it conjures a vision of some happy-go-lucky guy walking across a busy downtown street, just before an approaching self-driving car decides to slam into him. Well, this is not that. Here’s a recent article from the Washington Post about the March 2018 accident, with my emphasis on “assume”:

However, documents released Tuesday by the National Transportation Safety Board show Uber’s self-driving system was programmed using faulty assumptions about how some road users might behave. Despite having enough time to stop before hitting 49-year-old Elaine Herzberg — nearly 6 seconds — the system repeatedly failed to accurately classify her as a pedestrian or to understand she was pushing her bike across lanes of traffic on a Tempe, Ariz., street shortly before 10 p.m..

Uber’s automated driving system “never classified her as a pedestrian — or predicted correctly her goal as a jaywalking pedestrian or a cyclist” — because she was crossing in an area with no crosswalk, NTSB investigators found. “The system design did not include a consideration for jaywalking pedestrians.”

The system vacillated on whether to classify Herzberg as a vehicle, a bike or “an other,” so “the system was unable to correctly predict the path of the detected object,” according to investigative reports from the NTSB, which is set to meet later this month to issue its determination on what caused Herzberg’s death.

In one particularly problematic Uber assumption, given the chaos that is common on public roads, the system assumed that objects categorized as “other” would stay where they were in that “static location.”

That is, the autonomous vehicle had certain rules: it assumed that detected objects fell into a certain set of fixed foreseeable behaviors.

Assumed?

Autonomous vehicles and compilers aren’t really capable of assuming anything. That’s not something that deterministic systems do, no matter how much we might anthropomorphize them. They contain preconditions and assertions. Rather, their system designers are the ones doing the assuming. (I was going to say that the autonomous vehicle assumed there was no such thing as a jaywalking pedestrian, or that it assumed no pedestrian would cross a busy street, but such statements imply that the designers were aware of such possibilities and programmed their system to include these concepts but reject using them. Much more likely is that they were just outside the set of potential behaviors programmed into the system.)

For the record, the NTSB reports on the March 2018 crash, notably the Vehicle Automation Report, the Highway Group Factual Report, the Human Performance Group Chairman’s Factual Report, and the Onboard Image & Data Recorder Group Chairman’s Factual Report, did not use the word “assume” when referring to the automated driving system (ADS):

… However, certain object classifications—other—are not assigned goals. For such objects, their currently detected location is viewed as a static location; unless that location is directly on the path of the automated vehicle, that object is not considered as a possible obstacle....

…

At the time when the ADS detected the pedestrian for the first time, 5.6 seconds before impact, she was positioned approximately in the middle of the two left turn lanes (see figure 3). Although the ADS sensed the pedestrian nearly 6 seconds before the impact, the system never classified her as a pedestrian—or predicted correctly her goal as a jaywalking pedestrian or a cyclist—because she was crossing the N. Mill Avenue at a location without a crosswalk; the system design did not include a consideration for jaywalking pedestrians. Instead, the system had initially classified her as an other object which are not assigned goals. As the ADS changed the classification of the pedestrian several times—alternating between vehicle, bicycle, and an other— the system was unable to correctly predict the path of the detected object.

I, on the other hand, have no problem using the word “assume” — not because I want to imply any sort of volition, or to be perfectly technically accurate, but because we can use it as a shorthand for making conclusions based on a limited set of data and a simplified set of rules.

In any case, this accident was a tragedy with many contributing factors — a street in an urban industrial area near an elevated highway, at night, with a 45 mile-per-hour speed limit; a pedestrian who decided to cross this street, wearing dark clothing and walking with a bicycle in an area not designed for pedestrian crossing; a system programmed with certain questionable assumptions; a human “operator” who could have taken actions to stop the car, but who was glancing down inside the car and watching streaming video of NBC’s The Voice. The ones most relevant to my theme of assumptions are that

- the pedestrian assumed it was safe enough to cross at a location with no crosswalk — and presumably that if a car did approach, its driver would see her and avoid a collision.

- the vehicle assumed the detected object was not a pedestrian crossing the road, and that the object’s trajectory was not one that would result in a collision.

- the operator assumed it was safe to watch streaming video and rely on the vehicle to drive itself.

All three assumptions were wrong, and a collision occurred.

Arizona Revised Statutes, Title 28 - Transportation

28-793. Crossing at other than crosswalk

- A pedestrian crossing a roadway at any point other than within a marked crosswalk or within an unmarked crosswalk at an intersection shall yield the right-of-way to all vehicles on the roadway.

- A pedestrian crossing a roadway at a point where a pedestrian tunnel or overhead pedestrian crossing has been provided shall yield the right-of-way to all vehicles on the roadway.

- Between adjacent intersections at which traffic control signals are in operation, pedestrians shall not cross at any place except in a marked crosswalk.

28-794. Drivers to exercise due care

Notwithstanding the provisions of this chapter every driver of a vehicle shall:

Exercise due care to avoid colliding with any pedestrian on any roadway.

Give warning by sounding the horn when necessary.

Exercise proper precaution on observing a child or a confused or incapacitated person on a roadway.

Collisions in Assembly-land

Back to our CORCON example. The PUSH and POP instructions cause the stack pointer W15 to be modified and data written to and read from the stack. But the compiler manages the stack pointer W15, and uses it however it sees fit, assuming no one else is going to modify W15 or the memory it points to. It also uses register W14 as a frame pointer using the LNK and ULNK instructions. (If you want more of the technical details, look at the Programmer’s Reference Manual.)

Let’s look at a simple example. I’m using XC16 1.41:

    import pyxc16

    for optlevel in [1,0]:
        print("// --- -O%d ---" % optlevel)
        pyxc16.compile('''
    #include <stdint.h>

    int16_t add(int16_t a, int16_t b)
    {
        return a+b;
    }
    ''', '-c','-O%d' % optlevel)

    // --- -O1 ---
    _add:
            add w1,w0,w0
            return
    // --- -O0 ---
    _add:
            lnk #4
            mov w0,[w14]
            mov w1,[w14+2]
            mov [w14+2],w0
            add w0,[w14],w0
            ulnk
            return

The compiler output is quite a bit different, depending on whether we turn optimization off or on.

With optimization on (-O1) the compiler can reduce this function to a single ADD instruction, and the stack isn’t used at all except for the return address (CALL pushes the return address onto the stack; RETURN pops it off.)

With optimization off (-O0), the compiler takes the following steps:

- it allocates a new stack frame of 4 bytes using the LNK instruction, which pushes the old frame pointer W14 onto the stack, copies the stack pointer into W14 as the new frame pointer, and allocates 4 additional bytes on the stack

- it copies the first argument a from W0 to its place in the stack frame [W14], and copies the second argument b from W1 to its place in the stack frame [W14+2]

- it performs the required computation, taking its inputs from those places (a = [W14] and b = [W14+2]) and puts the result into W0

- it deallocates the stack frame with ULNK

A lot of this work is unnecessary, but that’s what happens with -O0; you’ll see a bunch of mechanical, predictable, and safe behavior to implement what the C programmer requests.
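The frame bookkeeping in those steps can be sketched as a toy model in host C. The `toy_cpu` struct and the `lnk`/`ulnk` helpers are invented for illustration and simply follow the description of LNK and ULNK above; this is not an emulator, and the starting addresses are arbitrary.

```c
#include <stdint.h>

#define STACK_BASE 0x1000u

/* Toy model of the stack-related state. Addresses are byte addresses
 * and the stack grows upward. */
typedef struct {
    uint16_t mem[32];  /* one entry per 16-bit word, starting at STACK_BASE */
    uint16_t w14;      /* frame pointer */
    uint16_t w15;      /* stack pointer */
} toy_cpu;

static uint16_t *word_at(toy_cpu *c, uint16_t addr)
{
    return &c->mem[(addr - STACK_BASE) / 2];
}

/* LNK #n: push the old FP, set FP = SP, then reserve n bytes. */
void lnk(toy_cpu *c, uint16_t n)
{
    *word_at(c, c->w15) = c->w14;
    c->w15 += 2;
    c->w14 = c->w15;
    c->w15 += n;
}

/* ULNK: release the frame and restore the caller's FP. */
void ulnk(toy_cpu *c)
{
    c->w15 = c->w14;
    c->w15 -= 2;
    c->w14 = *word_at(c, c->w15);
}

/* Mirrors the -O0 listing of add(): lnk #4, spill a and b, add, ulnk. */
int16_t toy_add_O0(toy_cpu *c, int16_t a, int16_t b)
{
    uint16_t w0;

    lnk(c, 4);
    *word_at(c, c->w14) = (uint16_t)a;                    /* mov w0,[w14]    */
    *word_at(c, (uint16_t)(c->w14 + 2)) = (uint16_t)b;    /* mov w1,[w14+2]  */
    w0 = *word_at(c, (uint16_t)(c->w14 + 2));             /* mov [w14+2],w0  */
    w0 = (uint16_t)(w0 + *word_at(c, c->w14));            /* add w0,[w14],w0 */
    ulnk(c);
    return (int16_t)w0;
}
```

After `toy_add_O0` returns, `w14` and `w15` are back at the values they had on entry, which is the property the compiler relies on.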

Now we’re going to spice it up by adding some jaywalking to mess with CORCON in inline assembly.

    for optlevel in [1,0]:
        print("// --- -O%d ---" % optlevel)
        pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    int16_t add(int16_t a, int16_t b)
    {
        asm volatile("\n_l1:\n push CORCON\n_l2:");
        CORCON = 0x00e2;
        int16_t result = a+b;
        asm volatile("\n_l3:\n pop CORCON\n_l4:");
        return result;
    }
    ''', '-c','-O%d' % optlevel)

    // --- -O1 ---
    _add:
    _l1:
            push CORCON
    _l2:
            mov #226,w2
            mov w2,_CORCON
    _l3:
            pop CORCON
    _l4:
            add w1,w0,w0
            return
    // --- -O0 ---
    _add:
            lnk #6
            mov w0,[w14+2]
            mov w1,[w14+4]
    _l1:
            push CORCON
    _l2:
            mov #226,w0
            mov w0,_CORCON
            mov [w14+2],w1
            mov [w14+4],w0
            add w1,w0,[w14]
    _l3:
            pop CORCON
    _l4:
            mov [w14],w0
            ulnk
            return

Here I’ve added labels _l1 through _l4 to help capture what’s going on at certain instants.

Now, the -O1 case is fairly easy to understand; here we push CORCON onto the stack, write E2 = 226 into CORCON, pop CORCON back off the stack, then do our adding operation.

Huh?

The C code asked the compiler to add a+b in between the PUSH and POP instructions. So that’s another bug, not in the compiler but in the way we interact with it. The compiler assumes it can reorder certain things; it doesn’t know what you’re trying to do with this inline assembly, it just knows that you want to return a+b, and that’s what it does. We’ll look at that again in a bit. (Yes, I know that the ADD instruction isn’t affected by CORCON, but if we were using a DSP instruction like MPY or MAC, then this bug could produce incorrect behavior.)

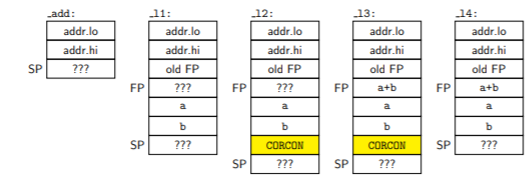

The -O0 case is a little more involved, and it does do the math between labels _l2 and _l3 after setting CORCON to E2. Here’s what the stack looks like at the different instants:

The yellow cells are what we’ve manually added via inline assembly; the rest of them have been handled by the compiler. “FP” stands for frame pointer (W14 in the dsPIC) and “SP” for stack pointer (W15); the “addr.lo” and “addr.hi” content is the return address which has been placed onto the stack when add() is reached via a CALL instruction.

The stack on the dsPIC grows upwards in memory with PUSH and LNK instructions, and contains either allocated or unallocated data:

- content at addresses below the stack pointer is allocated: the compiler (or us, if we’ve been foolish enough to try to use it) will modify this content only if it is intentionally changing a particular value that has been allocated on the stack, and it is expected to deallocate this content when it is done using it, by restoring the stack pointer to its former location.

- content at addresses at or above the stack pointer is unallocated: the compiler is allowed to use this for temporary data, and can allocate and deallocate memory. We can’t assume we know the content of unallocated data, because an interrupt could have occurred at any instant, and allocated/used/deallocated memory on the stack. I have marked all unknown content with ???.

So even though we just had the saved value of CORCON on the stack at _l3, we aren’t allowed to assume that this value will still be there at _l4.

Otherwise, no problems here; the compiler does its thing and we do ours, and all is good, right? Just like running across the street when cars aren’t coming.

Let’s raise it up another notch: below is a function foo() which is just like add() but it calls some external function munge() to modify the result a+b before returning it. If you want to test it yourself, create a different file that contains something like

    #include <stdint.h>

    void munge(int16_t *px)
    {
        (*px)++; // add 1 to whatever px points to
    }

    for optlevel in [0,1]:
        print("// --- -O%d ---" % optlevel)
        pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    void munge(int16_t *px);

    int16_t foo(int16_t a, int16_t b)
    {
        asm volatile("\n_l1:\n push CORCON\n_l2:");
        CORCON = 0x00e2;
        int16_t result = a+b;
        asm volatile("\n_l3:");
        munge(&result);
        asm volatile("\n_l4:\n pop CORCON\n_l5:");
        return result;
    }
    ''', '-c','-O%d' % optlevel)

    // --- -O0 ---
    _foo:
            lnk #6
            mov w0,[w14+2]
            mov w1,[w14+4]
    _l1:
            push CORCON
    _l2:
            mov #226,w0
            mov w0,_CORCON
            mov [w14+2],w1
            mov [w14+4],w0
            add w1,w0,w0
            mov w0,[w14]
    _l3:
            mov w14,w0
            rcall _munge
    _l4:
            pop CORCON
    _l5:
            mov [w14],w0
            ulnk
            return
    // --- -O1 ---
    _foo:
            lnk #2
    _l1:
            push CORCON
    _l2:
            mov #226,w2
            mov w2,_CORCON
            add w1,w0,w1
            mov w1,[w15-2]
    _l3:
            dec2 w15,w0
            rcall _munge
    _l4:
            pop CORCON
    _l5:
            mov [w15-2],w0
            ulnk
            return

Let’s look at the unoptimized -O0 version first.

This looks a lot like the add() case, except here we rcall _munge with the value of W14 as an argument by placing it in W0 — we’re passing in the address contained in the frame pointer. Then munge() can read and write this value as appropriate. After munge() completes:

- pop off the saved value of CORCON and put it back into the CORCON register

- copy the munged value of a+b into W0 as the return value

- deallocate the stack frame and return to the caller

Again, no problems here.

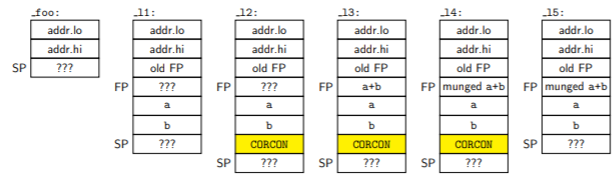

But here’s what happens when this is compiled in -O1:

- _foo → _l1: allocate a two-byte stack frame to store a temporary value

- _l1 → _l2: push the value of CORCON on the stack

- _l2 → _l3: write E2 into CORCON, compute a+b, and store it in the location below the stack pointer = [W15-2]. Uh oh. Here’s where the collision occurs. We saved our CORCON value on the stack, but the compiler doesn’t know it’s there and thinks that [W15-2] is where it allocated the two bytes on the stack, which it owns, and which it can safely modify. If the compiler were aware that we allocated two more bytes on the stack using inline assembly, then it should be storing a+b at [W15-4]… but it’s not aware, and instead, the compiler-generated code overwrites the saved value of CORCON.

- _l3 → _l4: call munge(), passing in W15-2 as an argument by placing it in W0

- _l4 → _l5: our inline assembly is executed, and the CPU pops what we think is the saved value of CORCON back into the CORCON register. But instead, it’s the munged value of a+b.

- _l5 → return from foo(): copy these two allocated bytes on the stack into W0 to use as a return value, then deallocate the two-byte stack frame. Unfortunately the compiler thinks those bytes contain the munged value of a+b, whereas in reality they contain uninitialized memory.

You cannot use inline assembly to allocate stack memory, unless you deallocate it before you return control to the compiler. That is, if you’re going to execute PUSH in a section of inline assembly, that same section has to contain a corresponding POP. Otherwise, your inline assembly conflicts with what the compiler assumes about what’s at the top of the allocated stack, and once this happens, all bets are off; the compiler manages pointers and memory that can be corrupted by our manual meddling with inline assembly, and the result can cause unexpected behavior. This is not a benign failure!

You can try running this yourself if you have a copy of MPLAB X and the XC16 compiler, and you debug using the simulator.

If you step through the code, you will find the results of the collision after foo() returns:

- Instead of restoring its original value, CORCON will contain the munged version of a+b in the bits of CORCON that are writeable (some bits are read-only)

- The result of foo() will be whatever value happened to be at the appropriate place on the stack immediately below where CORCON gets PUSHed and the value of a+b gets stored. (So if the stack pointer W15 contained 0x1006 before the call to foo(), then the “addr.lo” word in the diagram is located at 0x1006, and the result of foo() will be whatever value is contained three words past it, at address 0x100c, whereas a+b will get stored at 0x100e, and munge() will modify the value located there.)
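To make the collision easier to follow, here is a host-side toy replay of the -O1 instruction sequence for foo(), using the addresses from the example above. Everything in it (`sim_t`, `replay_foo_O1`, the idea of preloading a sentinel into the frame slot) is made up for illustration; it ignores CORCON's read-only bits and leaves out the return-address push and pop done by RCALL, which only touch unallocated words above the stack pointer.

```c
#include <stdint.h>

#define STACK_BASE 0x1000u

typedef struct {
    uint16_t mem[32];   /* stack memory, one entry per 16-bit word */
    uint16_t w14, w15;  /* frame pointer, stack pointer */
    uint16_t corcon;    /* stand-in for CORCON */
} sim_t;

static uint16_t *at(sim_t *s, uint16_t addr)
{
    return &s->mem[(addr - STACK_BASE) / 2];
}

static void push(sim_t *s, uint16_t v) { *at(s, s->w15) = v; s->w15 += 2; }
static uint16_t pop(sim_t *s)          { s->w15 -= 2; return *at(s, s->w15); }

/* Stand-in for munge(): add 1 to the word at byte address px. */
static void munge(sim_t *s, uint16_t px) { (*at(s, px))++; }

/* Replays the -O1 listing of foo(); returns what ends up in W0. */
uint16_t replay_foo_O1(sim_t *s, uint16_t a, uint16_t b)
{
    uint16_t w0, w1;

    push(s, s->w14); s->w14 = s->w15; s->w15 += 2;  /* lnk #2           */
    push(s, s->corcon);                             /* push CORCON      */
    s->corcon = 0x00E2;                             /* mov w2,_CORCON   */
    w1 = (uint16_t)(a + b);                         /* add w1,w0,w1     */
    *at(s, (uint16_t)(s->w15 - 2)) = w1;            /* mov w1,[w15-2]: overwrites saved CORCON */
    w0 = (uint16_t)(s->w15 - 2);                    /* dec2 w15,w0      */
    munge(s, w0);                                   /* rcall _munge     */
    s->corcon = pop(s);                             /* pop CORCON: gets munged a+b */
    w0 = *at(s, (uint16_t)(s->w15 - 2));            /* mov [w15-2],w0: never-written slot */
    s->w15 = s->w14; s->w14 = pop(s);               /* ulnk             */
    return w0;
}
```

Starting with the stack pointer at 0x100A on entry (0x1006 before the call), CORCON = 0x0020, a = 3, b = 4, and 0xBEEF preloaded at 0x100C, the replay ends with CORCON = 8 (the munged sum) and a return value of 0xBEEF (whatever was already in the frame slot), which is the failure described above.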

The Right Way to Save CORCON

So how do we fix this? Well, there’s still a way we can save CORCON without corrupting the compiler’s understanding of system state, and that is to just stick it in a local variable:

    for optlevel in [0,1]:
        print("// --- -O%d ---" % optlevel)
        pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    void munge(int16_t *px);

    int16_t foo(int16_t a, int16_t b)
    {
        uint16_t tempCORCON = CORCON;
        CORCON = 0x00e2;
        int16_t result = a+b;
        munge(&result);
        CORCON = tempCORCON;
        return result;
    }
    ''', '-c','-O%d' % optlevel)

    // --- -O0 ---
    _foo:
            lnk #8
            mov w0,[w14+4]
            mov w1,[w14+6]
            mov _CORCON,w1
            mov w1,[w14]
            mov #226,w0
            mov w0,_CORCON
            mov [w14+4],w1
            mov [w14+6],w0
            add w1,w0,w0
            mov w0,[w14+2]
            inc2 w14,w0
            rcall _munge
            mov [w14],w1
            mov w1,_CORCON
            mov [w14+2],w0
            ulnk
            return
    // --- -O1 ---
    _foo:
            lnk #2
            mov w8,[w15++]
            mov _CORCON,w8
            mov #226,w2
            mov w2,_CORCON
            add w1,w0,w1
            mov w1,[w15-4]
            sub w15,#4,w0
            rcall _munge
            mov w8,_CORCON
            mov [w15-4],w0
            mov [--w15],w8
            ulnk
            return

Here the compiler is managing everything, and it can assume that what it allocated on the stack will stay there in the state it intended, unless it modifies the allocated memory itself.
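One way to package this pattern is a small helper that saves the register in a local variable, runs a piece of work, and restores it. This is only a sketch: `fake_corcon` stands in for the real SFR so it can run on a host, and `with_dsp_mode`, `work_fn`, and `add_and_record` are names I made up. If the compiler can see into the callback and inline it, the ordering questions discussed in the next section may still apply.

```c
#include <stdint.h>

/* Stand-in for the CORCON SFR; on the target use the <xc.h> declaration. */
volatile uint16_t fake_corcon;

typedef int16_t (*work_fn)(int16_t a, int16_t b);

/* Save the register in an ordinary local, apply a temporary setting,
 * run the work, then restore. The compiler decides where 'saved' lives
 * (register or stack), so nothing is hidden from it. */
int16_t with_dsp_mode(uint16_t mode, work_fn work, int16_t a, int16_t b)
{
    uint16_t saved = fake_corcon;
    fake_corcon = mode;
    int16_t result = work(a, b);
    fake_corcon = saved;
    return result;
}

/* Example work function that records the mode it ran under. */
uint16_t mode_seen_by_work;

int16_t add_and_record(int16_t a, int16_t b)
{
    mode_seen_by_work = fake_corcon;
    return (int16_t)(a + b);
}
```

Calling `with_dsp_mode(0x00E2, add_and_record, 3, 4)` runs the addition while the stand-in register holds 0x00E2 and leaves the register at its previous value afterwards.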

Other Subtleties

Computational Dependencies and Order of Execution

There’s still that other little bug we ran into in add() under -O1, namely that the addition happened outside of the section of code in which CORCON was saved and restored. This bug will still be there even if we get rid of our use of inline assembly; see below, where the ADD instruction takes place after we’ve restored CORCON:

    pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    int16_t add(int16_t a, int16_t b)
    {
        uint16_t tempCORCON = CORCON;
        CORCON = 0x00e2;
        int16_t result = a+b;
        CORCON = tempCORCON;
        return result;
    }
    ''', '-c','-O1')

    _add:
            mov _CORCON,w2
            mov #226,w3
            mov w3,_CORCON
            mov w2,_CORCON
            add w1,w0,w0
            return

The problem here is that the compiler has no knowledge of data dependency between the content of the CORCON register and the instruction we want to execute. Again — yes, the ADD instruction isn’t affected, but the same problem could occur if we use an accumulator instruction that depends on the CORCON content, like SAC.R:

    pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    register int accA asm("A"); // accumulator A

    int16_t bar(int16_t a, int16_t b)
    {
        uint16_t tempCORCON = CORCON;
        CORCON = 0x00e2;
        accA = __builtin_lac(a+b, 3);
        int16_t result = __builtin_sacr(accA, 4);
        CORCON = tempCORCON;
        return result;
    }
    ''', '-c','-O1')

    _bar:
            mov _CORCON,w2
            mov #226,w3
            mov w3,_CORCON
            add w1,w0,w0
            lac w0, #3, A
            sac.r A, #4, w0
            mov w2,_CORCON
            return

In this case it doesn’t, but it’s unclear to me whether you can depend on this C code to work — in other words, whether the compiler knows it can’t reorder an accumulator __builtin with respect to a volatile memory access.

We can force our add() function to not reorder by computing the result in a volatile local variable.

    pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    int16_t add(int16_t a, int16_t b)
    {
        uint16_t tempCORCON = CORCON;
        CORCON = 0x00e2;
        volatile int16_t result = a+b;
        CORCON = tempCORCON;
        return result;
    }
    ''', '-c','-O1')

    _add:
            lnk #2
            mov _CORCON,w2
            mov #226,w3
            mov w3,_CORCON
            add w1,w0,w1
            mov w1,[w15-2]
            mov w2,_CORCON
            mov [w15-2],w0
            ulnk
            return

Unfortunately this causes the compiler to put the sum on the stack rather than just stick it in the W0 register, as desired. It’s very difficult to convince the compiler to do what you want, and be sure that you have done it correctly, which is why mode bits can be a real pain in cases like this.

You can also try to use “barriers” to force the compiler to assume certain dependency constraints in its computations. These are basically empty blocks of inline assembly, that utilize the extended assembly syntax to express those constraints, but it can be very tricky to ensure that your code is correct.

    pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    int16_t add(int16_t a, int16_t b)
    {
        uint16_t tempCORCON = CORCON;
        CORCON = 0x00e2;
        asm volatile("" :"+r"(a));
        // don't actually do anything, but tell the compiler
        // that the value of "a" might depend on this assembly code
        int16_t result = a+b;
        asm volatile("" ::"r"(result));
        // don't actually do anything, but tell the compiler
        // that this assembly code might depend on the value of "result"
        CORCON = tempCORCON;
        return result;
    }
    ''', '-c','-O1')

    _add:
            mov _CORCON,w2
            mov #226,w3
            mov w3,_CORCON
            add w0,w1,w0
            mov w2,_CORCON
            return

CORCON and Fixed-Point

Finally, one of the notes in section 12.3 of the XC16 C Compiler User’s Guide is that the compiler also manages CORCON in some cases:

The registers that are special and which are managed by the compiler are: W0-W15, RCOUNT, STATUS (SR), PSVPAG and DSRPAG. If fixed point support is enabled, the compiler may allocate A and B, in which case the compiler may adjust CORCON.

In the code below, the compiler adds its own push _CORCON and pop _CORCON instructions at the beginning and end of the function, but doesn’t seem to modify CORCON, and the ordering of the computations gets rearranged (the CORCON = tempCORCON translates into mov w2,_CORCON, which executes before any of the _Fract/_Accum code even runs).

    pyxc16.compile(r'''
    #include <stdint.h>

    extern volatile uint16_t CORCON;

    int16_t baz(int16_t a, int16_t b)
    {
        uint16_t tempCORCON = CORCON;
        CORCON = 0x00e2;
        _Fract af = a;
        _Fract bf = b;
        _Accum acc = 0;
        acc += af*bf;
        _Fract result = acc >> 15;
        CORCON = tempCORCON;
        return (int16_t)result;
    }
    ''', '-c','-O1', '-menable-fixed')

    _baz:
            push _CORCON
            mov _CORCON,w2
            mov #226,w3
            mov w3,_CORCON
            mov w2,_CORCON
            cp0 w1
            mov #0x8000,w2
            btsc _SR,#0
            mov #0x7FFF,w2
            btsc _SR,#1
            clr w2
            cp0 w0
            mov #0x8000,w1
            btsc _SR,#0
            mov #0x7FFF,w1
            btsc _SR,#1
            clr w1
            mul.ss w2,w1,w4
            sl w4,w4
            rlc w5,w0
            mov w0,w1
            clr w0
            asr w1,#15,w2
            mov #15,w3
            dec w3,w3
            bra n,.LE18
            asr.b w2,w2
            rrc w1,w1
            rrc w0,w0
            bra .LB18
    .LE18:
            mov w1,w1
            asr w1,#15,w0
            pop _CORCON
            return

The _Accum and _Fract types have certain semantics as defined in ISO C proposal N1169 (Extensions to support embedded processors), but I’m not familiar enough with them to give you advice on how to use them.

Wrapup

We talked about how not to interact with the C compiler in inline assembly — basically don’t mess with any of the CPU resources managed by the compiler, because the compiler assumes certain things about the state of the CPU at all times. These resources include core CPU registers and the stack.

As a metaphor for this dangerous behavior, I cited the March 2018 traffic fatality involving a jaywalking pedestrian and a self-driving vehicle. Improper assumptions can be deadly. Don’t jaywalk around the compiler. Please be careful.

© 2019 Jason M. Sachs, all rights reserved.

- Comments


Excellent article! Basically:

- Thou shalt not confuse the compiler

- Thou shalt understand the optimization results

- Thou shalt understand the compiler actions can change over revisions

- Thou shalt not do stupid stuff

The other safe way around this issue is write a function in ASM and control everything. The problem of writing it in C is the results are hidden - as Jason clearly and expertly shows.

This is the kind of "technique" that can take days (if one is really lucky) to find, and probably not even looked for until something Really Bad (tm) happens - like somebody dies.

Jason: Well Done!

I absolutely agree that you shouldn't mess around with compiler-managed resources. The compiler I use, IAR, actually allows inline assembler with a syntax that tells the compiler what was used and what was destroyed. Even so, I've never found any reason for using inline assembler outside of a crash handler I wrote that performed a register and stack dump. Even special registers have intrinsic functions supplied by the compiler. I've found that if I need to optimize code for speed, I can do it in C and the optimizer will generate better code than if I try to do it in assembler (it knows a few tricks I don't).

Excellent Jason, Thanks!

Likewise, only time I've been forced to use inline assembly is for OS internals and crash-dump.

And then, one must carefully check assembly output to ensure no missing barriers or other errors...

Thanks again!
