Important Programming Concepts (Even on Embedded Systems) Part III: Volatility

vol·a·tile adjective \ˈvä-lə-təl, especially British -ˌtī(-ə)l\

: likely to change in a very sudden or extreme way

: having or showing extreme or sudden changes of emotion

: likely to become dangerous or out of control

— Merriam-Webster Online Dictionary

Other articles in this series:

- Part I: Idempotence

- Part II: Immutability

- Part IV: Singletons

- Part V: State Machines

- Part VI: Abstraction

Believe it or not, when I first thought of writing this group of articles, I was going to write one article on five concepts. I figured, hey, the basics are pretty simple, I’m going to keep it short and sweet. The basics are pretty simple — but then some interesting subtleties got in the way, and I figured I’d better keep each concept separate, and it was a good thing, because immutability got to be a rather long article. I promised myself that volatility was going to be much shorter, because there just wasn’t much to say. And it will be shorter — just not for the reason I originally thought.

The importance of volatility in programming, when you get down to the core idea, is really just the recognition that some data can change unpredictably and without warning. And if you are sure, and I mean REALLY sure, that you understand all the implications of that, go ahead, mark it TL;DR and move on to something else.

Okay, so the last article was on immutability, this article is on volatility… isn’t that just the opposite of immutability? Well… maybe yes for the core concept, but not the implications.

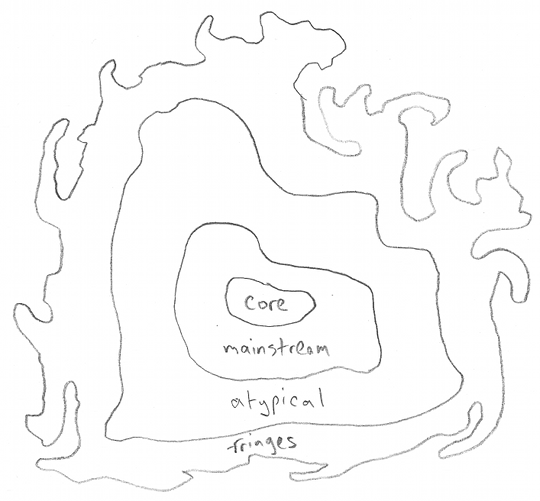

I discussed a number of things in the immutability article that fall under the topic of functional programming. Let’s think of the field of programming as a kind of tower, where the position in the tower corresponds to some assessment of the conceptual perspective involved. The software engineers working with embedded microcontrollers are near the ground floors; the engineers doing game programming are a little higher; the engineers working on word processors and tax-assistance software and other desktop applications are in the middle; the guys working with large-scale distributed systems are further up; the Functional Wizards doing programming language research are at the top. (And the guys writing compilers are running around all over the place.) But you’ve also got the posse in the basement and sub-basement, down in there with the electric switchgear, and the water and sewer pipes, and the boiler belching fire and all that. We rely on them every day, but we’re kind of scared of them and don’t quite trust them. So who are they, anyway?

Volatility 101: Pay no attention to that man behind the curtain!

Let’s ignore the posse in the basement for now. Forget I even mentioned them.

Time for a different analogy: Pretend you are a bachelor, a prince of solitude. You own your own house, in the middle of a large, quiet, wooded lot, a few hundred feet from the road and from any of your neighbors. You live there all by yourself, no girlfriend, no pets.

You decide one day to scribble a note on the back of a dog-eared envelope in the middle of a pile of old bills on one end of the sofa. Maybe it’s a thought about a stock tip, maybe it’s about beer brewing. It’s your house, so it’s your prerogative.

Six months later, the pile is still there, on one end of the sofa, and you decide to pick up the dog-eared envelope and read the note. It’s just as you left it.

Your friend Jay had a similar thought one day, which he wrote on the back of an envelope on the sofa. But Jay lives with his wife, who picked up the envelope while tidying up the living room a few hours later, and threw it away. The next day Jay went to find the envelope, and it was gone, and he and his wife argued about it. Blah blah blah I can’t find anything You don’t respect all the time I put in to keep this place clean Blah blah blah.

Simply put: Jay lives in a world that is more volatile than yours.

Okay, I hope you got the analogy. Programming is a way of manipulating your computing environment, and if you’re the only entity doing it, you can make a lot of assumptions. Single-threaded programming on a desktop PC is a piece of cake. It’s like your bachelor house. Set a variable called doodad in your program to the value 367 one day, and as long as the program is still running, and you don’t change it yourself, it will remain 367 forever. In fact, you don’t even have to look at it. You know it will be 367.

Volatility comes into play when there are other entities at work in your computing environment.

Volatility Source #1: Other Threads

One of them is probably obvious: concurrent threads and processes. Let’s say you’re writing an application which has two threads, a main thread and a worker thread, which share the variable doodad. Just because the main thread writes 367 to doodad doesn’t mean that the worker thread isn’t going to write some other value there. In fact, in order for these threads to coexist, they need to have some ground rules. It’s not like your friend Jay’s house. Jay looked for the note on the sofa and recognized that it wasn’t there. Software programs don’t have that luxury. If Jay had been a software program, he would have looked on the sofa, located his daughter’s teen magazine at the exact same place, and instead of finding his note on beer brewing ingredients, he would have read the text miley cyrus finds radioactive cantaloupe, brewed a batch of beer with the wrong ingredients, and eventually died along with everyone else who drank it.

There is no single best approach for sharing data among concurrent threads. The easiest and safest approach is that of exclusive access: only one thread of control may access the data at a time. (Jay can use the sofa and write a note on the back of an envelope, but when he’s done, he has to put it somewhere safe. Same with his daughter and the teen magazine. Leave the sofa, and you have to put your stuff away.) In software, we use locks or mutexes to control exclusive access. All of the threads involved must agree to follow the same rule: in order to access the variable doodad, each one has to acquire the lock doodad_lock, perform any reads and writes, and then release doodad_lock.
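Here is a minimal sketch of that ground rule in C, assuming a POSIX system with pthreads. The names doodad and doodad_lock come from the discussion above; the three helper functions are hypothetical, just one way of making sure nobody touches doodad without holding the lock.

```c
#include <pthread.h>

/* Shared data and the lock that guards it. */
static int doodad = 0;
static pthread_mutex_t doodad_lock = PTHREAD_MUTEX_INITIALIZER;

/* Every thread agrees to touch doodad only while holding doodad_lock. */
void set_doodad(int value)
{
    pthread_mutex_lock(&doodad_lock);
    doodad = value;
    pthread_mutex_unlock(&doodad_lock);
}

int get_doodad(void)
{
    pthread_mutex_lock(&doodad_lock);
    int value = doodad;
    pthread_mutex_unlock(&doodad_lock);
    return value;
}

/* A read-modify-write has to happen under a single acquisition of the
 * lock. Calling get_doodad() and then set_doodad() would leave a gap in
 * which another thread could change the value. */
int add_to_doodad(int delta)
{
    pthread_mutex_lock(&doodad_lock);
    doodad += delta;
    int value = doodad;
    pthread_mutex_unlock(&doodad_lock);
    return value;
}
```

The lock only helps if every piece of code that reads or writes doodad goes through functions like these. One thread that skips the lock breaks the agreement for everybody.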

There are other approaches which can increase performance, like single-writer multiple-reader locks or lock-free techniques, but they are more complicated, and it is harder to guarantee that they are correct. In general, if you’re not fluent in concurrent programming, don’t use these techniques on “real” programs, and keep them confined to your own private experiments. This article is not about concurrency in general, so I’m not going to go into detail about the more complicated techniques. If you’re programming in Java, the classic reference is Java Concurrency in Practice by Brian Goetz, Tim Peierls, Joshua Bloch, Joseph Bowbeer, Doug Lea, and David Holmes. In any case, if you’re just getting into concurrency, best of luck. It can be a scary world.

Volatility Source #2: Ghost in the Machine

The other sources of volatility are due to software and hardware features in the underlying computing environment. Software sources are associated with an operating system, for example, and are rarely accessible directly, unless you decide not to follow the rules and start hacking around into OS-managed private data.

The hardware sources of volatility are probably more familiar to embedded software engineers. These include any of the special function registers in microcontrollers that interact with I/O ports or peripherals. You are probably familiar with at least some of them: timers, counters, status registers, that sort of thing. In microcontrollers without an operating system, it’s up to you to remember that many of these registers can change at any time.

The classic concern here is the read-modify-write problem. Let’s say you want to change the state of just one pin of an output port. But your microprocessor is an 8-bit or 16-bit processor, and in order to change the output you have to write the whole memory location. To change one output pin, you have to read the old value, change the appropriate bit, and write it back. In some of the 8-bit PIC microcontrollers, reading the old value means accessing the actual logic level present on the pins, which can change at any time: inputs are subject to whatever hardware is connected to them, and outputs can be altered by momentary short-circuit faults. So the safe way to handle I/O ports on these processors is to maintain a private copy of the desired output pin states. When you want to change an output pin, you modify the copy, then write the copy to the output port register. This way the data only flows one way, and you only ever write from the copy to the output ports. Newer processors have separate registers for each direction, one set of data latch registers for writing outputs and another set of port input registers for reading the pin state. Or they have special-purpose registers for setting/clearing/toggling bits: you write a 1 to the appropriate bit of one of these special registers, and the pin state will change; writing a 0 leaves the corresponding pin unchanged. TI’s C2000 processors have registers of this type.
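The private-copy approach can be sketched in a few lines of C. This is only an illustration: PORT_REG, port_shadow, and port_write_pin() are made-up names, and an ordinary volatile variable stands in for the memory-mapped port register so the code compiles on any machine.

```c
#include <stdint.h>

/* Stand-in for a memory-mapped output port register. On real hardware
 * this would be a fixed address defined in the vendor's device header. */
static volatile uint8_t PORT_REG;

/* Private copy of the output states we want. Only our code changes it. */
static uint8_t port_shadow;

/* Drive one pin high or low: update the shadow copy, then write the
 * whole register from it. The port register itself is never read back. */
void port_write_pin(uint8_t pin, int level)
{
    uint8_t mask = (uint8_t)(1u << pin);
    if (level)
        port_shadow |= mask;
    else
        port_shadow &= (uint8_t)~mask;
    PORT_REG = port_shadow;
}
```

Because the register is never read, a glitch on the pins can’t leak back into the value we write. If an interrupt service routine also changes pins on the same port, the update of port_shadow would need to be protected as well, which this sketch does not attempt.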

Programming Language Support for Volatility

In the immutability article, I talked about the const keyword in C/C++ and the final keyword in Java. Both languages have a volatile keyword, which I’ll describe, but I have some hesitation in doing so. You’ll occasionally need to use volatile in C and C++, but in Java, you should not be using volatile unless you really know what you are doing. (Ironically the semantics of volatile in Java are more useful and are more clearly defined than they are in C and C++.)

In both languages, the volatile keyword is not really for your benefit; instead, it tells the compiler that it needs to expect that another source of control (another thread, the operating system, or the hardware itself) may be modifying data asynchronously, and therefore the compiler cannot make certain optimizations. The classic example in C is a blocking loop:

volatile bool ready_flag;

void wait_flag()

{

ready_flag = false;

while (!ready_flag)

pause_for_an_instant();

}

void signal_flag()

{

ready_flag = true;

}

Here, one thread can call wait_flag() and execute the while loop until a second thread calls signal_flag(). If the volatile qualifier is not there, then the compiler is free to optimize access to ready_flag and decide that since it has just set ready_flag = false; right before the loop, it can assume that ready_flag is always false and the while loop will never exit. Including volatile tells the compiler it must not make that assumption, and that each evaluation of the contents of the C variable ready_flag requires a memory read from its storage location. Similarly, any assignment to ready_flag actually requires a memory write. For example, suppose you have this in C:

volatile int answer_to_the_universe;

void something_or_other()

{

answer_to_the_universe = 54;

answer_to_the_universe = 42;

}

In this case, the compiler is required to write 54 to memory and then 42 to memory. If answer_to_the_universe weren’t declared as volatile, the compiler could look at this program and decide, “Hey, look, that moron programmer is doing stupid things again. He’s writing twice to the same variable. That first assignment of 54 never gets used so I can just optimize it out.” But with volatile, it does exactly what you ask. This example isn’t actually that far-fetched; some embedded processors require certain back-to-back sequences of writes to the same system register to unlock areas of memory and allow them to be changed.
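As a rough illustration of such an unlock sequence, here is a sketch with an invented key register. UNLOCK_KEY_REG and the two key values are hypothetical; real parts document their own register addresses and sequences in the datasheet, and a plain volatile variable stands in for the register here.

```c
#include <stdint.h>

/* Hypothetical key register and key values, for illustration only. */
static volatile uint16_t UNLOCK_KEY_REG;
#define UNLOCK_KEY_1 0x55AAu
#define UNLOCK_KEY_2 0xAA55u

void unlock_protected_registers(void)
{
    /* Because the register is volatile, both stores must be emitted, in
     * this order. Without volatile, the first would be a dead store that
     * the compiler could drop. */
    UNLOCK_KEY_REG = UNLOCK_KEY_1;
    UNLOCK_KEY_REG = UNLOCK_KEY_2;
}
```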

Back to our wait_flag() and signal_flag() example:

In a system with an OS, pause_for_an_instant() should call an appropriate sleep() or wait() function to release control to the OS. In low-level embedded systems without an OS, if signal_flag() is called in an interrupt service routine, and there’s nothing else for mainline code to do but wait, it’s OK to use a spin loop:

void wait_flag()

{

ready_flag = false;

while (!ready_flag)

;

}

Also, the volatile qualifier acts similarly to the const qualifier, in that it is a constraint. Variables marked volatile cannot be passed by reference to a function, unless that function’s argument is a volatile * or volatile & (in C++). If we want to rewrite the wait_flag() and signal_flag() functions so they don’t access global variables, we’d have to use this syntax:

void wait_flag(volatile bool *pready_flag)

{

*pready_flag = false;

while (!*pready_flag)

pause_for_an_instant();

}

void signal_flag(volatile bool *pready_flag)

{

*pready_flag = true;

}

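Unless we use the volatile qualifier in the function signature, we cannot pass the address of a volatile variable to the function. If we remove the volatile, the compiler will complain: a C++ compiler rejects the call outright, and a C compiler typically issues at least a warning about discarding the qualifier.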

/* Wrong! This version of wait_flag()

* allows the compiler to optimize out

* the read access to pready_flag

* at the top of the while() loop

* incorrectly, and it will cause

* a compilation error if you call

* wait_flag_bad() with a pointer to volatile.

*/

void wait_flag_bad(bool *pready_flag)

{

*pready_flag = false;

while (!*pready_flag)

pause_for_an_instant();

}

This is the key concept you must know if you are going to be working with peripheral registers in embedded systems, even if you have no intention of mucking around with concurrency. If you want to write functions that accept an address of a volatile peripheral register, such as a routine that can write bytes to one of several serial ports, the function parameter has to include a volatile qualifier. Also, like the const keyword, the compiler will automatically promote non-volatile pointers and references to volatile, but to go the other way is not safe. (In other words, wait_flag() can be called with a non-volatile pointer, but wait_flag_bad() can’t be called with a volatile pointer.)
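To make the serial port case concrete, here is a small sketch. The names uart_write_bytes(), UART1_TX, UART2_TX, and send_greeting() are all hypothetical, and ordinary volatile variables stand in for the transmit registers so the code compiles anywhere.

```c
#include <stddef.h>
#include <stdint.h>

/* Write n bytes, one at a time, to the transmit register at tx_reg.
 * The volatile in the parameter type forces one real store per byte.
 * A real driver would also wait for the transmitter to be ready before
 * each write; that is left out to keep the sketch short. */
void uart_write_bytes(volatile uint8_t *tx_reg, const uint8_t *data, size_t n)
{
    for (size_t i = 0; i < n; ++i)
        *tx_reg = data[i];
}

/* Stand-ins for the transmit registers of two serial ports. */
static volatile uint8_t UART1_TX;
static volatile uint8_t UART2_TX;

/* The same routine serves either port; only the address changes. */
void send_greeting(int port)
{
    static const uint8_t msg[] = { 'h', 'i' };
    uart_write_bytes(port == 1 ? &UART1_TX : &UART2_TX, msg, sizeof msg);
}
```

Passing the address of a plain uint8_t variable to uart_write_bytes() also compiles cleanly, since adding the volatile qualifier is always allowed.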

Note that const and volatile are not exclusive: const just tells the compiler that you promise not to change the data, whereas volatile tells the compiler that something else might be changing the data. So functions that read peripheral registers by address, but do not write them, should look like this:

bool data_available (const volatile uint16_t *uart_status_ptr)

{

return (*uart_status_ptr & DATA_AVAILABLE_BIT) != 0;

}

The const keyword is optional; it helps the compiler check that you are faithful to your promise not to write through the pointer. The volatile keyword is required if you pass in the address of a volatile register.

When else should you use volatile in C and C++?

Here we leave that well-traveled, bright open road, and enter the shady back alley, where fools rush in and angels fear to tread. That’s right, it’s Undefined Behavior Lane. This is where the C Standard effectively says that anything can happen. Technically, if you write C code that includes undefined behavior, the compiler can create object code that does anything. Anything! It could erase your hard drive. In practical terms that wouldn’t happen, but the compiler can behave in ways you wouldn’t expect because it’s allowed to do anything. In effect, the standard is telling the compiler that it doesn’t have to be responsible, that any action it takes to handle undefined behavior is okay, because the programmer will never do that. Right? If the source code includes undefined behavior, the compiler can drop all the symbols with q’s in them, it doesn’t have to get the stack accesses correct, it can just chill out and create an infinite loop that spits out 0xCAFEBABE to the console. Whatever the compiler does is okay, because it’s one of those cases that will never happen. A program in C will never try to dereference a null pointer because it’s undefined behavior. It’s the program writer’s responsibility to make sure it doesn’t happen.

A short distance away from Undefined Behavior Lane is another thoroughfare, Implementation-Defined Behavior Way, where the compiler can do anything but it has to document that behavior. So at least it has to tell you if it’s going to be irresponsible… and in practice that means it’s going to try to do the right thing, at least in the opinion of the compiler authors. Take a look at Appendix A of the XC16 Compiler User’s Guide, for example — here’s 12 pages covering everything from what happens if you right-shift a negative signed integer, to what the value of the character constant '\n' is, to what happens when you cast an integer to a pointer or a pointer to an integer.

Remember this diagram? Undefined Behavior Lane and Implementation-Defined Behavior Way are scattered among the fringes of the language, and if you want to tramp around in this part of the world, you’d best have your copies of the language standard and the compiler manual with you, and understand exactly what they’re saying.

The volatile keyword leads to these fringes… here’s something the XC16 User’s Guide has to say about volatile:

Another use of the volatile keyword is to prevent variables being removed if they are not used in the C source. If a non-volatile variable is never used, or used in a way that has no effect on the program’s function, then it may be removed before code is generated by the compiler.

Let’s say I wanted to create a runtime-determined delay, for example:

void delay(uint16_t n)

{

uint16_t i;

for (i = 0; i < n; ++i)

;

}

The compiler is free to optimize out the loop because it has no observable effect: the variable i is never used for anything else. One way to fix this (at least for the XC16 compiler; I’m not sure this technique is portable) is as follows:

void delay(uint16_t n)

{

volatile uint16_t i;

for (i = 0; i < n; ++i)

;

}

Another use of volatile in C is that it may help you prevent the compiler from reordering memory accesses among volatile variables. Maybe. From what I’ve read this is one of those things the C standard hasn’t documented clearly and so it’s up to the interpretation of the compiler.

In Java, volatile has some very specific semantics: it essentially guarantees certain ordering constraints of memory reads and writes among threads. This is really hard to use correctly, and since there are plenty of built-in concurrency features, you should be using them rather than getting your fingers dirty with volatile.

And here’s where we get to the posse in the basement. Don’t worry, we won’t visit very long, because it’s kind of scary down there.

(By the way, other computer languages have a mix of approaches: Fortran has a C-like volatile keyword; Python, Ruby, Go, and Rust do not, preferring to keep this behavior out of the core language and leave it up to standard libraries, which is probably the better choice.)

Volatility 304: Yoda, Memory Models, and the Posse in the Basement

Ready are you? What know you of ready? — Yoda, The Empire Strikes Back

Oh, I love a good excuse to surf the Web for Star Wars quotes. You remember Yoda and his weird way of talking, right? Why is it weird? In part, because he orders words differently from the way you would hear a native English speaker. We can still understand him because the words have an equivalent meaning.

Reordering can occur in computer programs as well. We tend to learn about computer programming as a way of specifying a sequence of steps. We write these sequences and the compiler translates them directly into assembly language, right? Not quite. There are two reasons the order in which you write statements in a computer program is not necessarily the order in which they are executed on a machine.

Imagine that you’re at work at the end of a hot summer day, and your roommate calls to ask you to pick up some ice cream at the grocery store and a copy of The Phantom Tollbooth at the library. If you’re smart, you would probably get the book first and then the ice cream, so that the ice cream is less likely to melt. But that involves judgment that it’s bad to allow ice cream to melt, so let’s forget about that for a moment. On the other hand, let’s say you have a shorter route if you go to the library, get the ice cream, and then go home, than if you get the ice cream first and then go to the library. In any case, there’s really no restriction on which you can get first; both are equivalent choices, but one choice of ordering is more optimized.

In the same way, the compiler can reorder program statements to optimize the program size or execution time, when it reduces them from a source file to the compiled output, as long as it doesn’t change the program semantics.

Even if the compiler doesn’t reorder your memory accesses, there’s another thing to think about. Again, when we learn about computer programming, we tend to think of memory access as though the computer has one big set of cubbyholes where reads and writes happen in some sequence. In a single-threaded program, we think this happens as a single sequence of accesses, like a student working in the lobby of their college dormitory, moving things from one cubbyhole to another, one at a time. In a multi-threaded program, we think this happens in parallel, like a number of dorm workers all moving things around in the lobby.

The problem is that this doesn’t match what’s actually going on in a modern multicore processor. Because in many processors, there’s a cache between each processor core and the memory. (So it’s more like each of those dorm workers has their own set of cubbyholes, and there’s some magic pneumatic tubes that keep the cubbyholes in sync, and swap in and out different pieces of a much larger set of storage locations.) And if you were to look at the memory from each core’s point of view (through the cache), the order of events might seem different. Not because the compiler is doing something different, but because the processor itself has to optimize memory access, and to do so, it is allowed to reorder memory accesses and the way they are visible to other cores, as long as they don’t change the semantics of operations within each thread of control.

The guys in the basement are the processor architects and designers and library writers who have to deal with this world of caching and memory models and barriers. They’re professionals, and they know what they’re doing. But you really don’t want to stay in this level of thinking; instead you should use libraries which have appropriate memory semantics documented so you can think about things at a higher level. The basement posse uses memory barriers and worries about things like the Java happens-before relationship.

Please note that C’s volatile qualifier does not necessarily guarantee memory access ordering. If you need to ensure that thread A writes variable foo before variable bar, and you want thread B to see foo change before bar does, you need to use the library functions and intrinsics properly. Java’s volatile is specified with certain memory ordering semantics, but again, use the concurrency libraries rather than trying to build something yourself just using volatile.
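For C, one such library facility is the C11 header stdatomic.h. Here is a minimal sketch, assuming a C11 compiler that provides it. The variables foo and bar play the roles from the paragraph above, with bar acting as a flag that publishes foo; the function names publish() and try_consume() are hypothetical.

```c
#include <stdatomic.h>
#include <stdbool.h>

static int foo;          /* ordinary data, written before bar */
static atomic_bool bar;  /* flag that publishes foo; starts out false */

/* Thread A: write foo, then set bar with release ordering, so the write
 * to foo cannot be reordered after the write to bar. */
void publish(int value)
{
    foo = value;
    atomic_store_explicit(&bar, true, memory_order_release);
}

/* Thread B: if the acquire load sees bar == true, the earlier write to
 * foo is guaranteed to be visible too. Returns false if nothing has been
 * published yet. */
bool try_consume(int *out)
{
    if (!atomic_load_explicit(&bar, memory_order_acquire))
        return false;
    *out = foo;
    return true;
}
```

Note that there is no volatile anywhere in this sketch. The ordering guarantee comes from the release/acquire pair on bar, not from any qualifier on foo.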

I’m not going to say any more about this issue, other than to point out a few articles for further reading. Let’s leave the basement posse to their work, and don’t forget that we depend on them a lot.

Wrapup

To recap:

- Volatility in computer programming is when data can change from an external agent that is outside of a program’s thread of control. That agent can be another thread of control, or the operating system, or the computing hardware itself. (Particularly control registers in embedded microprocessors.)

- The volatile qualifier in C has a couple of important aspects:

  - It is used to denote program variables that are subject to external change.

  - It requires the compiler to perform memory reads and writes when program variables are evaluated or assigned.

  - It can also prevent the compiler from optimizing out apparently “useless” calculations, at least in some compilers.

  - Functions accepting arguments that are pointers (or references in C++) that are not qualified with volatile can accept only non-volatile inputs. If the function signature has an argument qualified with volatile, it can accept pointers to either volatile or non-volatile variables.

- The volatile keyword in Java is used to denote certain types of synchronization of memory accesses between threads. Don’t use it yourself; instead, use the higher-level facilities in java.util.concurrent.

- Writing statements in a particular order in a C or Java program doesn’t mean the compiler and the processor can’t reorder them, as long as it doesn’t change the resulting computations. Understanding all of the mechanisms involved in memory access reordering is tricky, and it’s a lot easier to leave it to the experts and instead use libraries that are designed and tested in well-specified ways.

Further reading in the basement

If you do want to find out more about the posse in the basement, here are some resources you might find useful.

- volatile: The Multithreaded Programmer’s Best Friend, Andrei Alexandrescu

- volatile vs. volatile, Herb Sutter

- Nine ways to break your systems code using volatile, John Regehr

- Should volatile Acquire Atomicity and Thread Visibility Semantics?, Hans Boehm

- Volatile: Almost Useless for Multi-Threaded Programming, Arch D. Robison

- Volatile, Ian Lance Taylor

- JSR 133 (Java Memory Model) FAQ, Jeremy Manson and Brian Goetz

- Memory Consistency Errors, Oracle Corp.

- Atomicity, volatility and immutability are different, part three, Eric Lippert

- Sayonara volatile, Joe Duffy

- Memory Ordering in Modern Microprocessors, Paul E. McKenney

And no discussion of modern memory models would be complete without referring to Jeff Preshing’s blog:

- Memory Reordering Caught in the Act

- Memory Barriers Are Like Source Control Operations

- Acquire and Release Semantics

- This Is Why They Call It a Weakly Ordered CPU

- The Happens-Before Relation

- The Synchronizes-With Relation

- Acquire and Release Fences

- Acquire and Release Fences Don’t Work the Way You’d Expect

Coming up next: singletons

© 2014 Jason M. Sachs, all rights reserved.
